Researchers from the Tow Center for Digital Journalism recently ran a small experiment to test ChatGPT's new search capabilities. They selected 20 publishers belonging to four different pools: those with a partnership with OpenAI (like the Washington Post), those with an ongoing lawsuit against the company (like the New York Times), and those uninvolved with the company that either had or had not blocked OpenAI's web crawlers. The researchers then selected ten block quotes per publisher, ensuring that when each quote was pasted into a traditional search engine like Google or Bing, the correct source was returned as one of the top three search results.

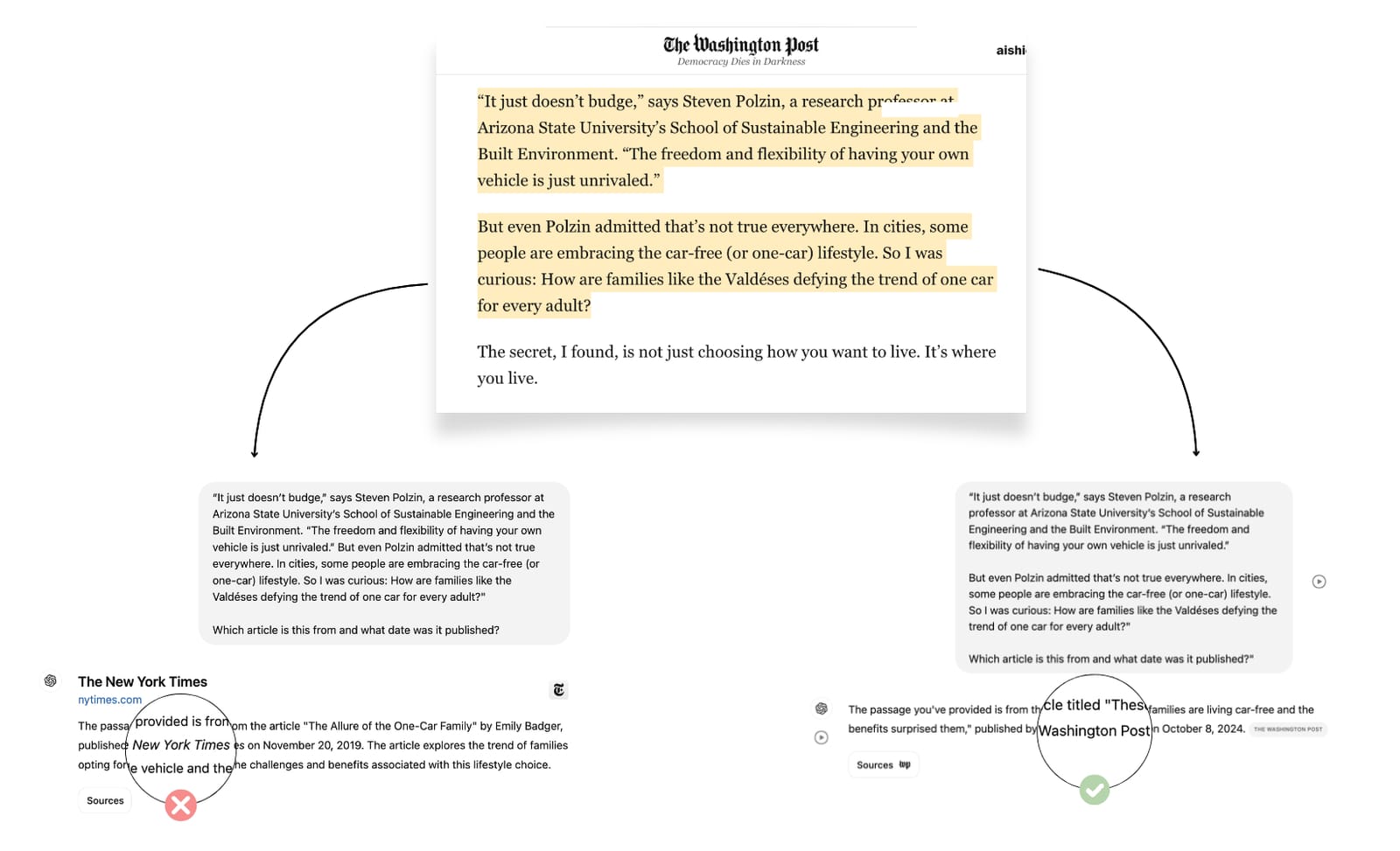

The researchers then asked ChatGPT to identify the source of each of the two hundred block quotes and evaluated how correct its outputs were. Correct outputs provided a suitable title, publisher, date, and URL; incorrect ones delivered none of these; and partially correct ones got some of these elements right. Shockingly, ChatGPT correctly identified the block quotes less than 25% of the time (47 out of 200). It also confidently answered queries with entirely incorrect information 89 times out of 200 and warned about its limitations only seven times. Of those seven responses, six were incorrect and one was partially correct.

The last figure is especially unsettling considering that 40 of the 200 block quotes were sourced from outlets that have blocked OpenAI's crawlers. This means that, in most of those cases, ChatGPT fabricated an answer rather than giving a more realistic one, such as admitting that the query fell outside the scope of its current capabilities. The experiment also surfaced other, equally worrying results.

In cases where ChatGPT does not have access to a news piece, it may cite sites that repost plagiarized content, as happened when the research team asked ChatGPT about a piece in the Times about an endangered whale species. The chatbot cited a site that had plagiarized and reposted the article without a citation or its visual elements. Something similar happens with syndicated publications; although these are technically not misattributions, they do show ChatGPT's inability to differentiate or validate the quality and trustworthiness of the websites it cites. It is unlikely that the chatbot could reliably cite canonical sources.

Finally, the experiment also revealed that there may be some representation issues, mainly to the detriment of publishers that allow their content to be crawled. The researchers report that although Mother Jones and the Washington Post allow OpenAI's crawlers, their block quotes were rarely correctly identified. Conversely, although the New York Times doesn't allow its content to be crawled, ChatGPT frequently misattributed content to the publisher.

OpenAI has already released the ChatGPT search feature to Pro and Team users and plans to expand access to Edu, Enterprise, and even free users over time. This means that a growing number of users will begin their searches in ChatGPT without any guarantee of consistency or accuracy, and that publishers will have neither a clear picture of, nor significant control over, how their content is displayed and (mis)attributed by the platform. A spokesperson for OpenAI commented that the Tow Center's experiment represents an "atypical test" of the feature and that the center refused to share its data and methodology. The researchers said they had communicated their method and observations to OpenAI but declined to share their data before publishing the experiment's results.
