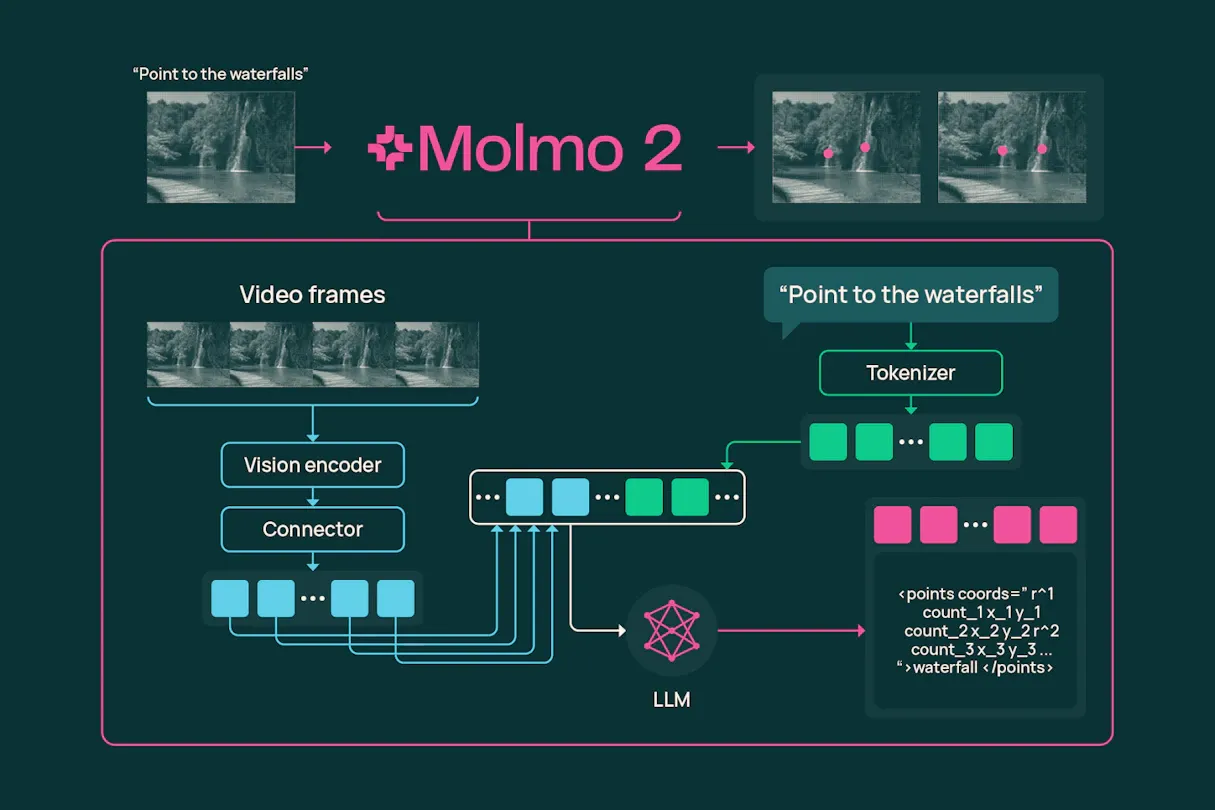

Following the recent announcement of Olmo 3.1, an updated checkpoint of the Olmo 3 language models, the Allen Institute for AI (Ai2) this week released Molmo 2, a state-of-the-art open multimodal model family that brings video comprehension, object tracking, and multi-image reasoning to the open-source community.

Building on last year's Molmo, which pioneered image pointing for AI systems, Molmo 2 introduces video pointing, multi-frame reasoning, and precise temporal understanding. The 8B-parameter model surpasses its 72B-parameter predecessor in accuracy and pixel-level grounding, while outperforming proprietary models like Gemini 3 on video tracking tasks.

Molmo 2 is also data-efficient: it was trained on just 9.19 million videos, compared with 72.5 million for Meta's PerceptionLM. Despite the smaller training set, the compact 4B variant outperforms larger open models such as Qwen3-VL-8B on image and multi-image reasoning benchmarks.

Molmo 2's capabilities address critical needs in robotics, autonomous vehicles, industrial systems, and scientific research. The models can identify precise pixel coordinates and timestamps for events, maintain consistent object tracking through occlusions and scene changes, and produce detailed long-form video captions with anomaly detection.

"Molmo 2 surpasses many frontier models on key video understanding tasks with a fraction of the data," said Ali Farhadi, Ai2's CEO. "We are excited to see the immense impact this model will have on the AI landscape."

True to Ai2's commitment to openness, all model weights, nine new training datasets totaling over nine million examples, and evaluation tools are publicly available on GitHub, Hugging Face, and the Ai2 Playground. The captioning corpus alone includes over 100,000 videos with descriptions averaging 900 words each.
