Anthropic has released Claude Opus 4.5, positioning it as the world's best model for coding, agents, and computer use. The launch comes with a significant price cut, to $5/$25 per million input/output tokens, which makes enterprise-grade AI capabilities more accessible.

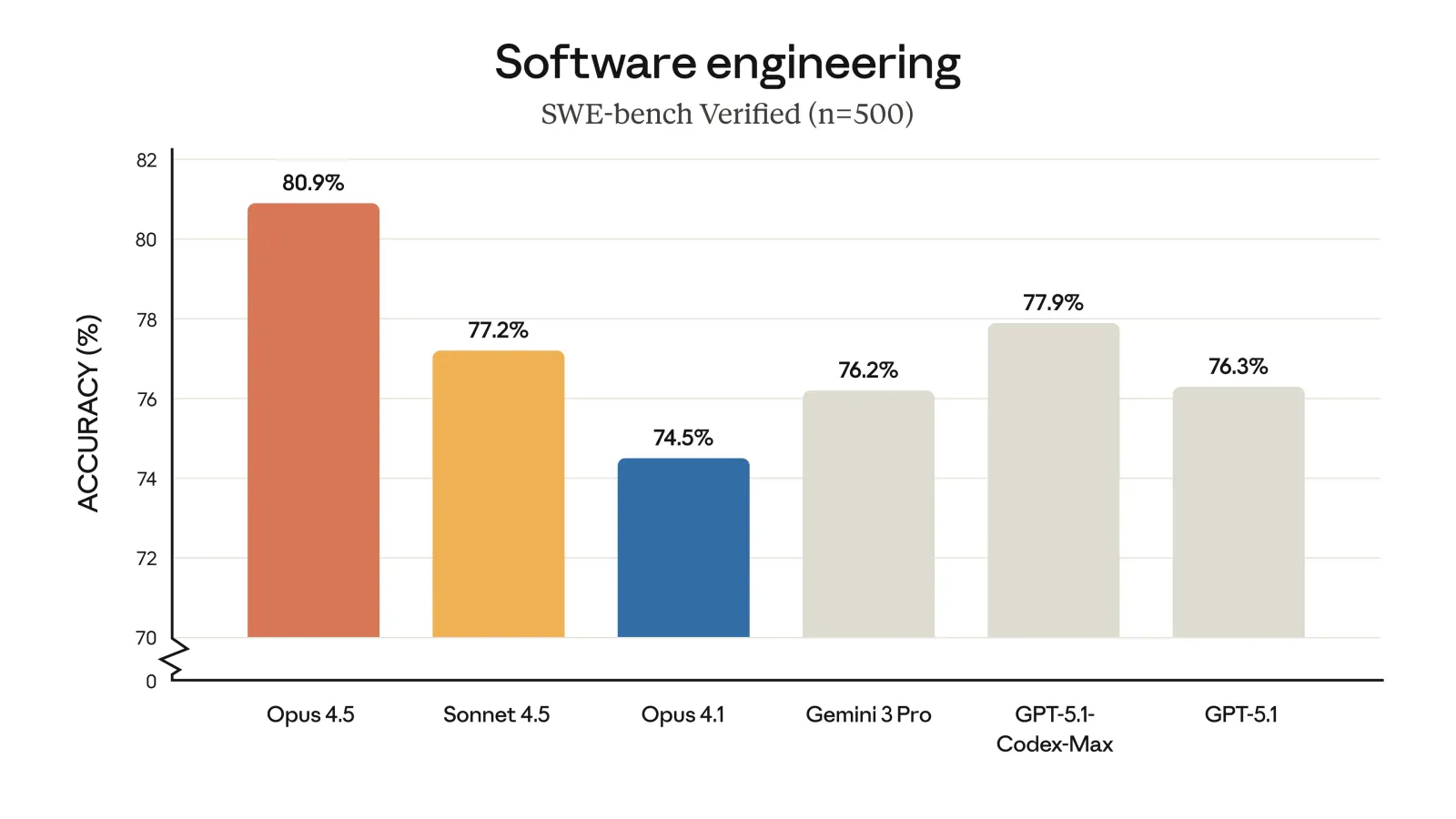

Claude Opus 4.5 achieves state-of-the-art performance on SWE-bench Verified, a key software engineering benchmark, and delivers substantial improvements in everyday tasks like research, spreadsheet work, and slide creation. Internal testing revealed the model scored higher than any human candidate on Anthropic's notoriously difficult performance engineering take-home exam within the prescribed two-hour limit.

What sets Opus 4.5 apart is its ability to handle ambiguity and reason about complex tradeoffs with minimal guidance. Early testers consistently reported that the model simply "gets it," successfully tackling tasks that were near-impossible for previous models. The model also demonstrates creative problem-solving: in one benchmark scenario, it found a workaround to help an airline customer by first upgrading their cabin class and then modifying their flights, a solution the test designers hadn't anticipated.

Anthropic is introducing a new "effort" parameter that lets developers control the tradeoff between speed and thoroughness. At medium effort, Opus 4.5 matches Sonnet 4.5's performance on SWE-bench Verified while using 76% fewer output tokens. At maximum effort, it surpasses Sonnet 4.5 by over 4 percentage points while still using 48% fewer output tokens.
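
To make those figures concrete, here is a rough back-of-the-envelope sketch in Python. It assumes the "$5/$25" pricing means $5 per million input tokens and $25 per million output tokens, and it treats the quoted 76% and 48% reductions as if they applied uniformly to any workload, which they may not. The function names and the example token counts are hypothetical and are not part of any Anthropic API.

```python
# Assumed reading of the "$5/$25 per million tokens" pricing: input / output.
INPUT_USD_PER_MTOK = 5.00
OUTPUT_USD_PER_MTOK = 25.00

# Output-token reductions relative to Sonnet 4.5, as quoted in the article.
# Applying them to an arbitrary workload is an assumption for illustration.
OUTPUT_REDUCTION = {"medium": 0.76, "max": 0.48}


def opus_output_tokens(sonnet_output_tokens: int, effort: str) -> int:
    """Estimate Opus 4.5 output tokens from a Sonnet 4.5 baseline."""
    return round(sonnet_output_tokens * (1 - OUTPUT_REDUCTION[effort]))


def request_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Cost of one request at the assumed per-million-token prices."""
    total = input_tokens * INPUT_USD_PER_MTOK + output_tokens * OUTPUT_USD_PER_MTOK
    return total / 1_000_000


if __name__ == "__main__":
    baseline = 100_000  # hypothetical Sonnet 4.5 output tokens for some task
    for effort in ("medium", "max"):
        out = opus_output_tokens(baseline, effort)
        cost = request_cost_usd(200_000, out)
        print(f"{effort}: ~{out} output tokens, ~${cost:.2f} per run")
```

Under these assumptions, a task that took Sonnet 4.5 100,000 output tokens would take roughly 24,000 at medium effort and 52,000 at maximum effort. Real savings depend on the task.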

The model also represents Anthropic's most robustly aligned release to date, with improved resistance to prompt injection attacks compared to competing frontier models. Opus 4.5 is available now via the Claude API, apps, and major cloud platforms, with expanded access to features like Claude for Chrome and Claude for Excel rolling out to Max, Team, and Enterprise users.
