The world is finally catching up to the fact that Apple and Columbia University researchers released an open-source multimodal large language model, Ferret, nearly two months ago. The code and weights were released under a non-commercial, research-only license. A defining feature of the model is that it is adept at understanding spatial references of any granularity within an image and at grounding open-vocabulary descriptions in that image.

In other words, Ferret can understand references to a wide variety of region types within an image, such as points, bounding boxes, and free-form shapes. It can also ground text descriptions in an image by predicting bounding boxes for the objects described. For instance, when asked whether there is a cat in an image, it can correctly answer that there is no cat but there is a dog, and draw a bounding box at the predicted coordinates of the dog. In a more complex example, Ferret describes the steps for making a sandwich while grounding each ingredient, the finished product, and the plate in a provided image.

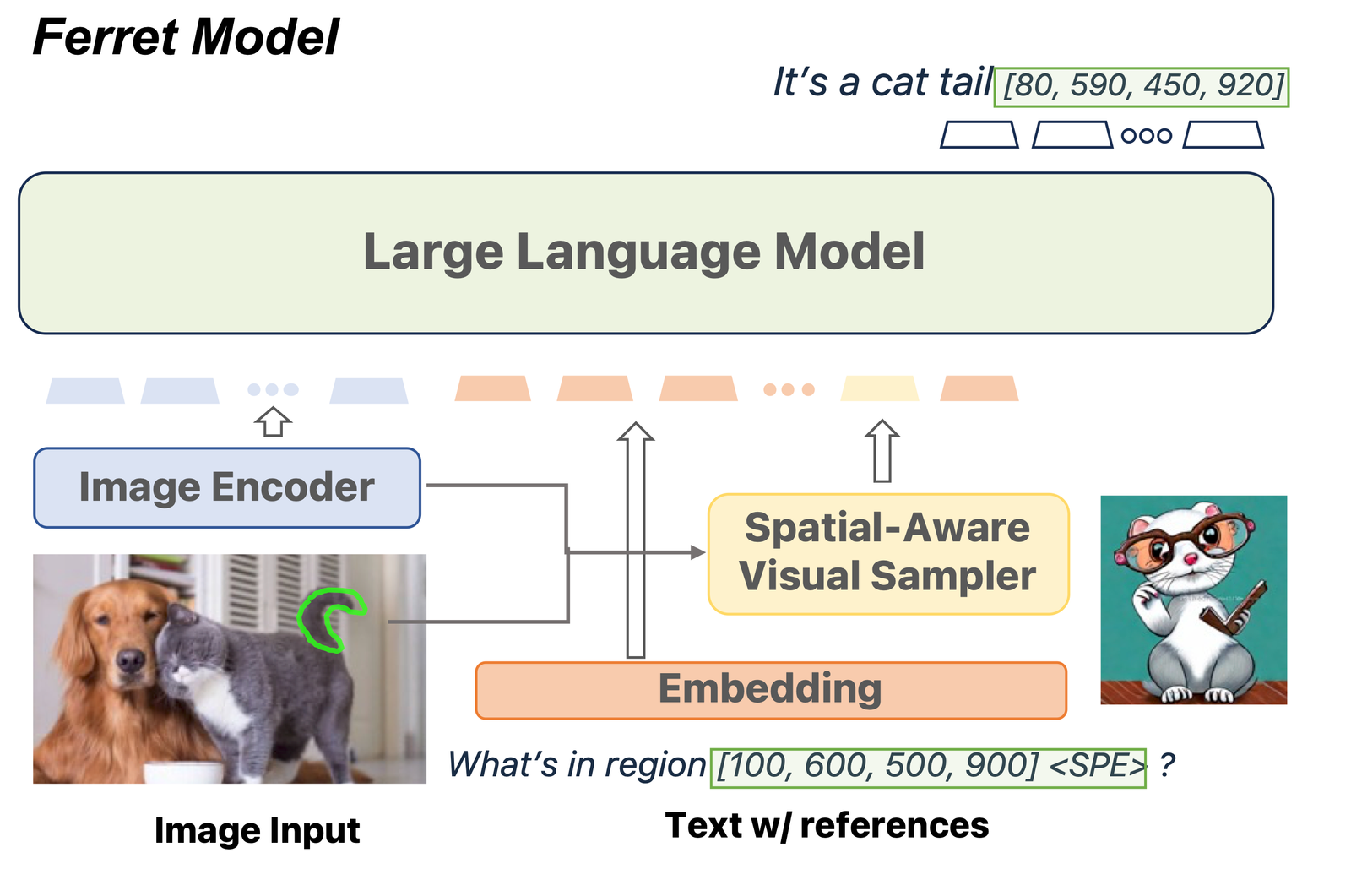
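To make the three region types concrete, here is a minimal sketch of how a point, a bounding box, and a free-form shape could all be reduced to one common form, a binary mask over the image grid. This is an illustration only: the function names (`point_mask`, `box_mask`, `polygon_mask`, `area`) are hypothetical, the free-form shape is approximated by a polygon, and none of this is taken from Ferret's code.

```python
from typing import List, Sequence, Tuple

Mask = List[List[bool]]  # mask[y][x] is True when the pixel belongs to the region


def point_mask(height: int, width: int, point: Tuple[int, int], radius: int = 1) -> Mask:
    """Mark every pixel within `radius` of the referred point (hypothetical helper)."""
    px, py = point
    return [[(x - px) ** 2 + (y - py) ** 2 <= radius ** 2 for x in range(width)]
            for y in range(height)]


def box_mask(height: int, width: int, box: Tuple[int, int, int, int]) -> Mask:
    """Mark pixels inside a box given as (x0, y0, x1, y1), with x1 and y1 exclusive."""
    x0, y0, x1, y1 = box
    return [[x0 <= x < x1 and y0 <= y < y1 for x in range(width)]
            for y in range(height)]


def polygon_mask(height: int, width: int, vertices: Sequence[Tuple[float, float]]) -> Mask:
    """Mark pixels whose centers fall inside a polygon (even-odd ray casting)."""
    def inside(cx: float, cy: float) -> bool:
        hit = False
        n = len(vertices)
        for i in range(n):
            (xa, ya), (xb, yb) = vertices[i], vertices[(i + 1) % n]
            # Only edges that straddle the horizontal line y = cy can be crossed.
            if (ya > cy) != (yb > cy):
                x_cross = xa + (cy - ya) * (xb - xa) / (yb - ya)
                if cx < x_cross:
                    hit = not hit
        return hit

    return [[inside(x + 0.5, y + 0.5) for x in range(width)] for y in range(height)]


def area(mask: Mask) -> int:
    """Number of pixels covered by the region."""
    return sum(sum(row) for row in mask)


if __name__ == "__main__":
    print(area(point_mask(5, 5, (2, 2))))                    # point with a small radius
    print(area(box_mask(4, 4, (1, 1, 3, 3))))                # 2 x 2 box
    print(area(polygon_mask(4, 4, [(0, 0), (4, 0), (0, 4)])))  # triangle
```

Treating every reference as a mask means downstream code does not need to care whether the user clicked a point, dragged a box, or scribbled an outline.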

Ferret consists of an image encoder that extracts image embeddings, a spatial-aware visual sampler that extracts features from continuous regions of an image, and an LLM (Vicuna) that brings everything together. The visual sampler sets Ferret apart from models that can only handle simpler region types (boxes, points) and is essential to its referring capabilities. The in-depth description of the model's architecture, training, and benchmarks can be found here as well as in the preprint. Ferret's strong performance relative to preexisting models suggests that its approach to multimodal interaction sets a new standard for MLLMs.
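As a rough intuition for what "extracting features from a region" means, the sketch below averages the image-encoder features that fall inside a region mask. This is a deliberately simplified stand-in, not Ferret's spatial-aware visual sampler, which the preprint describes in detail. The function name `masked_mean_pool` and the tiny feature grid are assumptions made for illustration.

```python
from typing import List, Sequence


def masked_mean_pool(features: Sequence[Sequence[Sequence[float]]],
                     mask: Sequence[Sequence[bool]]) -> List[float]:
    """Average the feature vectors of all grid cells selected by `mask`.

    features: H x W x C nested sequences (a toy image-encoder output).
    mask:     H x W booleans marking the referred region.
    """
    channels = len(features[0][0])
    total = [0.0] * channels
    count = 0
    for feature_row, mask_row in zip(features, mask):
        for vector, inside in zip(feature_row, mask_row):
            if inside:
                count += 1
                for c in range(channels):
                    total[c] += vector[c]
    if count == 0:
        raise ValueError("region mask is empty")
    return [t / count for t in total]


if __name__ == "__main__":
    # Toy 2 x 2 feature grid with 2 channels per cell.
    features = [
        [[1.0, 0.0], [3.0, 2.0]],
        [[10.0, 10.0], [10.0, 10.0]],
    ]
    # The region covers only the top row of the grid.
    mask = [
        [True, True],
        [False, False],
    ]
    print(masked_mean_pool(features, mask))  # [2.0, 1.0]
```

In a model of this kind, a region vector like this would be passed to the LLM alongside the text tokens and image embeddings. Plain averaging ignores the shape of the region, which is one reason a more careful sampler is needed for irregular, free-form areas.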
