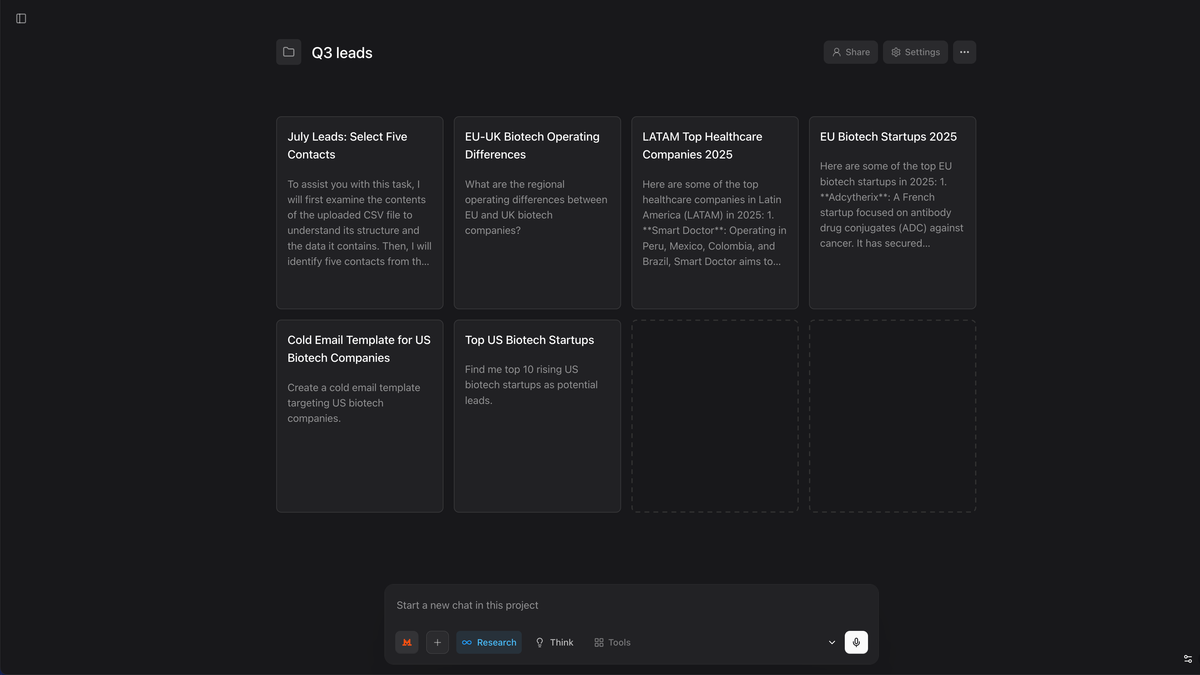

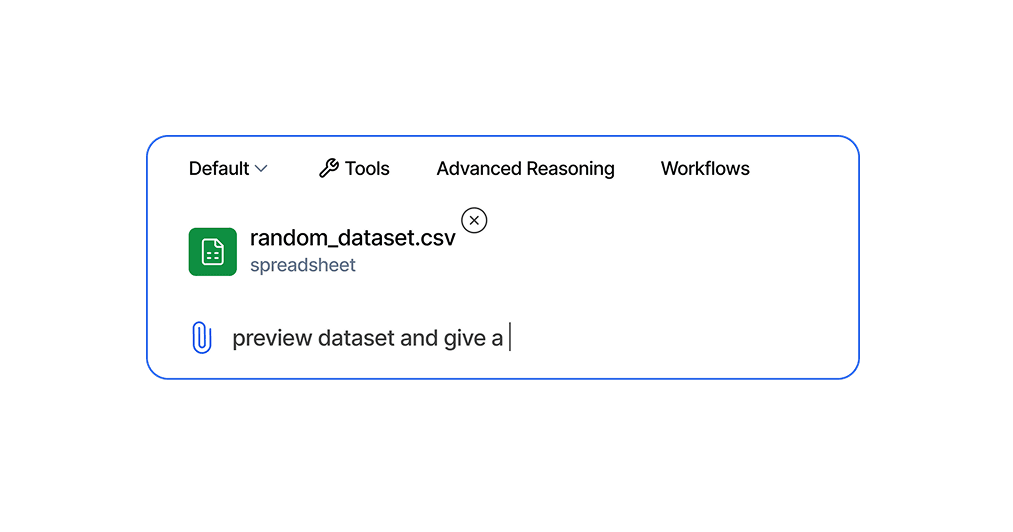

Julius AI raises $10M to create data visualizations from natural language prompts

Julius AI has raised $10 million in seed funding, led by Bessemer Venture Partners, to expand its natural language data analysis platform. With Julius AI, users can generate insights and visualizations simply by asking questions in plain English.