On Monday, Cohere for AI, Cohere's open research lab, published a blog post detailing the capabilities of Aya Vision, the latest release from the lab's Aya project, an initiative to ensure that every language is fairly represented in state-of-the-art AI. In the post, the Cohere for AI team notes that model performance remains uneven across languages, especially in multimodal tasks. The new Aya Vision models aim to close that gap by bringing multimodal capabilities to 23 languages spoken by more than half of the world's population.

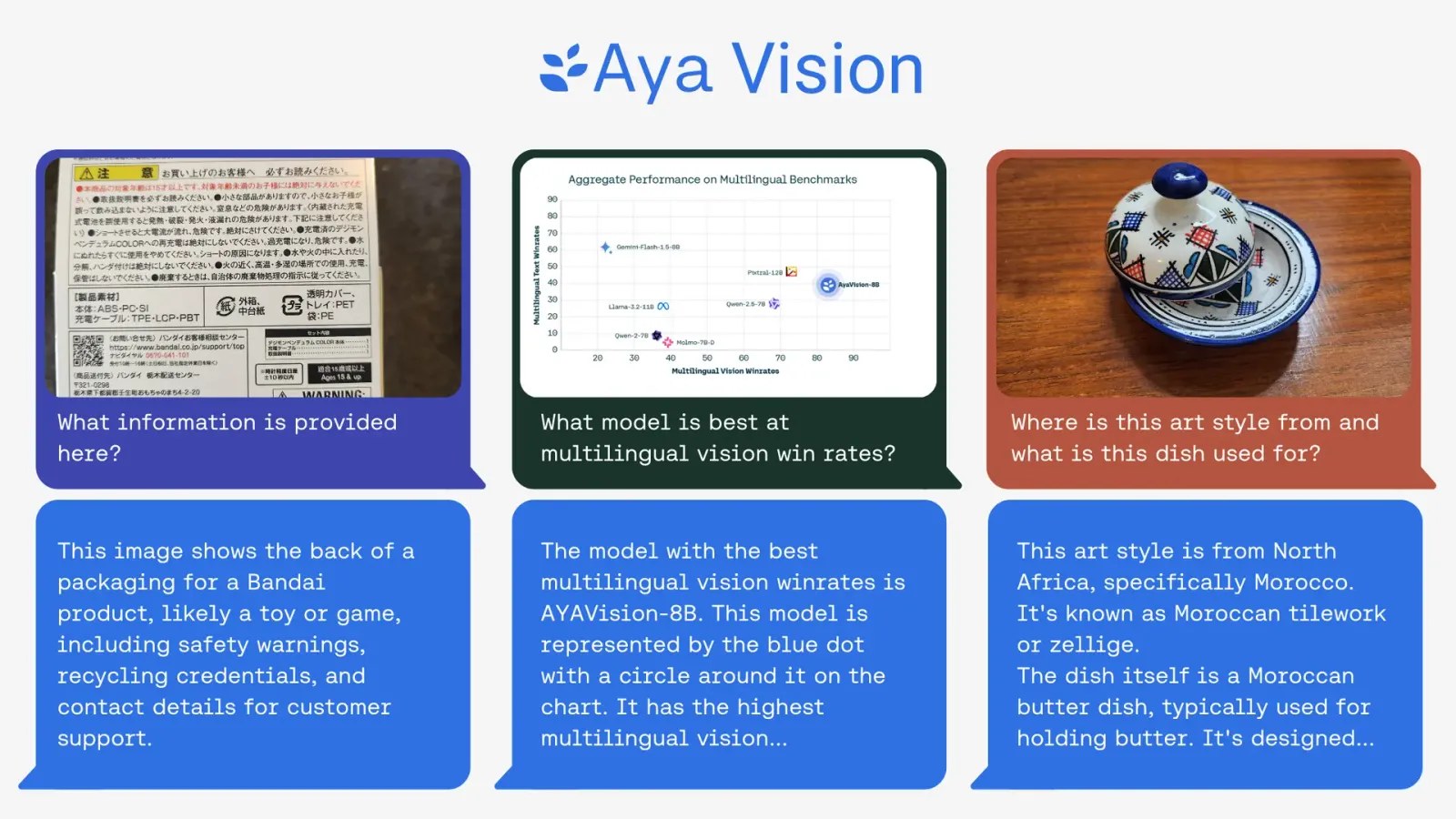

The Aya Vision models come in two sizes (8B and 32B parameters), and both can caption images, answer questions about images, generate text, and translate text and images into natural, straightforward language. Cohere reports that the Aya Vision models set a "new frontier in vision performance", outperforming several openly available models on the AyaVisionBench and m-WildVision benchmarks, including Qwen2.5-VL 7B, Gemini Flash 1.5 8B, Llama-3.2 11B, Llama-3.2 90B Vision, Molmo 72B and Qwen2-VL 72B.

Cohere developed both benchmarks in-house to expand the resources available for testing the multilingual performance of vision models on real-world tasks. In particular, Cohere said it would open-source AyaVisionBench, which consists of 135 image-question pairs covering real-world scenarios across 9 categories, including image captioning, visual question answering, OCR, transcription, visual reasoning, and converting screenshots into code. Because these image-question pairs are available in 23 languages, the benchmark enables thorough testing of a model's multilingual visual capabilities.

Users can try the Aya Vision models in the Cohere Playground and via WhatsApp, and can download them from Kaggle and Hugging Face.
