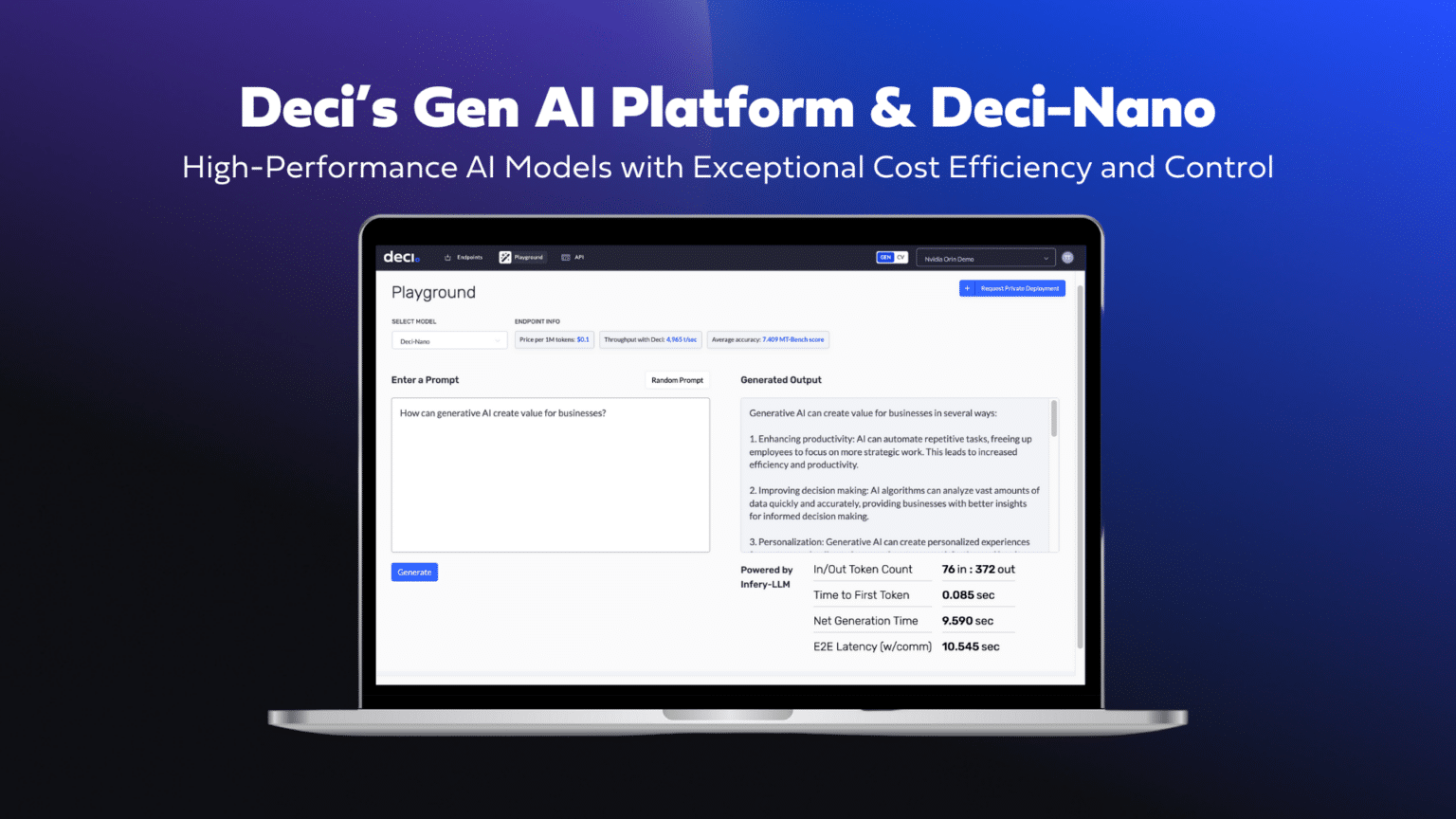

Deci's Gen AI Development Platform includes an inference engine, an AI cluster management solution, and a series of proprietary, fine-tunable LLMs, starting with Deci-Nano. This small model scores higher than Mistral-7b-instruct-v0.2 and Gemma-7b-it on MT-Bench and delivers higher throughput than both: benchmarked on NVIDIA A100 GPUs, Deci-Nano is 38% faster than Mistral-7b-instruct-v0.2 and 33% faster than Gemma-7b-it. Deci-Nano also offers a modest 8K-token context window and an affordable price of $0.10 per 1M tokens. Together, these characteristics make Deci-Nano well suited to real-time applications in production.
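To put the per-token price in perspective, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the function names and the example workload are hypothetical, the sketch assumes a single flat rate for all tokens (the article does not say whether input and output tokens are billed differently), and it reads "8K" as 8,192 tokens.

```python
# Rough cost estimate at the quoted Deci-Nano price.
# Assumptions (not from the article): one flat rate for every token,
# and "8K" interpreted as 8,192 tokens.

PRICE_PER_MILLION_TOKENS_USD = 0.10  # quoted price: $0.10 per 1M tokens
CONTEXT_WINDOW_TOKENS = 8_192        # assumed reading of "8K-token context window"


def estimate_cost_usd(total_tokens: int,
                      price_per_million: float = PRICE_PER_MILLION_TOKENS_USD) -> float:
    """Return the cost in USD of processing total_tokens at a flat per-token rate."""
    if total_tokens < 0:
        raise ValueError("total_tokens must be non-negative")
    return total_tokens / 1_000_000 * price_per_million


def daily_cost_usd(requests_per_day: int, tokens_per_request: int) -> float:
    """Return the daily cost for a hypothetical workload of uniform requests."""
    if tokens_per_request > CONTEXT_WINDOW_TOKENS:
        raise ValueError("a single request cannot exceed the context window")
    return estimate_cost_usd(requests_per_day * tokens_per_request)


if __name__ == "__main__":
    # Hypothetical workload: 50,000 requests per day, 1,500 tokens each.
    print(f"${daily_cost_usd(50_000, 1_500):.2f} per day")
```

Under these assumptions, a workload of 50,000 requests per day at 1,500 tokens each (75 million tokens) would cost about $7.50 per day.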

Deci-Nano is complemented by a range of deployment options on the Gen AI Development Platform, which also serves other supported models. Models can be deployed through Deci's API, in a private cloud, or on-premises. API deployments include serverless instances with per-token pricing and dedicated instances for easier fine-tuning and stronger privacy protection. Private cloud deployments can run as containers for greater control, or as a customized managed inference service deployed within Kubernetes clusters for a hands-off approach. Finally, on-premises deployments let users integrate containerized models into private data centers. The containers host the chosen model and the Infery SDK, giving users complete control over the deployment and the highest level of data privacy and security. Deci also guarantees that customers can migrate between these options as their business needs evolve, without affecting customized and fine-tuned models.

Every deployment option benefits from the higher throughput of the Deci models. In virtual cloud deployments, higher throughput means more work done per GPU hour, which lowers cost and lets organizations serve more users at once. On-premises deployments gain energy savings and a productivity boost, freeing GPUs for additional tasks. Deci's software-only approach also reduces environmental impact by lowering carbon emissions during the training and inference phases.
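The link between throughput and cost per GPU hour can be made concrete with a small calculation. The sketch below assumes that "X% faster" means X% more tokens per second on the same GPU at the same hourly price, which is one reading of the benchmark figures quoted earlier and not something the article states. The function name is hypothetical.

```python
# Relative cost per token when throughput rises at a fixed GPU-hour price.
# Assumption (not from the article): "38% faster" means 1.38x the tokens
# per second on identical hardware at an identical hourly price.


def relative_cost_per_token(speedup_percent: float) -> float:
    """Return cost per token as a fraction of the baseline model's cost.

    If a GPU hour costs the same but produces (1 + s) times as many tokens,
    each token costs 1 / (1 + s) of what it did before.
    """
    if speedup_percent <= -100:
        raise ValueError("speedup_percent must be greater than -100")
    return 1.0 / (1.0 + speedup_percent / 100.0)


if __name__ == "__main__":
    for baseline, speedup in [("Mistral-7b-instruct-v0.2", 38), ("Gemma-7b-it", 33)]:
        saving = (1.0 - relative_cost_per_token(speedup)) * 100
        print(f"vs {baseline}: roughly {saving:.1f}% lower cost per token")
```

Under that reading, a 38% throughput gain corresponds to a cost per token of about 1/1.38, or roughly 72% of the baseline, and a 33% gain to about 75% of the baseline. Real savings would also depend on batch size, sequence lengths, and GPU utilization.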
