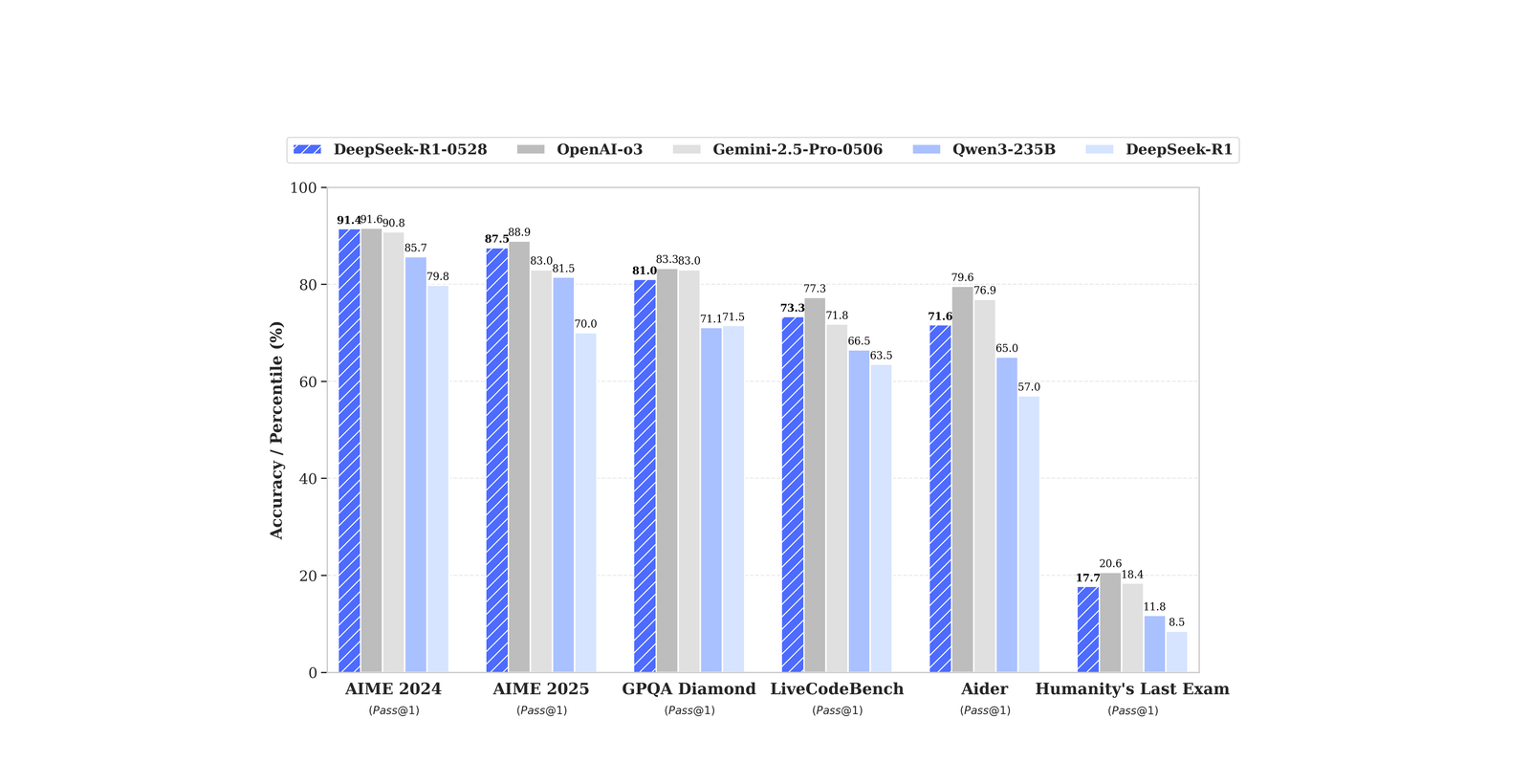

Chinese AI startup DeepSeek recently published a minor upgrade to its popular R1 reasoning model on its Hugging Face repository. DeepSeek also released DeepSeek-R1-0528-Qwen3-8B, a new distilled 8B-parameter variant of R1 based on Qwen3-8B Base, a small, dense model released barely a month earlier. DeepSeek claims that, with this update, the full-size R1 model approaches the performance of OpenAI's o3 and Gemini 2.5 Pro. Interestingly, DeepSeek also reports that its distilled model scores higher than Gemini 2.5 Flash on the AIME 24/25 (competition math) evaluations.

Updated R1: Minor Improvements with Major Requirements

The updated R1, now available on Hugging Face under an MIT license for commercial use, maintains the architecture of its predecessor, which means that it likely cannot run on consumer hardware without modification. Like the original R1 model, DeepSeek-R1-0528 requires at least 16 NVIDIA A100 80GB GPUs to run, among other substantial hardware requirements.
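To put the 16-GPU figure in perspective, here is a rough back-of-the-envelope sketch of weight memory versus pooled GPU memory. It assumes R1 has about 671 billion total parameters, which is the figure DeepSeek publishes for R1 but is not stated in this article, and it counts weights only. KV cache, activations, and framework overhead are ignored, so treat the numbers as a lower bound and not as an explanation of the exact requirement.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed for model weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def cluster_memory_gb(num_gpus: int, gb_per_gpu: float) -> float:
    """Total memory pooled across identical GPUs, in GB."""
    return num_gpus * gb_per_gpu


# Assumption: ~671 billion total parameters for R1 (not stated in the article).
R1_PARAMS = 671e9

pooled = cluster_memory_gb(16, 80)           # 16 x A100 80GB
fp8_weights = weight_memory_gb(R1_PARAMS, 1)   # 1 byte per parameter
bf16_weights = weight_memory_gb(R1_PARAMS, 2)  # 2 bytes per parameter

print(f"Pooled GPU memory: {pooled:.0f} GB")
print(f"FP8 weights:       {fp8_weights:.0f} GB")
print(f"BF16 weights:      {bf16_weights:.0f} GB")
```

Under these assumptions, 8-bit weights fit within the pooled memory with room left for runtime state, while 16-bit weights alone would already exceed it.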

According to DeepSeek, the most substantial change is that the updated R1 reasons more deeply and features enhanced inference capabilities. The company credits "increased computational resources and introducing algorithmic optimization mechanisms during post-training" for these improvements. DeepSeek also modified its usage recommendations for the model, stating that it now supports a system prompt and that `<think>` tags are no longer required to trigger the model's "thinking" process.

DeepSeek-R1-0528-Qwen3-8B: Efficiency Meets Performance

The more significant development is DeepSeek-R1-0528-Qwen3-8B, a distilled version built on Alibaba's Qwen3-8B foundation model. Despite being dramatically smaller, this variant demonstrates impressive performance:

- Improves on the base Qwen3-8B model by about 10% on the AIME benchmark

- Nearly matches Microsoft's Phi 4 reasoning model on the HMMT math benchmark

- Approaches the performance of Qwen3-235B-A22B across the tested math benchmarks

As with Qwen3-8B, the recommended configuration for this model is at least a single GPU with 40-80GB of VRAM (such as an NVIDIA H100). DeepSeek says this makes the distilled model attractive for academic research and for focused development of compact reasoning models.

Accessibility and Availability

Both models are available under the MIT license for unrestricted commercial use. The distilled model is already hosted by several platforms and offered through API access. The upgraded R1 can be accessed in DeepSeek's chat interface and through an OpenAI-compatible API on DeepSeek's developer platform.
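For readers who want to try the API, the following is a minimal sketch of an OpenAI-style chat completion request that includes a system prompt, which the updated R1 now supports. The endpoint URL and the model name are assumptions taken from memory of DeepSeek's public documentation, not from this article, so check the developer platform before relying on them. The helper names `build_chat_request` and `send` are purely illustrative.

```python
import json
import urllib.request

# Assumptions (not stated in the article): endpoint URL and model identifier.
API_URL = "https://api.deepseek.com/chat/completions"
MODEL = "deepseek-reasoner"


def build_chat_request(api_key: str, system_prompt: str, user_prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL,
        "messages": [
            # A system message sets overall behavior for the conversation.
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Calling `send(build_chat_request(key, "You are a careful math tutor.", "What is 17 * 23?"))` would perform the network call. Building the request separately makes it easy to inspect the payload first.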
