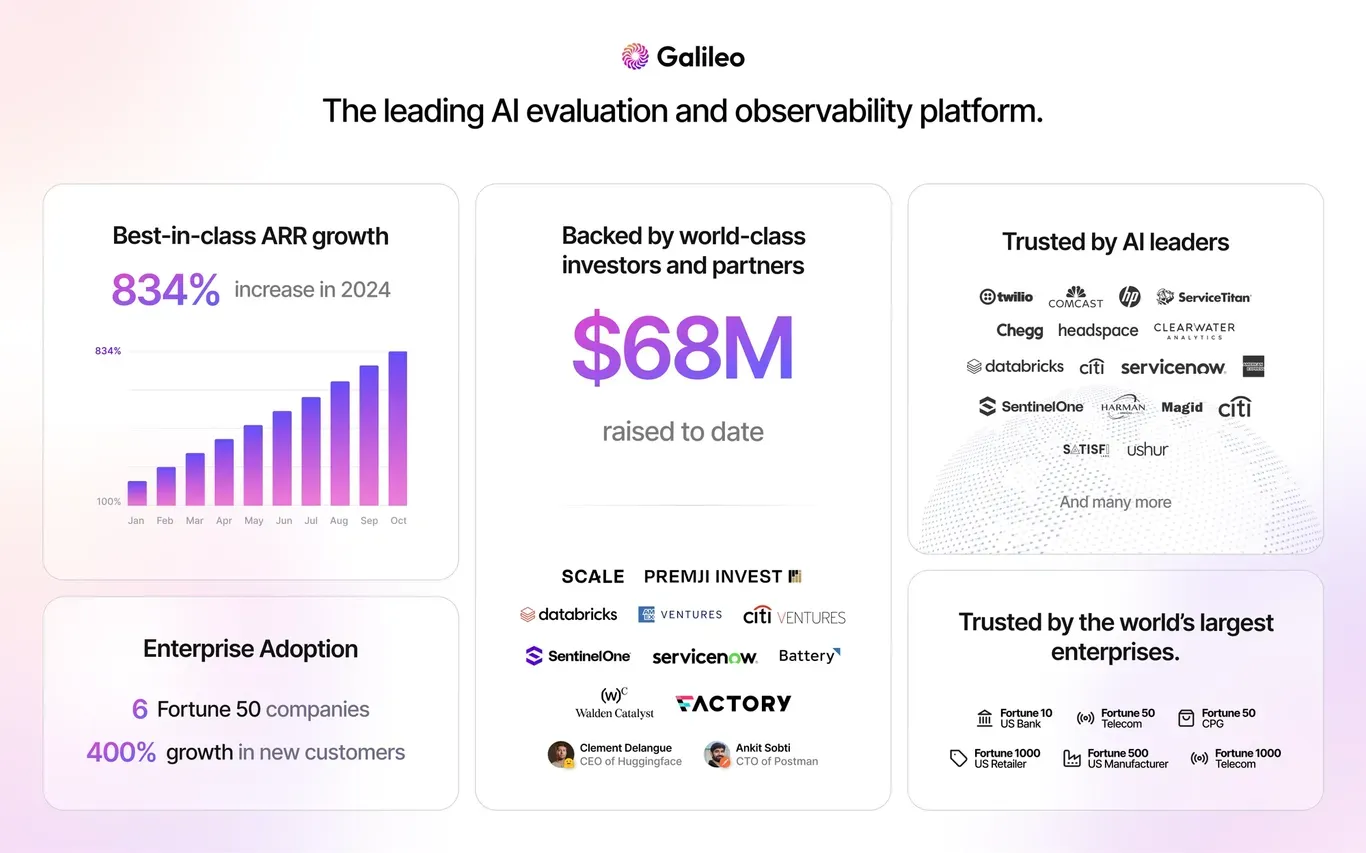

Galileo, a leading AI observability platform and the maintainer of the LLM Hallucination Index, recently announced that it has raised $45 million in a Series B funding round led by Scale Venture Partners, with participation from Databricks Ventures, Premji Invest, Amex Ventures, Citi Ventures, ServiceNow, and SentinelOne. Galileo also said that Clement Delangue (CEO, Hugging Face) and Ankit Sobti (CTO, Postman) are joining as investors, and acknowledged continued support from existing investors, including Battery Ventures, Walden Capital, and Factory.

The funding will fuel development of Galileo's Evaluation Intelligence Platform, which already provides customers such as Twilio, Comcast, HP, and ServiceTitan with a consistent, reliable measurement system for building accurate and trustworthy AI applications. The Series B brings Galileo's total funding to $68 million. The round also comes amid rapid growth at the company: an 834% increase in revenue and a fourfold increase in enterprise customers, including six Fortune 50 companies.

Since its founding in 2021, Galileo has been working to solve the measurement problem in AI, which it breaks down into three challenges: a lack of tools, metrics, or frameworks for measuring AI accuracy; the uniqueness of each use case, which calls for tailored measurements; and the difficulty of reliably scaling existing measurement techniques.

To address these challenges, Galileo has built its Evaluation Intelligence Platform on three pillars. First, it is designed to provide end-to-end support across the AI development workflow: Galileo's Evaluate, Observe, and Protect products are built on the company's research-backed metrics, yielding a consistent and reliable measurement system. Second, the Luna Evaluation Suite provides high-performance evaluation models that work out of the box without requiring ground-truth data and support the creation of high-quality test cases over time.

Finally, the evaluation metrics in the Luna Evaluation Suite are designed to be adaptable and scalable. They fine-tune themselves to the requirements of each use case, and the Galileo Wizard inference engine is designed to deliver the best possible performance regardless of query volume. Over time, the platform also delivers tailored insights that let each organization optimize its evaluation methods for its own requirements.

Alongside news of its Series B, Galileo has announced its upcoming GenAI Productionize 2.0 conference on October 29, where Galileo and AI leaders from Writer, Cohere, NVIDIA, Twilio, Databricks, Unstructured.io, CrewAI, and other organizations will discuss Evaluation Intelligence in depth.
