Amid the recent excitement surrounding AI-powered agents, LangChain has announced a series of experiments to determine which agentic architectures best suit which use cases. Across the series, LangChain will compare the performance of a single agent with access to multiple tools against that of a multi-agent framework in which each node has a more narrowly defined application domain. The research is divided into several stages, the first of which studies how the performance of single agents changes as the available context and number of tools increase.

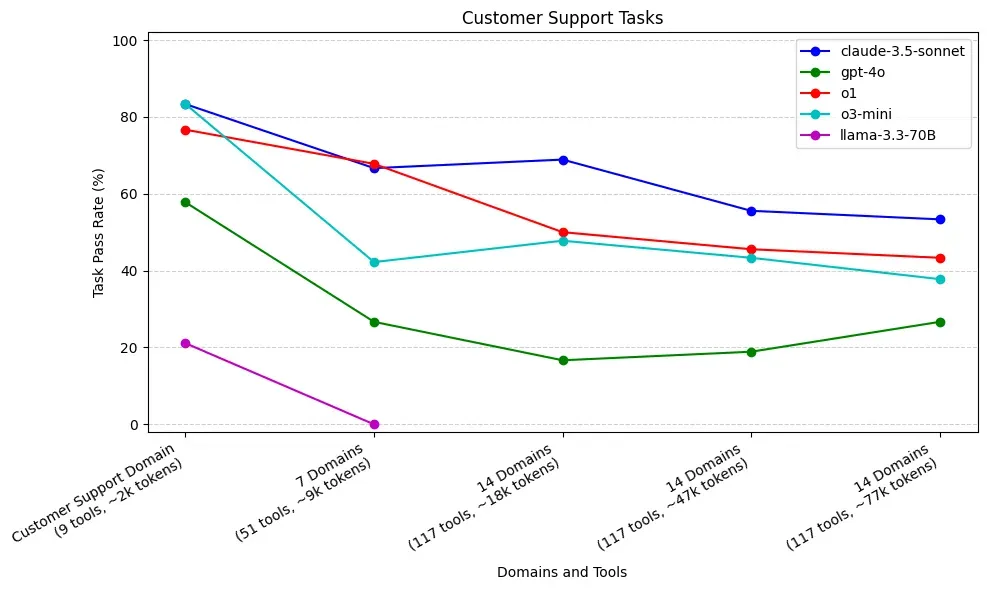

The initial experiment uses ReAct, a simple agentic framework that is also the basis for LangChain's internal assistant, which has two specific action domains: calendar scheduling and customer support. In the experiment, these domains were handled by independent agents to test the performance of Claude 3.5 Sonnet, GPT-4o, o1, o3-mini, and Llama-3.3-70B. Each domain was tested on 30 tasks, with three runs per task, for 90 runs per round. More tools and context were progressively added after each 90-run round to increase complexity.
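The setup described above (30 tasks, three runs each, with the tool set growing between rounds) can be pictured as a small evaluation loop. The following is only a minimal sketch under stated assumptions, not LangChain's actual harness: `Task`, `run_round`, and `sweep` are hypothetical names, the agent is modeled as a plain callable, and a run is counted as passed only when the tools called exactly match the expected trajectory, which may differ from the scoring used in the experiment.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Task:
    """Hypothetical task record: a prompt plus the tool calls we expect."""
    prompt: str
    expected_tools: tuple


# Assumption: an agent is any callable that receives a prompt and the names
# of the tools it may use, and returns the names of the tools it called.
Agent = Callable[[str, Sequence[str]], Sequence[str]]


def run_round(agent: Agent, tasks: Sequence[Task], tools: Sequence[str],
              runs_per_task: int = 3) -> tuple:
    """Run every task `runs_per_task` times; return (passed, total)."""
    passed = 0
    for task in tasks:
        for _ in range(runs_per_task):
            called = tuple(agent(task.prompt, tools))
            # Assumed scoring rule: exact match with the expected trajectory.
            if called == task.expected_tools:
                passed += 1
    return passed, len(tasks) * runs_per_task


def sweep(agent: Agent, tasks: Sequence[Task],
          tool_batches: Sequence[Sequence[str]]) -> list:
    """Re-run the same tasks while the available tool set keeps growing."""
    results = []
    tools: list = []
    for batch in tool_batches:
        tools = tools + list(batch)  # add one more batch of tools
        passed, total = run_round(agent, tasks, tools)
        results.append((len(tools), passed, total))
    return results
```

With 30 tasks and the default of three runs per task, each call to `run_round` yields a total of 90, matching the round size reported in the article.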

The findings revealed significant limitations in single-agent architectures as their responsibilities expand. Increasing the context and providing access to additional tools consistently degraded agent performance across all tested models. The degradation was particularly pronounced in the customer support domain, where expected trajectories are longer (2.7 tool calls on average) than in the scheduling domain (1.4 tool calls on average).

Among the tested models, Claude 3.5 Sonnet, o1, and o3-mini demonstrated comparable performance, and all three performed noticeably better than GPT-4o and Llama-3.3-70B. Notably, o3-mini matched o1 and Claude 3.5 Sonnet with smaller contexts, but its performance declined more steeply as context grew. GPT-4o and Llama-3.3-70B fared especially poorly on the calendar scheduling experiment: GPT-4o's score dropped dramatically, from 39/90 to a mere 2/90, as the number of domains increased from one to seven (out of 14), and Llama-3.3-70B failed to call the correct tool even when working in a single domain.
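To put the GPT-4o scheduling figures in perspective, the reported counts can be converted to pass rates. The short calculation below is only illustrative arithmetic on the two numbers quoted in the article (39/90 and 2/90); `pass_rate` is a hypothetical helper name.

```python
def pass_rate(passed: int, total: int) -> float:
    """Fraction of runs that passed."""
    return passed / total


one_domain = pass_rate(39, 90)     # GPT-4o, one domain
seven_domains = pass_rate(2, 90)   # GPT-4o, seven domains

# Share of the original pass rate that was lost.
relative_drop = 1 - seven_domains / one_domain

print(f"{one_domain:.1%}")     # 43.3%
print(f"{seven_domains:.1%}")  # 2.2%
print(f"{relative_drop:.1%}")  # 94.9%
```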

The research has important implications for developing AI systems, suggesting that single agents may not be the optimal solution for multi-domain workflows. To continue this series of experiments, LangChain plans to explore several research avenues. First, it will study multi-agent architectures, where domains are more clearly delimited, to see whether distributing responsibilities across multiple specialized agents fares better at accomplishing single-domain tasks in a multi-domain workflow. Next, it will introduce tasks with longer trajectories and more complex tool calls to further stress-test single-agent and multi-agent architectures. Finally, it will examine how multi-agent architectures perform on cross-domain tasks that require instructions and tools from multiple domains.
