Meta AI, the assistant developed by Meta and available across the company's platforms (Facebook, Instagram, Messenger, and WhatsApp), is getting a new feature that lets users talk to the assistant out loud. The feature resembles the recently launched Gemini Live. It was announced just a day after OpenAI confirmed the general rollout of its Advanced Voice Mode to Plus and Team users. Users speak their questions, which Meta AI transcribes and processes before delivering its response in a synthetic voice.

Meta reportedly invested a hefty sum to let users choose among a range of voices. These include the voices of celebrities Awkwafina, Dame Judi Dench, John Cena, Keegan-Michael Key, and Kristen Bell, with whom the company says it has struck deals to use their likenesses to power the new Meta AI feature. The announcement aligns with CEO Mark Zuckerberg's goal of making Meta AI the most widely used AI assistant by the end of this year.

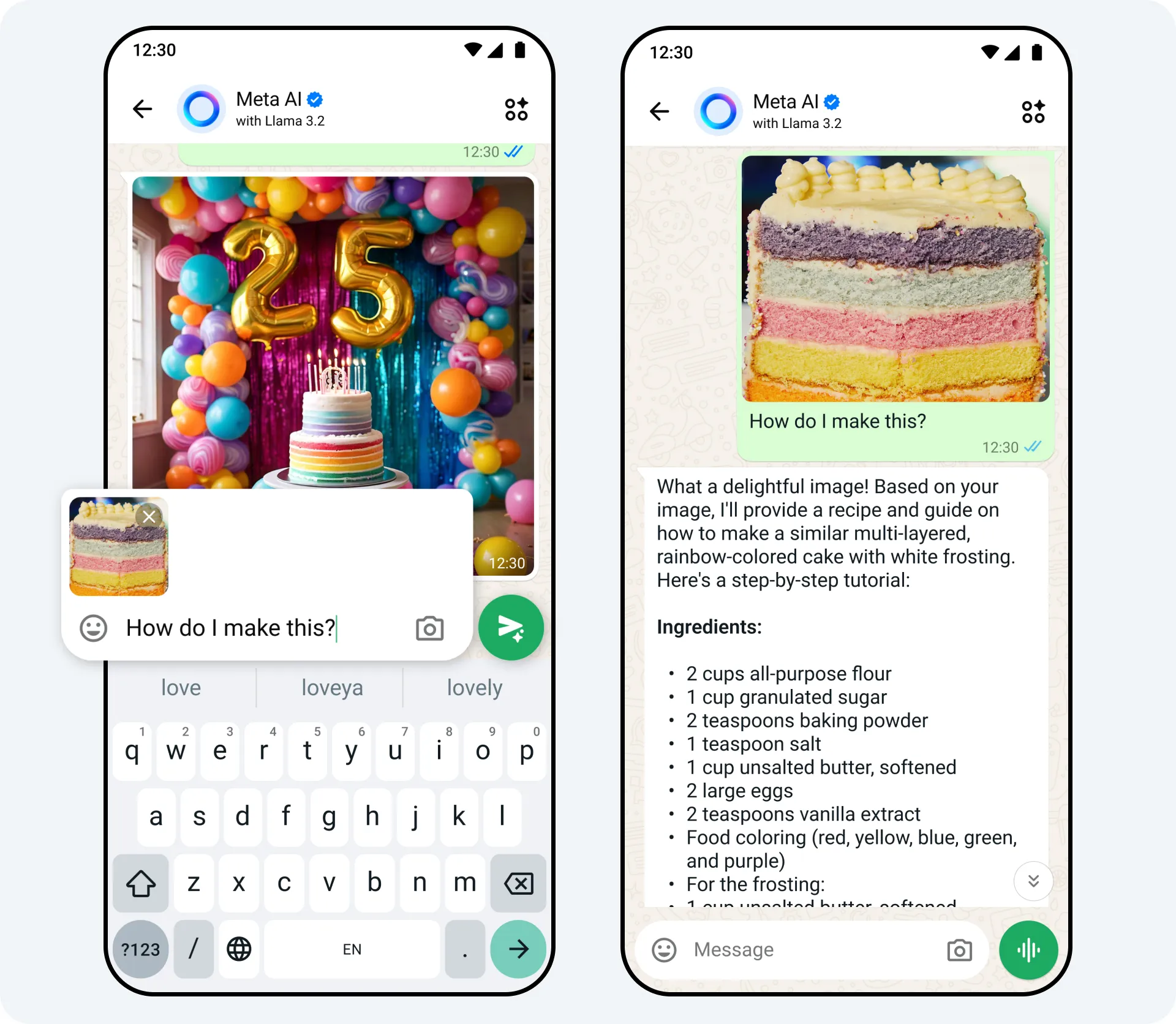

Thanks to the new multimodal Llama 3.2 models, Meta AI can now answer questions about user-provided images and edit them. This catches it up with the closed-source AI assistants that already accept images as input. The feature is reminiscent of the recently announced expanded capabilities of Snapchat's My AI, which is powered by Gemini. Coincidentally, both features are marketed as ideal for identifying plants.

Users can also upload images and ask Meta AI to edit them by telling it what to remove, replace, or add. Whether the request is an outfit change or the removal of unwanted objects from the background, users need only describe it in a text prompt accompanying the image, and Meta AI will carry it out. Some image-related AI features are also available directly on Instagram and Facebook. For instance, when a user reshares a picture as an Instagram Story, a new background-generation feature can analyze the picture's contents and suggest a matching custom background.

Meta AI on Instagram is also getting a live translation feature for Reels that generates dubbed audio and automatically lip-syncs it to the video. The feature is currently experimental and is limited to Reels from select Spanish- and English-speaking creators in the US and Latin America. Meta plans to expand it to more languages and creators.

Instagram, Facebook, and Messenger are getting expanded 'Imagine me' capabilities. Users can now access the feature directly from their feed, Stories, and Facebook profile picture. They can easily share the resulting AI-generated images and have Meta AI suggest captions for them in Facebook and Instagram Stories. Meta AI can also help users create custom chat themes for Messenger and Instagram DMs.

Perhaps the most controversial of the upcoming AI features is one currently in testing. In it, Meta AI automatically generates content based on users' interests and current trends and displays it in their Facebook and Instagram feeds. The company appears to want users to engage with the generated content so that they are motivated to create more of their own. According to Meta, tapping a generated post lets users modify it, while swiping takes them to the 'Imagine' feature.
