Meta's Fundamental AI Research (FAIR) team has unveiled some of its early research work by releasing a series of AI models and datasets. By doing this, Meta hopes to reinforce its commitment to open science and collaborative innovation. These research artifacts span various domains, including image-to-text and text-to-music generation models, a multi-token prediction model, and tools for responsible AI development. Here's a quick rundown of the released artifacts:

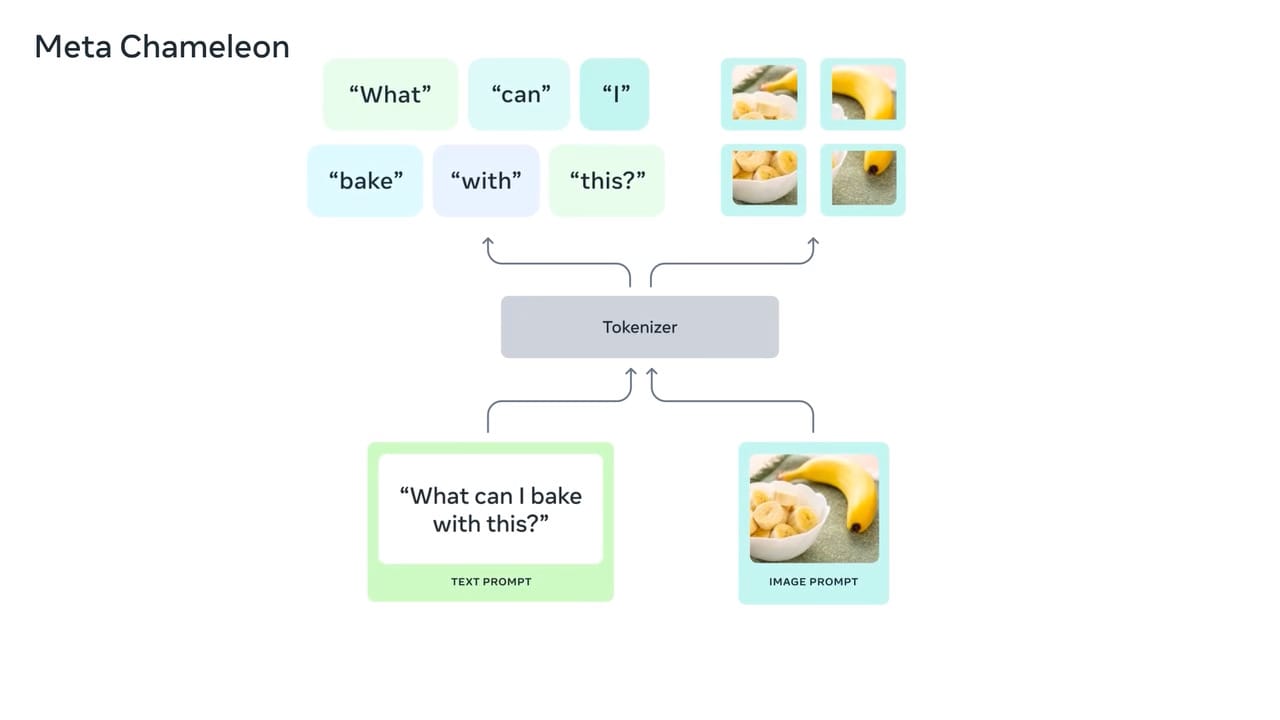

- Meta Chameleon: A versatile model that accepts any combination of text and images as input and can generate any combination of text and images as output. Meta Chameleon features a single unified architecture for both encoding and decoding. Meta shared some components of the 7B and 34B models under a research-only license. In particular, Meta is not sharing the image generator for now; the research-only release supports multimodal inputs, but its outputs are text-only.

- Multi-token prediction models: Most LLMs are trained to predict text one word at a time, an approach that requires massive amounts of training text. Meta has been researching multi-token prediction to improve the efficiency and scalability of LLMs. With this approach, a model is trained to predict several upcoming words at once, which aims to improve training efficiency, model capabilities, and speed. Meta released pre-trained code completion models under a research-only license.

- Meta Joint Audio and Symbolic Conditioning for Temporally Controlled Text-to-Music Generation (JASCO): An advanced text-to-music generation model that accepts conditioning inputs (such as chords or beats) to improve control over the model's outputs. JASCO is currently available only as a research paper; Meta plans to release the inference code and models soon.

- AudioSeal: A pioneering audio watermarking technique for detecting AI-generated speech. AudioSeal uses a localized detection approach, which Meta says makes detecting AI-generated segments as much as 485 times faster than previous methods. The model and training code for AudioSeal are available under a commercial license.

- PRISM dataset: A diverse collection of the stated preferences and feedback of 1,500 participants from 75 countries on 8,011 live conversations with 21 LLMs. Meta advised on PRISM's compilation, and the dataset is now available from Meta's external partners.

- Geographic diversity research: Tools and methods to improve geographic representation and mitigate related bias in text-to-image models.
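
To make the multi-token prediction item above more concrete, here is a minimal sketch of how the training targets differ from ordinary next-token prediction. It is an illustration only, not Meta's implementation: the function names, the `n_future` parameter, and the toy token list are all hypothetical, and a real model works on tokenizer IDs and neural network outputs rather than Python lists.

```python
def next_token_targets(tokens):
    """Standard objective: each context is paired with the single token that follows it."""
    return [(tokens[: i + 1], [tokens[i + 1]]) for i in range(len(tokens) - 1)]


def multi_token_targets(tokens, n_future):
    """Multi-token objective (illustrative): each context is paired with the
    next n_future tokens, all of which are predicted in one step."""
    pairs = []
    for i in range(len(tokens) - n_future):
        context = tokens[: i + 1]
        targets = tokens[i + 1 : i + 1 + n_future]
        pairs.append((context, targets))
    return pairs


# Toy, hand-tokenized code snippet (hypothetical example data).
tokens = ["def", "add", "(", "a", ",", "b", ")", ":"]

single = next_token_targets(tokens)
multi = multi_token_targets(tokens, n_future=4)

# Each training position now carries four prediction targets instead of one.
print(single[0])
print(multi[0])
```

In this sketch, the first context `["def"]` is paired with one target under the standard objective and with four targets under the multi-token objective, which is the sense in which each training position supplies more learning signal.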

These releases aim to push the boundaries of AI research while addressing crucial aspects of responsible development. By sharing its work openly, Meta FAIR hopes to inspire further innovations and foster important discussions within the AI community.
