Meta recently announced the release of its models pre-trained for code completion using its multi-token prediction approach. The models are covered by Meta's non-commercial research license and are now available under gated access at the AI at Meta Hugging Face repository. Meta first announced the upcoming availability of these models about two weeks ago, when the company published an update on several of its research artifacts, including Meta Chameleon (released as a limited version that outputs text only), a text-to-music generation model that accepts conditioning inputs, a digital watermarking technique, and the PRISM dataset.

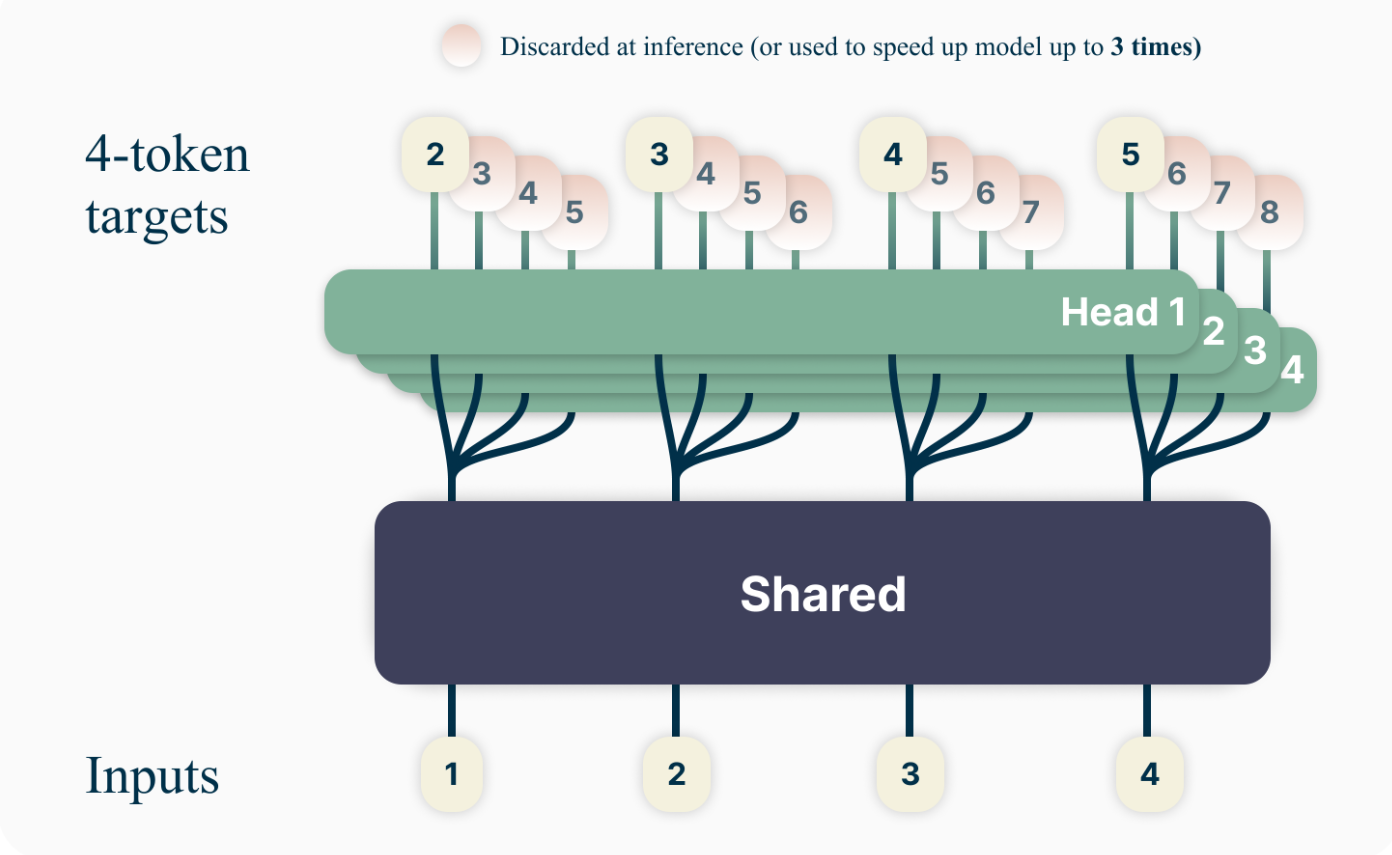

As the name suggests, multi-token prediction teaches a model to predict the next n tokens at once. The standard next-token prediction approach, by contrast, has the model maximize the probability of the single next token in a sequence, given the history of the previous tokens. Although multi-token prediction is not new, the research team behind Meta's models developed a novel approach that adds no training time or memory overhead. The approach is shown to be beneficial at scale, with models solving more problems on coding benchmarks, and is demonstrated to enable inference up to 3 times faster than the baseline.
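To make the difference between the two training objectives concrete, here is a minimal sketch in plain Python. It is not Meta's implementation: the function names `multi_token_targets` and `multi_token_loss` are hypothetical, and the sketch assumes one output head per future offset, with the training loss being the sum of the per-head cross-entropy terms. Setting `n = 1` recovers the standard next-token setup.

```python
import math


def multi_token_targets(tokens, n):
    """For each position t, return the n tokens that follow it.

    With n == 1 this is the usual next-token target; with n > 1 each
    position is asked to predict a short window of future tokens.
    Positions too close to the end to have n followers are skipped.
    """
    return [tokens[t + 1 : t + 1 + n] for t in range(len(tokens) - n)]


def multi_token_loss(head_probs, targets):
    """Sum of cross-entropy terms over positions and over the n heads.

    head_probs[t][k] is a dict mapping token -> probability, as produced
    by (hypothetical) head k at position t. Head k is scored against the
    token k + 1 steps ahead of position t.
    """
    loss = 0.0
    for probs_at_t, future in zip(head_probs, targets):
        for head_k, token in zip(probs_at_t, future):
            loss += -math.log(head_k[token])
    return loss


# Toy sequence of token ids, predicting 2 tokens ahead.
tokens = [1, 2, 3, 4, 5]
targets = multi_token_targets(tokens, n=2)  # [[2, 3], [3, 4], [4, 5]]

# Pretend every head assigns probability 0.5 to the correct token.
head_probs = [[{tok: 0.5} for tok in future] for future in targets]
loss = multi_token_loss(head_probs, targets)
```

In this toy setup every position contributes n loss terms instead of one, which is the sense in which the model is trained to look several tokens ahead. How the extra heads share computation with the rest of the network, and how they are used to speed up inference, is specific to Meta's method and is not modeled here.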

The model release includes two pairs of 7 billion-parameter models, each pair consisting of a baseline model and a multi-token prediction model. The first pair was trained on 200 billion tokens of code, while the other pair was trained on 1 trillion tokens.
