In a recent blog post, Mistral AI announced that it has updated Codestral, its code generation model, with a new architecture and an improved tokenizer that make code generation and completion twice as fast as its predecessor. Codestral, originally launched a little over seven months ago, is proficient in English and in over 80 popular and lesser-known programming languages, and supports fill-in-the-middle (FIM), code correction and test generation tasks. It is also the first model to be released under Mistral AI's Non-Production License, which permits use of the model strictly for personal testing, research, experimentation and evaluation.
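To make the fill-in-the-middle idea concrete, here is a minimal sketch of how an editor might frame a FIM task: the code before the cursor becomes a prefix, the code after it becomes a suffix, and the model is asked to produce only the missing middle. The names `FimRequest`, `split_at_cursor` and `splice` are hypothetical and used purely for illustration; they are not part of any Mistral AI SDK, and the string `"a + b"` stands in for a model's output rather than a real completion.

```python
from dataclasses import dataclass


@dataclass
class FimRequest:
    """Hypothetical container for a fill-in-the-middle task (illustration only)."""
    prefix: str  # code that appears before the cursor
    suffix: str  # code that appears after the cursor


def split_at_cursor(source: str, cursor: int) -> FimRequest:
    """Split the source text into the prefix and suffix around the cursor offset."""
    if not 0 <= cursor <= len(source):
        raise ValueError("cursor is outside the source text")
    return FimRequest(prefix=source[:cursor], suffix=source[cursor:])


def splice(request: FimRequest, middle: str) -> str:
    """Insert the generated middle between the prefix and the suffix."""
    return request.prefix + middle + request.suffix


# A function body with a gap right after "return ".
source = "def add(a, b):\n    return \n\nprint(add(2, 3))\n"
cursor = source.index("return ") + len("return ")

request = split_at_cursor(source, cursor)

# Assumption: a FIM-capable model would be given request.prefix and
# request.suffix and would return the missing middle. No model is called here.
completed = splice(request, "a + b")
print(completed)
```

Because the model sees the suffix as well as the prefix, it can take into account code that comes after the cursor, which ordinary left-to-right completion cannot do.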

The updated Codestral 25.01 supports a best-in-class 256K-token context length, which is twice as large as that of the next best model (DeepSeek Coder V2 Lite, with 128K). The model was put to the test in a benchmark comparison with other leading code generation models smaller than 200 billion parameters. Codestral 25.01 displays strong performance across a variety of coding benchmarks, including HumanEval (in multiple languages), LiveCodeBench, Spider, and CanItEdit. Codestral 25.01 also leads the FIM benchmarks, outperforming rival models from DeepSeek and OpenAI.

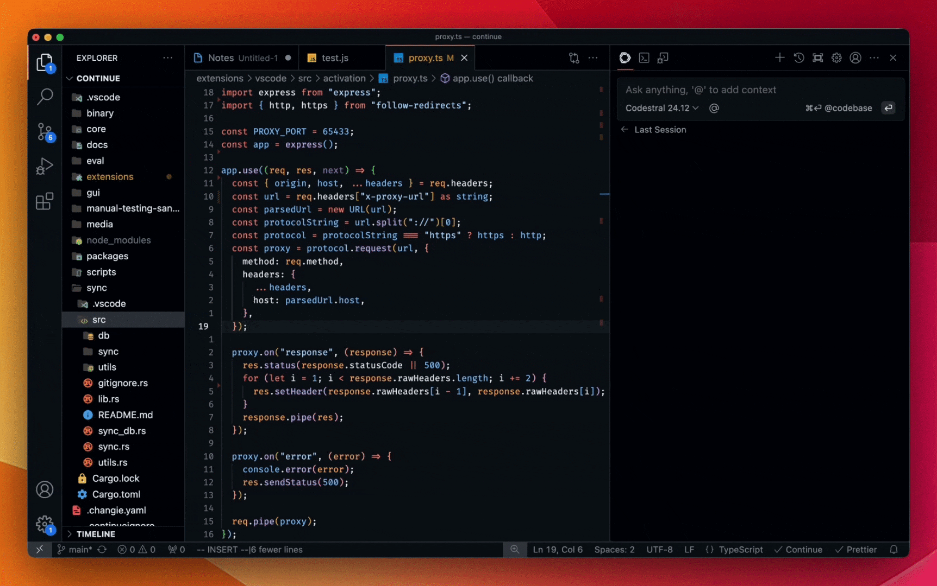

Developers will soon have access to Codestral 25.01 through Mistral AI's IDE and plugin partners, starting with Continue.dev for VS Code or JetBrains. Codestral 25.01 is also available for local deployments, through the Codestral API, on Google Cloud’s Vertex AI, and, in private preview only, on Azure AI Foundry. It is expected to become available on Amazon Bedrock soon.
