Last week, the China-based research lab Moonshot AI released Kimi K2.5, a powerful open-source multimodal model trained on 15 trillion mixed visual and text tokens. Alongside it came Kimi Code, an open-source coding tool that competes with similar products from closed-source providers, such as Anthropic's Claude Code and Google's Gemini CLI.

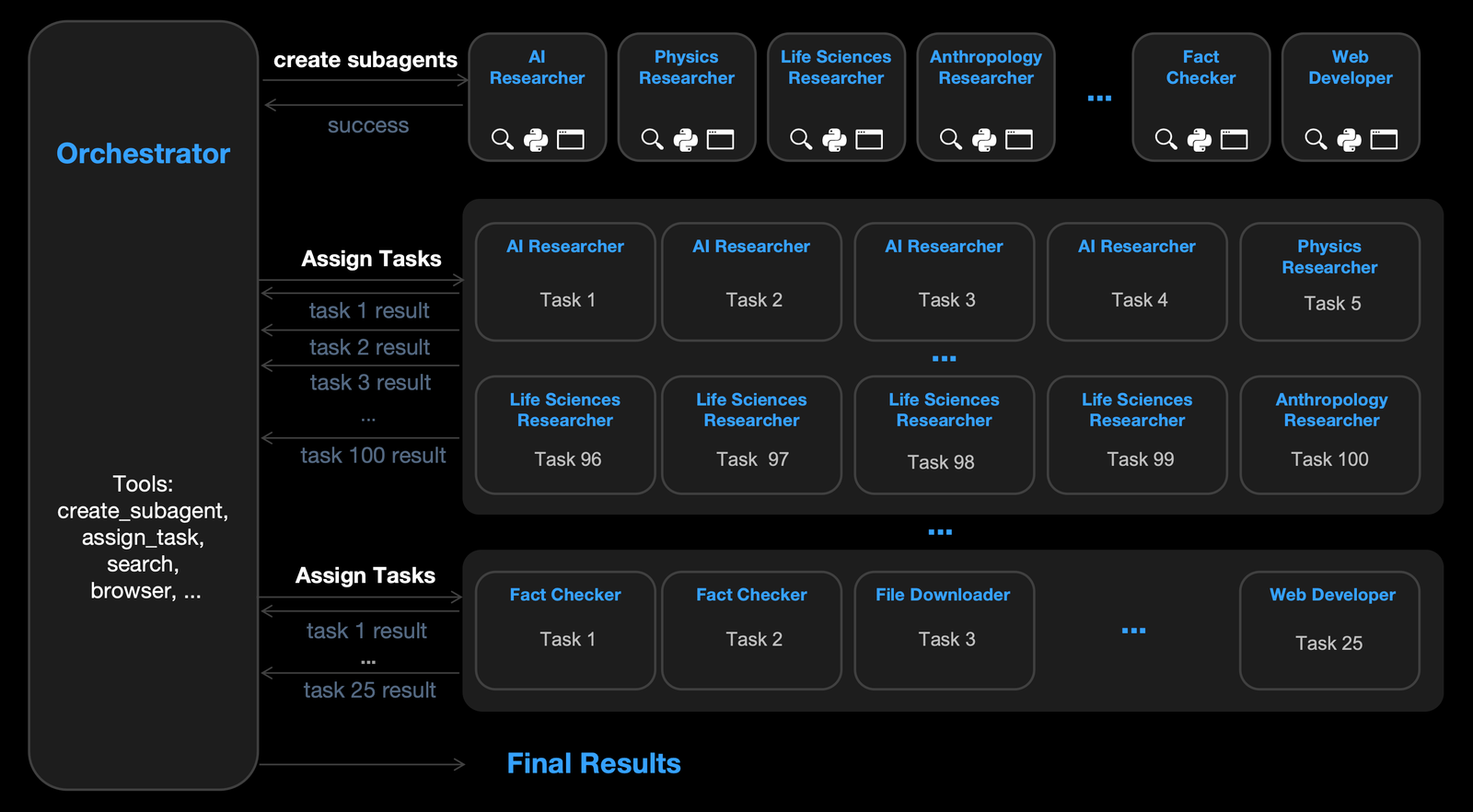

The natively multimodal model excels at coding and introduces "agent swarm" technology: a self-directed orchestration system in which up to 100 sub-agents execute parallel workflows across up to 1,500 coordinated steps without predefined roles. Using Parallel-Agent Reinforcement Learning (PARL), K2.5 can cut execution time by up to 4.5x compared with single-agent setups.
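To make the fan-out idea concrete, here is a minimal sketch of an orchestrator running sub-tasks concurrently under a cap of 100 workers. It is an illustration only, not Moonshot's implementation: `run_sub_agent`, `orchestrate`, and `run_swarm` are hypothetical names, and the sub-agent is a placeholder that sleeps instead of calling a model.

```python
import asyncio

# Cap taken from the "up to 100 sub-agents" figure quoted above.
MAX_SUB_AGENTS = 100


async def run_sub_agent(task: str) -> str:
    """Hypothetical stand-in for one sub-agent; a real one would call a model."""
    await asyncio.sleep(0.01)  # simulate I/O-bound work
    return f"done: {task}"


async def orchestrate(tasks: list[str], max_parallel: int = MAX_SUB_AGENTS) -> list[str]:
    """Run every task concurrently, with at most max_parallel in flight at once."""
    semaphore = asyncio.Semaphore(max_parallel)

    async def guarded(task: str) -> str:
        async with semaphore:
            return await run_sub_agent(task)

    # gather returns results in the same order as the input tasks.
    return await asyncio.gather(*(guarded(t) for t in tasks))


def run_swarm(tasks: list[str], max_parallel: int = MAX_SUB_AGENTS) -> list[str]:
    """Synchronous entry point for the sketch."""
    return asyncio.run(orchestrate(tasks, max_parallel))
```

Calling `run_swarm(["parse", "lint", "test"])` returns one result per task in input order. Because the placeholder work is I/O-bound, total wall time is close to that of the slowest task, not the sum of all tasks, which is the general reason parallel sub-agents can shorten execution.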

Benchmark results show Kimi K2.5 matching or exceeding proprietary competitors in key areas. It outperforms Gemini 3 Pro on the SWE-Bench Verified coding benchmark and beats both GPT 5.2 and Gemini 3 Pro on SWE-Bench Multilingual. For video understanding, it surpasses GPT 5.2 and Claude Opus 4.5 on VideoMMMU.

The model's coding capabilities extend beyond text: developers can supply images or videos and ask it to build similar interfaces. Kimi Code, available in the terminal or integrated into IDEs such as VSCode, Cursor, and Zed, supports visual inputs. It also automatically discovers skills and MCPs from existing workflows and integrates them into its working environment.

Founded by former Google and Meta researcher Yang Zhilin, Moonshot is backed by Alibaba and HongShan. The company has been raising money steadily: it took in $1 billion at a $2.5 billion valuation in its February 2024 Series B, then secured $500 million more at a $4.3 billion valuation in December last year. It is reportedly seeking a new round at a $5 billion valuation.

The release positions Moonshot to compete in the lucrative coding tools market, where
