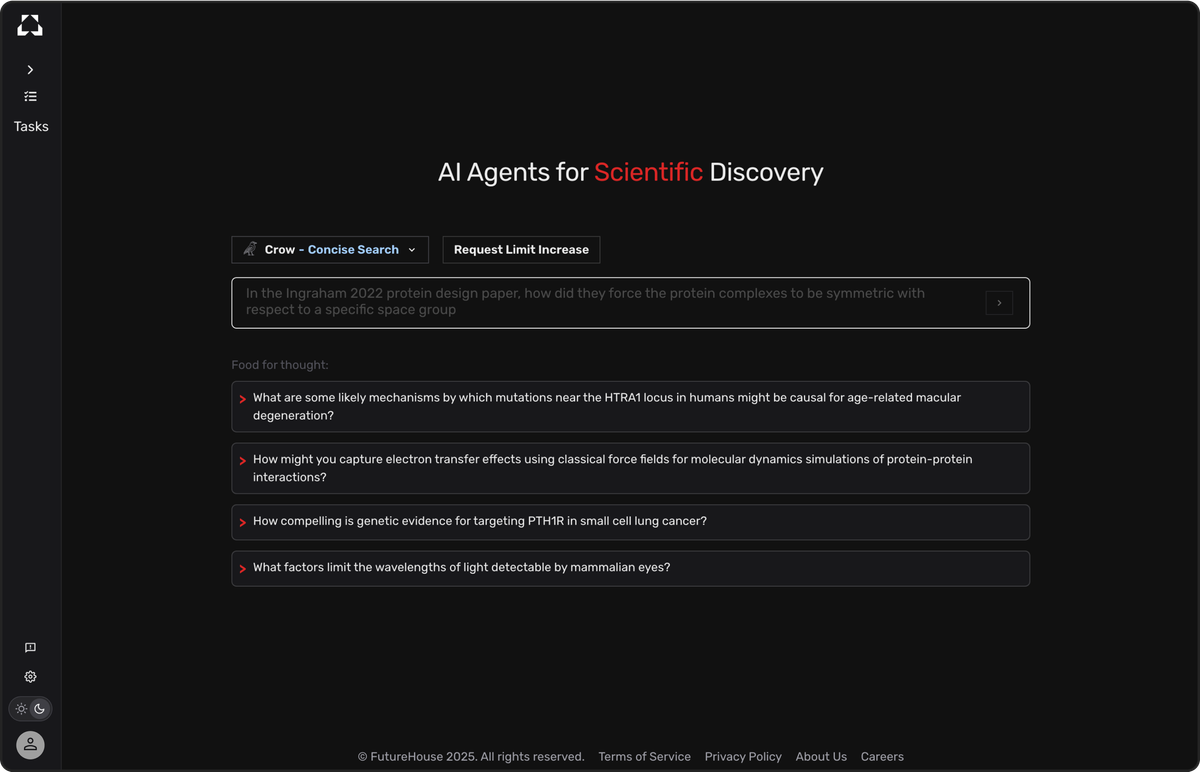

FutureHouse launched a closed beta for Finch, its AI tool for 'data-driven discovery'

FutureHouse, the Eric Schmidt-backed nonprofit that aims to build an "AI scientist" within the next decade, has released a new tool called Finch aimed at accelerating biological discovery. Currently in closed beta, Finch can assist with both open-ended and directed data analysis tasks.