NVIDIA recently unveiled the RTX AI Toolkit, a free set of tools and SDKs designed to help developers integrate, optimize, and deploy AI models within their Windows applications. It aims to deliver strong AI performance for both local and cloud deployments while simplifying the use of generative AI models, making them accessible even to developers without prior experience in AI frameworks.

The Toolkit provides an end-to-end workflow addressing the most prominent challenges for integrating AI capabilities. Developers can leverage pre-trained models from Hugging Face, customize them using fine-tuning techniques in tools like NVIDIA AI Workbench to meet individual application requirements, and then quantize them using tools like TensorRT Model Optimizer and TensorRT Cloud to ensure efficient performance across a wide range of NVIDIA GeForce RTX GPUs, both locally and in the cloud.

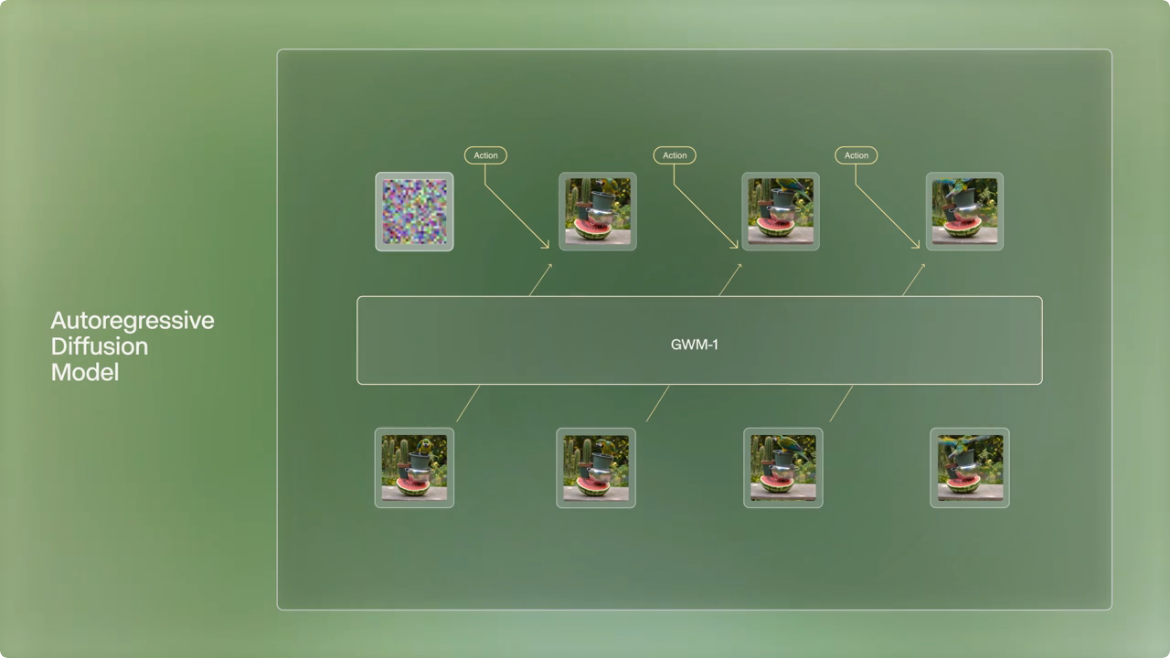
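To make the quantization step more concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It illustrates only the general idea of storing weights as small integers plus a scale factor; it is not the TensorRT Model Optimizer API, and the function names `quantize_int8` and `dequantize` are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale for the whole tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


# Illustrative values only, not taken from any real model.
weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
```

Each weight now needs one byte instead of the four used by a 32-bit float, at the cost of a rounding error of at most half the scale per value. Production tools typically use more elaborate schemes (per-channel scales, calibration data, lower bit widths), which this sketch does not attempt to reproduce.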

Once models are fine-tuned and optimized, the RTX AI Toolkit provides tools for on-device and cloud deployments. The NVIDIA AI Inference Manager (AIM), currently in early access, handles the configuration required for AI inference and integration. It also determines whether a PC can run the model locally or should use the cloud, based on runtime compatibility checks and developer policies. AIM lets developers use NVIDIA NIM for cloud deployment or TensorRT for on-device deployment.
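The local-versus-cloud decision described above can be pictured with a small sketch. This is not AIM's actual API, which the article does not describe; `DeviceInfo`, `Policy`, `choose_backend`, and the VRAM threshold are all hypothetical names and values chosen only to show how a compatibility check and a developer policy might combine.

```python
from dataclasses import dataclass


@dataclass
class DeviceInfo:
    """Hypothetical result of a runtime compatibility check."""
    has_rtx_gpu: bool
    vram_gb: float


@dataclass
class Policy:
    """Hypothetical developer policy for where inference may run."""
    min_vram_gb: float
    allow_cloud: bool


def choose_backend(device: DeviceInfo, policy: Policy) -> str:
    """Prefer on-device inference; fall back to the cloud if the policy allows it."""
    if device.has_rtx_gpu and device.vram_gb >= policy.min_vram_gb:
        return "local"
    if policy.allow_cloud:
        return "cloud"
    return "unavailable"


# Assumed example: a model that needs 8 GB of VRAM, with cloud fallback enabled.
policy = Policy(min_vram_gb=8.0, allow_cloud=True)
capable_pc = choose_backend(DeviceInfo(has_rtx_gpu=True, vram_gb=12.0), policy)
limited_pc = choose_backend(DeviceInfo(has_rtx_gpu=False, vram_gb=0.0), policy)
```

Keeping the policy separate from the device check means the same application build can behave differently per deployment, for example by disabling the cloud fallback for users who require on-device processing.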

The RTX AI Toolkit is already being adopted by creative software providers Adobe, Blackmagic Design, and Topaz Labs to deliver AI-accelerated apps tailored for RTX PCs. Components of the Toolkit are also available as integrations in developer frameworks such as LangChain and LlamaIndex, while ecosystem tools, including Automatic1111, Comfy.UI, Jan.AI, OobaBooga, and Sanctum.AI, are accelerated with the RTX AI Toolkit to further simplify and optimize the building process. Developer access to the NVIDIA RTX AI Toolkit will be available shortly.
