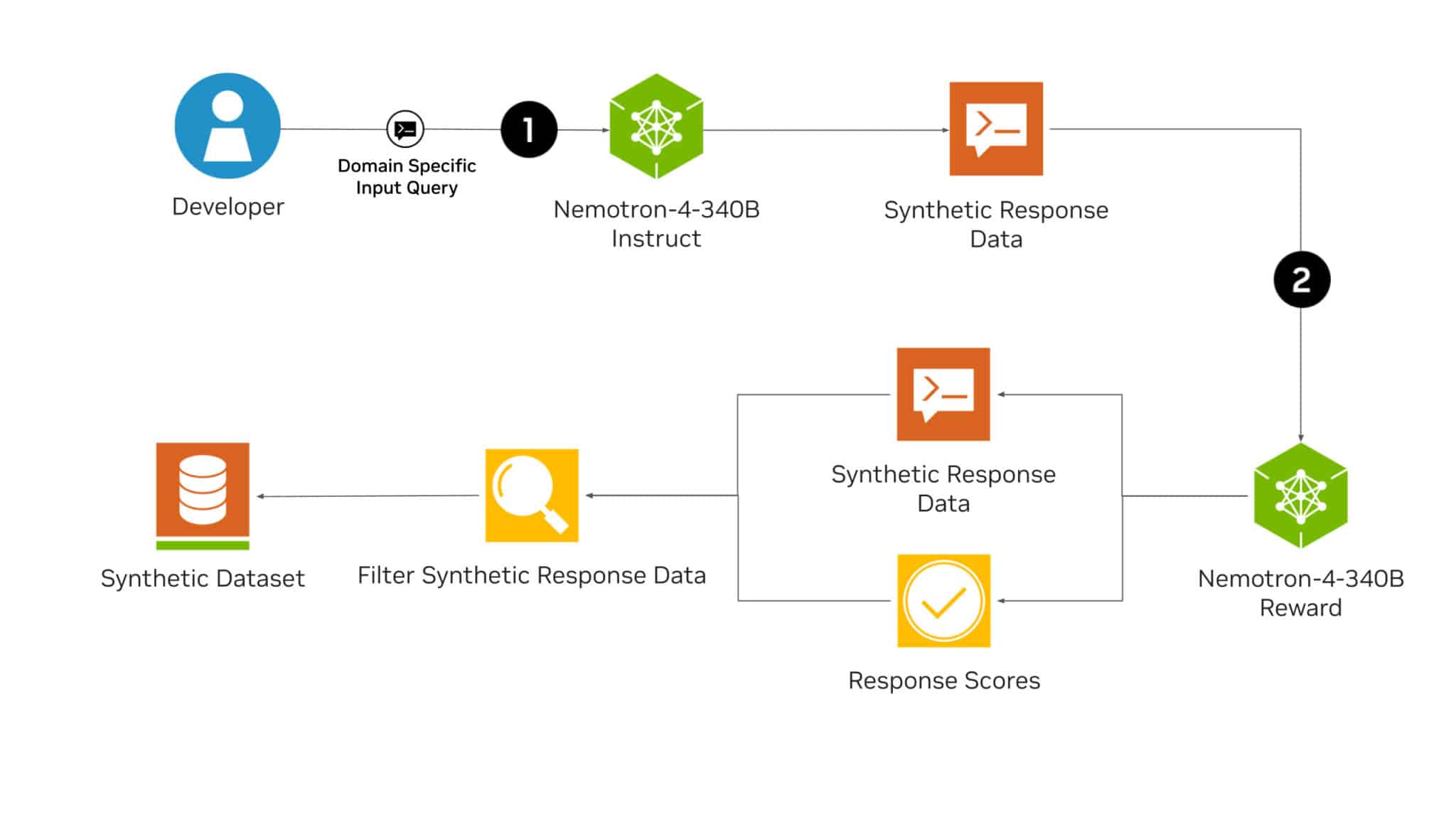

NVIDIA's Nemotron-4 340B is an open family of models that together form an end-to-end pipeline for generating synthetic data to train large language models (LLMs), particularly where access to large, diverse labeled datasets is cost-prohibitive. The family comprises base, instruct, and reward models, optimized to work with NVIDIA NeMo for model training and with the open-source NVIDIA TensorRT-LLM library for inference.

The instruct model generates diverse synthetic data that mimics the characteristics of real-world data, while the reward model evaluates the generated responses and filters them based on attributes such as helpfulness and coherence. At the time of writing, the Nemotron-4 340B Reward model ranks first on the Hugging Face RewardBench leaderboard. Researchers can also use the Nemotron-4 340B Base model, together with the HelpSteer2 dataset, to build custom instruct and reward models on their own proprietary data.
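The generate-then-filter loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not NVIDIA's implementation: `generate` and `score` are hypothetical stand-ins for calls to the instruct and reward models, and the stubs below return canned values instead of invoking either model. The attribute names follow the ones the post mentions (helpfulness, coherence); the threshold values are arbitrary assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Sample:
    prompt: str
    response: str
    scores: Dict[str, float]


def synthesize(
    prompts: List[str],
    generate: Callable[[str], List[str]],            # hypothetical wrapper around the instruct model
    score: Callable[[str, str], Dict[str, float]],   # hypothetical wrapper around the reward model
    thresholds: Dict[str, float],                    # assumed per-attribute minimum scores
) -> List[Sample]:
    """Generate candidate responses and keep only those meeting every threshold."""
    kept: List[Sample] = []
    for prompt in prompts:
        for response in generate(prompt):
            scores = score(prompt, response)
            # A missing attribute counts as failing its threshold.
            if all(scores.get(attr, float("-inf")) >= t for attr, t in thresholds.items()):
                kept.append(Sample(prompt, response, scores))
    return kept


# Stub models for illustration only; they do not call Nemotron-4 340B.
def fake_generate(prompt: str) -> List[str]:
    return [prompt + " short answer", prompt + " a longer, more detailed answer"]


def fake_score(prompt: str, response: str) -> Dict[str, float]:
    detailed = "detailed" in response
    return {"helpfulness": 3.5 if detailed else 1.0, "coherence": 3.8}


data = synthesize(["Explain RLHF."], fake_generate, fake_score,
                  {"helpfulness": 3.0, "coherence": 3.0})
print(len(data))  # 1: the short answer fails the helpfulness threshold
```

In a real pipeline the stubs would be replaced by inference calls to the deployed models; keeping them as injected callables makes the filtering logic easy to test independently of any serving stack.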

Nemotron-4 340B Instruct underwent rigorous safety evaluations, but users should still perform their own assessments to ensure the model's outputs are suitable for their intended use cases. Nemotron-4 340B can be downloaded from the NVIDIA NGC catalog and from Hugging Face, and the models will soon be available at ai.nvidia.com as an NVIDIA NIM microservice. In-depth technical details are available in the research papers on the model and the dataset.
