Researchers at NVIDIA have recently proposed combining model pruning with distillation as an efficient way to obtain performant small language models that are less resource-intensive and more affordable to deploy. In their research paper, the team demonstrates the benefits of this approach by applying it to Nemotron-4 15B to obtain Minitron 8B and 4B. According to the paper, models obtained via pruning and distillation:

- fare significantly better than a 4B parameter model trained from scratch and a 4B parameter model pruned from a 15B one and retrained using traditional methods;

- require fewer training tokens and computing resources to perform competitively in comparison with similar-sized models; and

- display performance comparable to Mistral 7B, Gemma 7B, and Llama-3 8B, which are trained on significantly more tokens (up to 15T, compared to Minitron's ~100B).

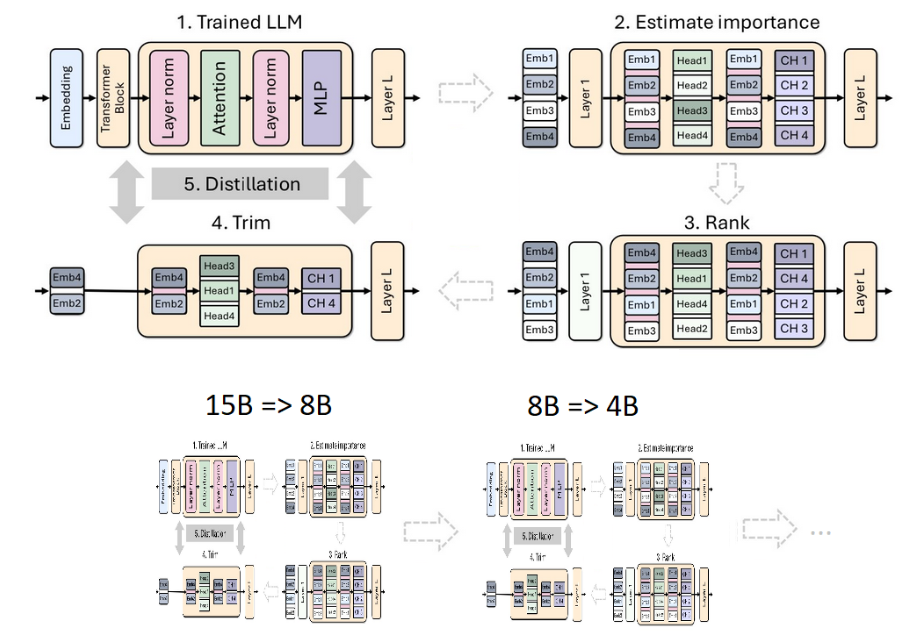

To obtain a smaller model, the research team proceeded in three stages. First, the team evaluated the importance of each component in the 15B model (layer, neuron, head, and embedding channel), ranked them, and pruned the model to the desired 8B parameter size. Then, the resulting model was subjected to light retraining using model distillation, with the 15B model as the teacher and the 8B model as the student. Finally, the retrained 8B model became the starting point and teacher for the 4B model. In addition to describing this process in detail, the research paper outlines some best practices to consider for future applications of the pruning and distillation approach.
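The first two stages can be illustrated with a toy sketch. This is not the paper's implementation: it assumes that a neuron's importance is its mean absolute activation over a small calibration batch, and that the distillation loss is the KL divergence between the teacher's and the student's output distributions. Neither detail is stated above, and the function names are hypothetical.

```python
import math

def neuron_importance(activations):
    """Score each neuron by its mean absolute activation (assumed metric).

    activations: list of samples, each a list of per-neuron values.
    """
    n_neurons = len(activations[0])
    return [
        sum(abs(sample[j]) for sample in activations) / len(activations)
        for j in range(n_neurons)
    ]

def select_neurons(importance, keep):
    """Rank neurons by score and return the indices of the `keep` best,
    restored to their original order."""
    ranked = sorted(range(len(importance)),
                    key=lambda j: importance[j], reverse=True)
    return sorted(ranked[:keep])

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=1.0):
    """KL(teacher || student) for one token position (assumed loss)."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Toy calibration batch: 3 samples, 4 neurons (made-up values).
calibration = [
    [0.10, -2.0, 0.0, 1.5],
    [-0.20, 1.8, 0.1, -1.2],
    [0.05, -2.2, 0.0, 0.9],
]
scores = neuron_importance(calibration)
kept = select_neurons(scores, keep=2)  # indices of neurons that survive pruning

# The student is penalized in proportion to how far its output
# distribution drifts from the teacher's.
loss = distillation_loss([2.0, 1.0, 0.1], [1.5, 1.2, 0.3])
```

In this toy setup, the two neurons with the largest average activation magnitude are kept, and the loss is zero only when the student reproduces the teacher's distribution exactly. A real pipeline would apply the same rank-then-prune idea to each of the component types listed above and minimize the loss over many tokens.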

NVIDIA recently put those practices to the test by applying them to Meta's Llama 3.1 8B model, creating Llama-3.1-Minitron 4B. This new model performs competitively against other state-of-the-art open-source models of similar size, including Phi-2 2.7B and Gemma2 2.6B. Llama-3.1-Minitron 4B will be available in the NVIDIA Hugging Face collection soon.
