On Tuesday, OpenAI released gpt-oss-120b and gpt-oss-20b, two text-only "open" reasoning models that the company claims perform comparably to its own closed-source o3-mini and o4-mini models. The models were released under the Apache 2.0 license, which permits both commercial and non-commercial use.

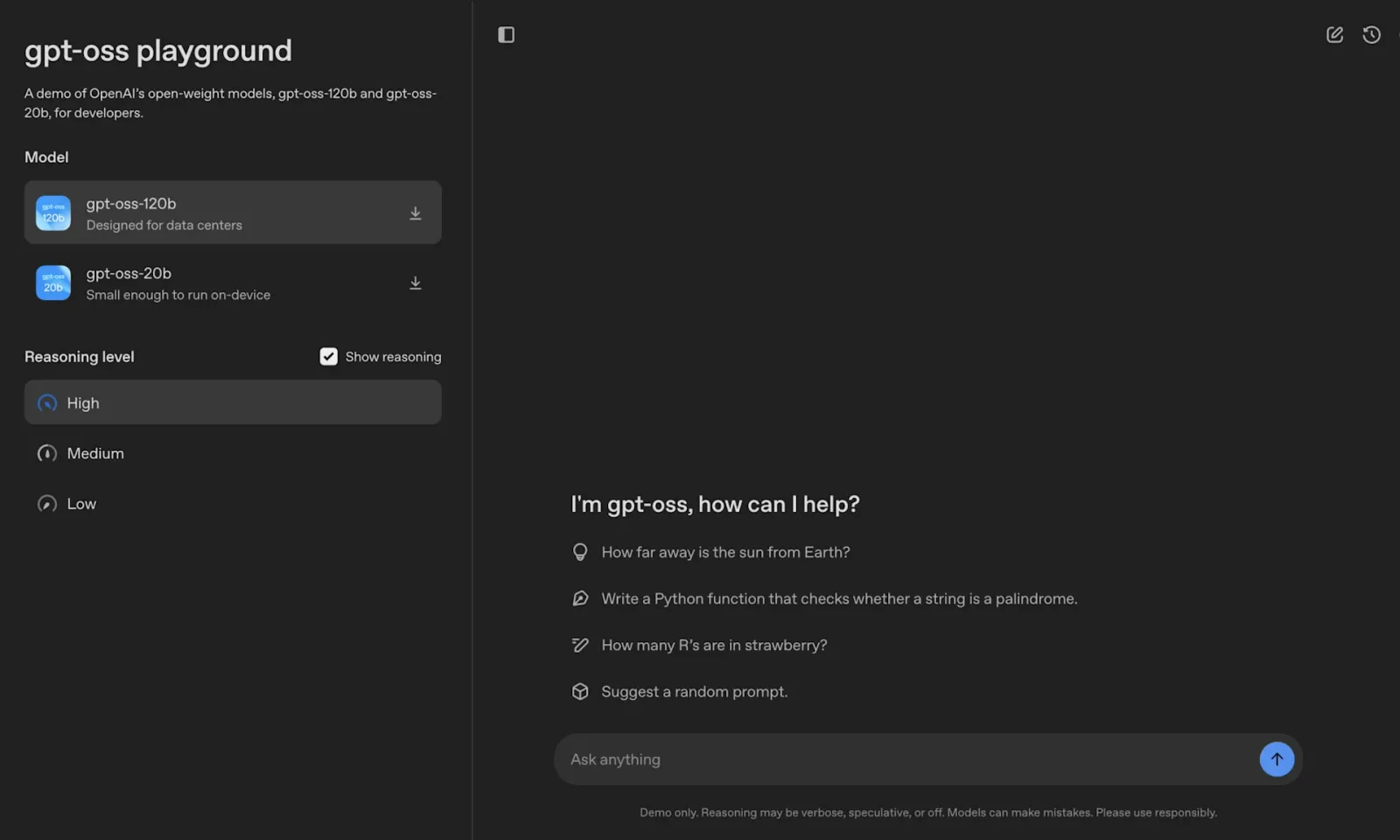

These releases mark OpenAI's first open-weight models since GPT-2 in 2019, a significant shift for a company that has primarily pursued closed-source development. The larger gpt-oss-120b model can run on a single 80GB Nvidia GPU, making it well suited to data centers and high-end hardware. The lighter gpt-oss-20b, meanwhile, can run on consumer laptops with at least 16GB of memory.

Both models perform strongly on reasoning benchmarks, outperforming similarly sized open models from competitors such as DeepSeek and Qwen. On the competitive coding benchmark Codeforces, gpt-oss-120b and gpt-oss-20b scored Elo ratings of 2622 and 2516, respectively, when allowed to use tools. According to OpenAI, gpt-oss-120b outperforms o3-mini and closely matches or even exceeds o4-mini.

The launch comes as Chinese AI labs increasingly dominate the open-source space and as the Trump administration pushes U.S. companies to release more open technologies, which it argues could have "geostrategic value" for the country. CEO Sam Altman framed the release as advancing OpenAI's mission to "ensure AGI benefits all of humanity" while promoting "an open AI stack created in the United States, based on democratic values."