OpenAI is expanding its enterprise support with features aimed at large organizations and developers who need more flexible scaling options. The company's newest offerings cover security, administrative control, and cost management. The new security features are Private Link, which connects Azure and OpenAI with minimal exposure to the rest of the internet, and native Multi-Factor Authentication (MFA). These join OpenAI's existing security measures, including SOC 2 Type II certification, single sign-on (SSO), data encryption at rest using AES-256 and in transit using TLS 1.2, and role-based access controls. OpenAI also offers Business Associate Agreements for healthcare customers requiring HIPAA compliance and a zero data retention policy for qualifying customers.

The new Projects feature gives administrators fine-grained control and oversight over individual OpenAI projects. Organizations can scope roles and API keys to particular projects, restrict or allow model availability, set usage-based and rate-based limits, and create service account API keys that grant project access without being tied to individual users.

The Assistants API was also updated. It now includes improved retrieval (supporting up to 10,000 files per assistant), streaming support for real-time, conversational responses (a highly requested feature), `vector_store` objects for better file management, control over token usage, tool choice parameters, and support for fine-tuned GPT-3.5 Turbo models.

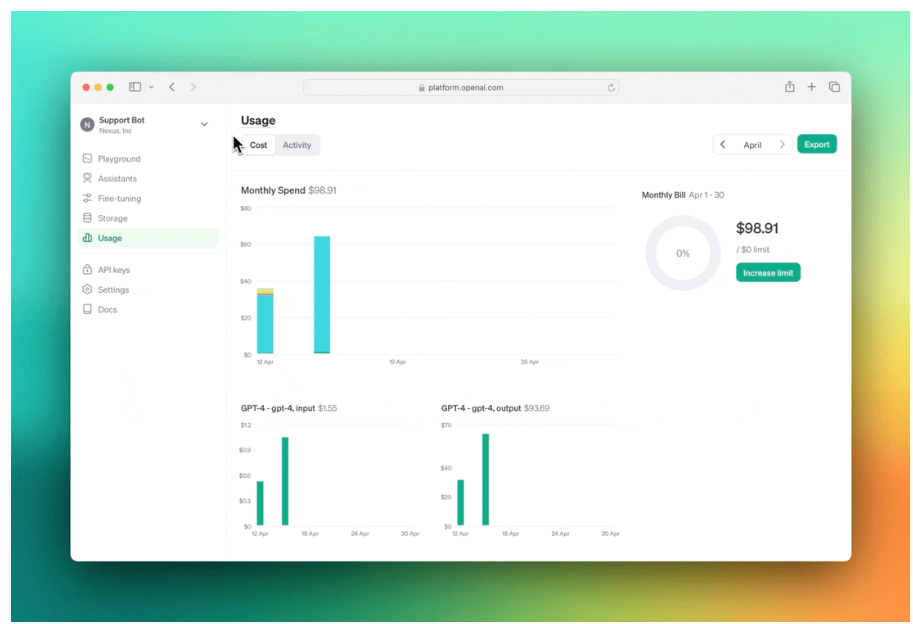

Finally, the cost management options now include discounts of 10–50% on GPT-4 or GPT-4 Turbo, scaled to the size of the committed throughput, and the new Batch API for running non-urgent workloads asynchronously. Batch API requests cost half as much as the equivalent synchronous requests, offer significantly higher rate limits, and deliver results within 24 hours, making them well suited to use cases like model evaluation, offline classification, summarization, and synthetic data generation.
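To make the Batch API workflow more concrete, here is a minimal sketch of how a batch input file might be prepared and how the 50% pricing works out. It assumes the JSONL request format from OpenAI's Batch API documentation (one JSON object per line with `custom_id`, `method`, `url`, and `body`), so check the field names against the current docs before relying on them. The helper names, the default model, and the dollar figure are hypothetical and used only for illustration.

```python
import json


def build_batch_lines(prompts, model="gpt-3.5-turbo", endpoint="/v1/chat/completions"):
    """Return JSONL text with one chat completion request per prompt.

    The field layout is an assumption based on OpenAI's Batch API docs;
    the model name is a placeholder.
    """
    lines = []
    for i, prompt in enumerate(prompts):
        request = {
            "custom_id": f"request-{i}",  # used to match results back to inputs
            "method": "POST",
            "url": endpoint,
            "body": {
                "model": model,
                "messages": [{"role": "user", "content": prompt}],
            },
        }
        lines.append(json.dumps(request))
    return "\n".join(lines)


def batch_cost(sync_cost_usd, discount=0.5):
    """Estimate batch cost given the cost of the same work run synchronously."""
    return sync_cost_usd * (1 - discount)


if __name__ == "__main__":
    jsonl = build_batch_lines(["Summarize document A.", "Summarize document B."])
    print(jsonl)
    # Hypothetical figure: work that would cost $10.00 synchronously.
    print(f"Estimated batch cost: ${batch_cost(10.0):.2f}")
```

The resulting text would be saved as a `.jsonl` file, uploaded, and submitted as a batch with a 24-hour completion window. Those network steps are left out here so the sketch runs without an API key.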
