Over the last few days, OpenAI has announced two alpha programs through different channels. The first is a version of GPT-4o with an expanded output token limit. GPT-4o originally launched with a 4,000-token output limit, which GPT-4o Long Output extends 16x, to 64K tokens. Customers selected for the alpha program can access the model through the API under the name `gpt-4o-64k-output-alpha`.
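For illustration, here is a minimal sketch of how a selected customer might build a request for the alpha model. It assumes the model is served through OpenAI's standard Chat Completions endpoint and accepts `max_tokens` values up to the 64K limit; the helper name `build_long_output_request` is hypothetical. The request is only constructed, not sent, so the snippet runs without network access or alpha credentials.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"
MODEL = "gpt-4o-64k-output-alpha"  # alpha model name from the announcement


def build_long_output_request(prompt: str, max_tokens: int = 64_000) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a Chat Completions request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        # Assumption: the alpha model accepts max_tokens up to its 64K output limit.
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Reads the key from the environment; an empty key is fine for building only.
            "Authorization": f"Bearer {os.environ.get('OPENAI_API_KEY', '')}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_long_output_request("Translate the attached manual into French.")
    print(req.full_url, json.loads(req.data)["model"])
    # Sending it requires alpha access: urllib.request.urlopen(req)
```

Only accounts admitted to the alpha program would get a successful response; for everyone else, the same request with the model name swapped for regular GPT-4o would be capped at the standard output limit.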

Since longer completions are more expensive, GPT-4o Long Output is slightly more costly than GPT-4o, at $6.00 per million input tokens and $18.00 per million output tokens. Still, OpenAI expects the selected partners testing this model to find it useful for use cases that typically require longer completions, such as translation or text extraction. Based on feedback from the alpha program, OpenAI will decide whether to make GPT-4o Long Output more broadly available.
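To make the pricing concrete, the short sketch below estimates the cost of a single request from the alpha prices quoted above. The function name and the example token counts are illustrative assumptions, not figures from OpenAI.

```python
# Alpha pricing for GPT-4o Long Output, in USD per million tokens.
INPUT_PRICE_PER_M = 6.00
OUTPUT_PRICE_PER_M = 18.00


def long_output_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request (hypothetical helper)."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_M
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_M
    return input_cost + output_cost


if __name__ == "__main__":
    # Example: a 10,000-token prompt that produces a full 64,000-token completion.
    cost = long_output_cost(10_000, 64_000)
    print(f"${cost:.4f}")
```

With these example numbers, the input costs $0.06 and the output $1.152, so a maximal-length completion costs roughly $1.21, with output tokens dominating the bill.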

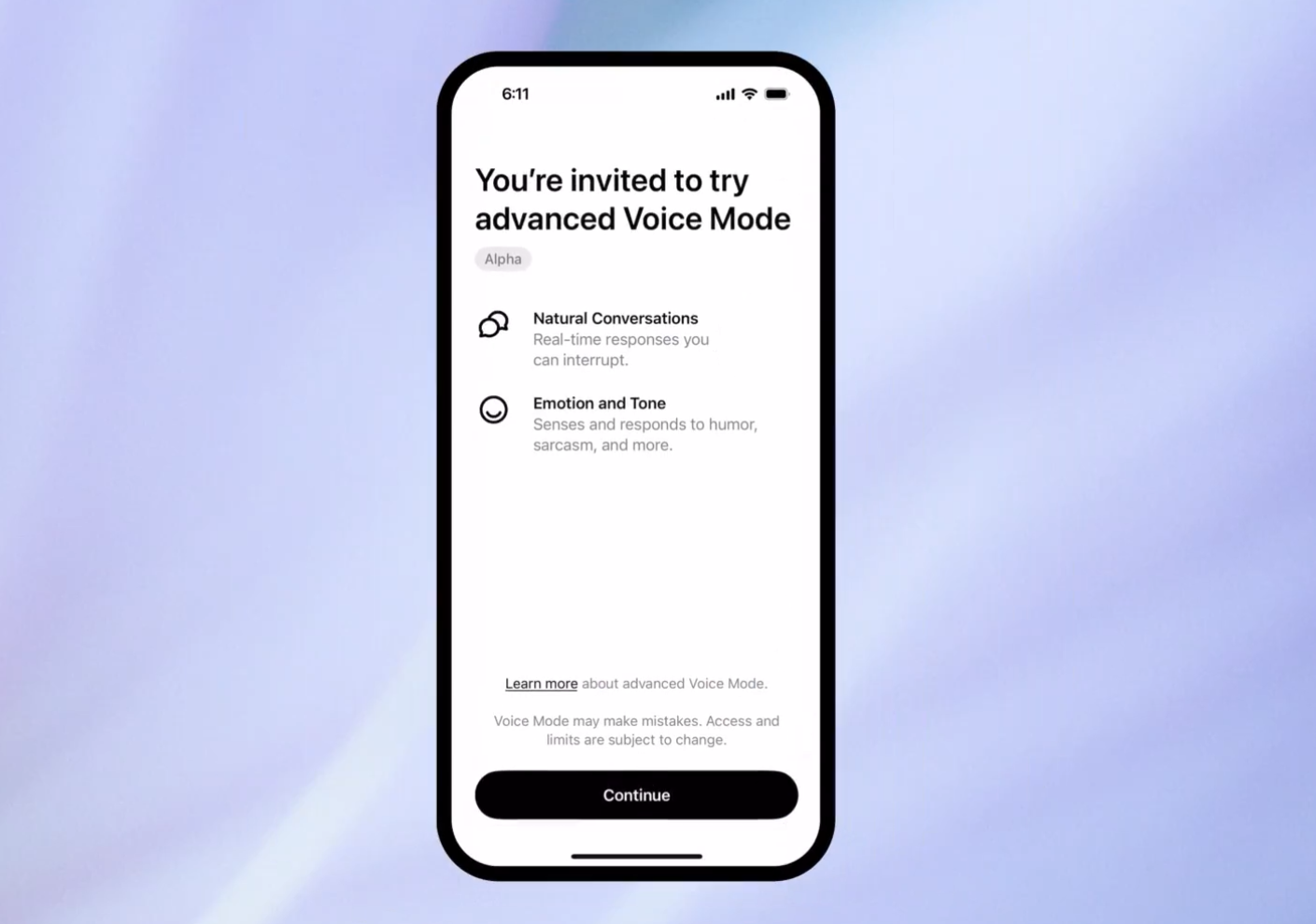

OpenAI also announced on X that it would start testing Advanced Voice Mode, a feature the company had temporarily put on hold, citing the need to make several final adjustments, including improving its ability to "detect and refuse certain content". Advanced Voice Mode will first roll out to a small subset of ChatGPT Plus users and then expand gradually until all Plus users have access by this fall. Users who gain access to the feature will receive an email and an in-app message. The video and screen-sharing features announced with the launch of GPT-4o have not yet been released.

OpenAI stated that, in preparation for rolling out Advanced Voice Mode, it tested the feature with more than 100 external red teamers across 45 languages, implemented protections so that the feature only speaks in the four preset voices, and set guardrails against violent or copyrighted content. OpenAI plans to release a full report on the new Voice Mode's capabilities, limitations, and safety evaluations soon.
