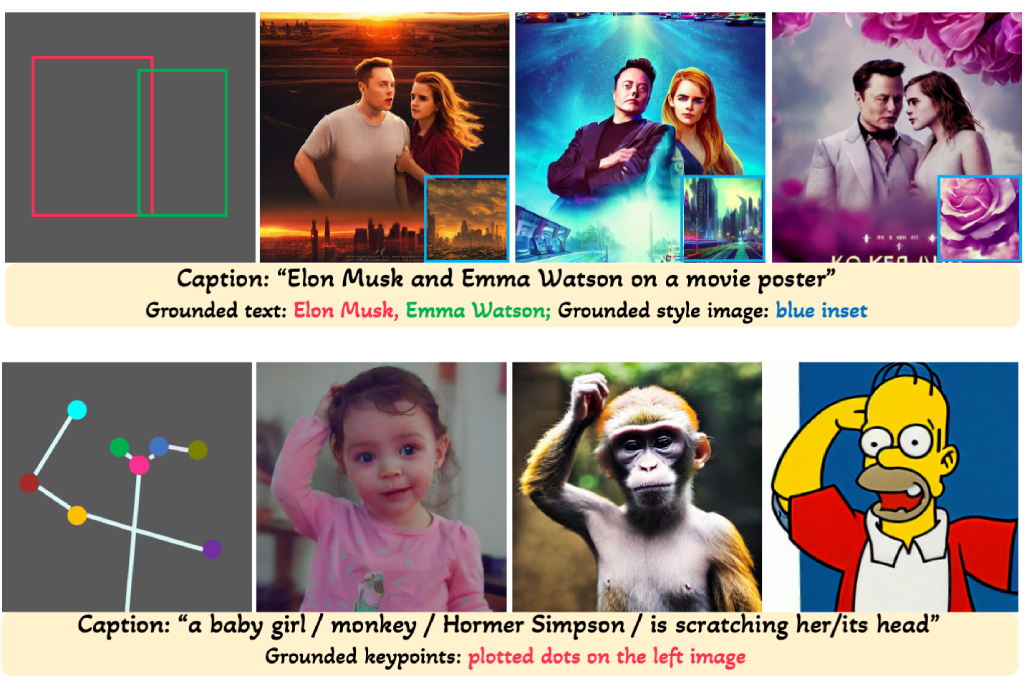

3D-aware Conditional Image Synthesis

This paper describes a 3D-aware conditional generative model for controllable photorealistic image synthesis. It integrates 3D representations with conditional generative modeling, which enables controllable, high-resolution, 3D-aware rendering conditioned on user inputs.
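
To make the idea of conditioning a 3D representation on user input concrete, the following is a minimal sketch and not the paper's method. It assumes a toy radiance-field-style representation rendered by standard volume compositing along a ray; the summary above does not specify the 3D representation, the network architecture, or the form of the user input. All names (`make_weights`, `conditional_field`, `render_ray`) and the random, untrained weights are hypothetical and exist only for illustration.

```python
import numpy as np


def make_weights(cond_dim, hidden=16, seed=0):
    """Random weights for a toy two-layer field (hypothetical, untrained)."""
    rng = np.random.default_rng(seed)
    return {
        "w1": rng.normal(size=(3 + cond_dim, hidden)),
        "w2": rng.normal(size=(hidden, 4)),
    }


def conditional_field(points, cond, weights):
    """Map 3D points plus a condition vector to (density, rgb)."""
    # Attach the same condition vector to every sample point.
    cond_tiled = np.broadcast_to(cond, points.shape[:-1] + cond.shape)
    features = np.concatenate([points, cond_tiled], axis=-1)
    hidden = np.tanh(features @ weights["w1"])
    out = hidden @ weights["w2"]
    density = np.log1p(np.exp(out[..., 0]))      # softplus: non-negative density
    rgb = 1.0 / (1.0 + np.exp(-out[..., 1:4]))   # sigmoid: colors in [0, 1]
    return density, rgb


def render_ray(origin, direction, cond, weights, near=0.5, far=2.5, n_samples=32):
    """Composite one pixel color along a ray with standard volume rendering."""
    t = np.linspace(near, far, n_samples)
    points = origin + t[:, None] * direction
    density, rgb = conditional_field(points, cond, weights)
    delta = np.full(n_samples, (far - near) / (n_samples - 1))
    alpha = 1.0 - np.exp(-density * delta)
    # Transmittance: fraction of light that reaches each sample unoccluded.
    transmittance = np.cumprod(np.concatenate([[1.0], 1.0 - alpha[:-1]]))
    sample_weights = transmittance * alpha
    return (sample_weights[:, None] * rgb).sum(axis=0)


weights = make_weights(cond_dim=4)
origin = np.array([0.0, 0.0, -1.0])
direction = np.array([0.0, 0.0, 1.0])
pixel_a = render_ray(origin, direction, np.zeros(4), weights)
pixel_b = render_ray(origin, direction, np.ones(4), weights)
```

In this sketch the camera ray is fixed and only the condition vector changes between `pixel_a` and `pixel_b`, so any difference in the rendered color comes from the conditioning alone. Varying `origin` and `direction` while holding the condition fixed would instead correspond to changing the viewpoint.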