An important goal of artificial intelligence is to develop language models that can generalize to unseen tasks described as instructions and use them to solve complex problems. Fine-tuning language models on a collection of datasets phrased as instructions has been shown to improve both model performance and generalization to unseen tasks.

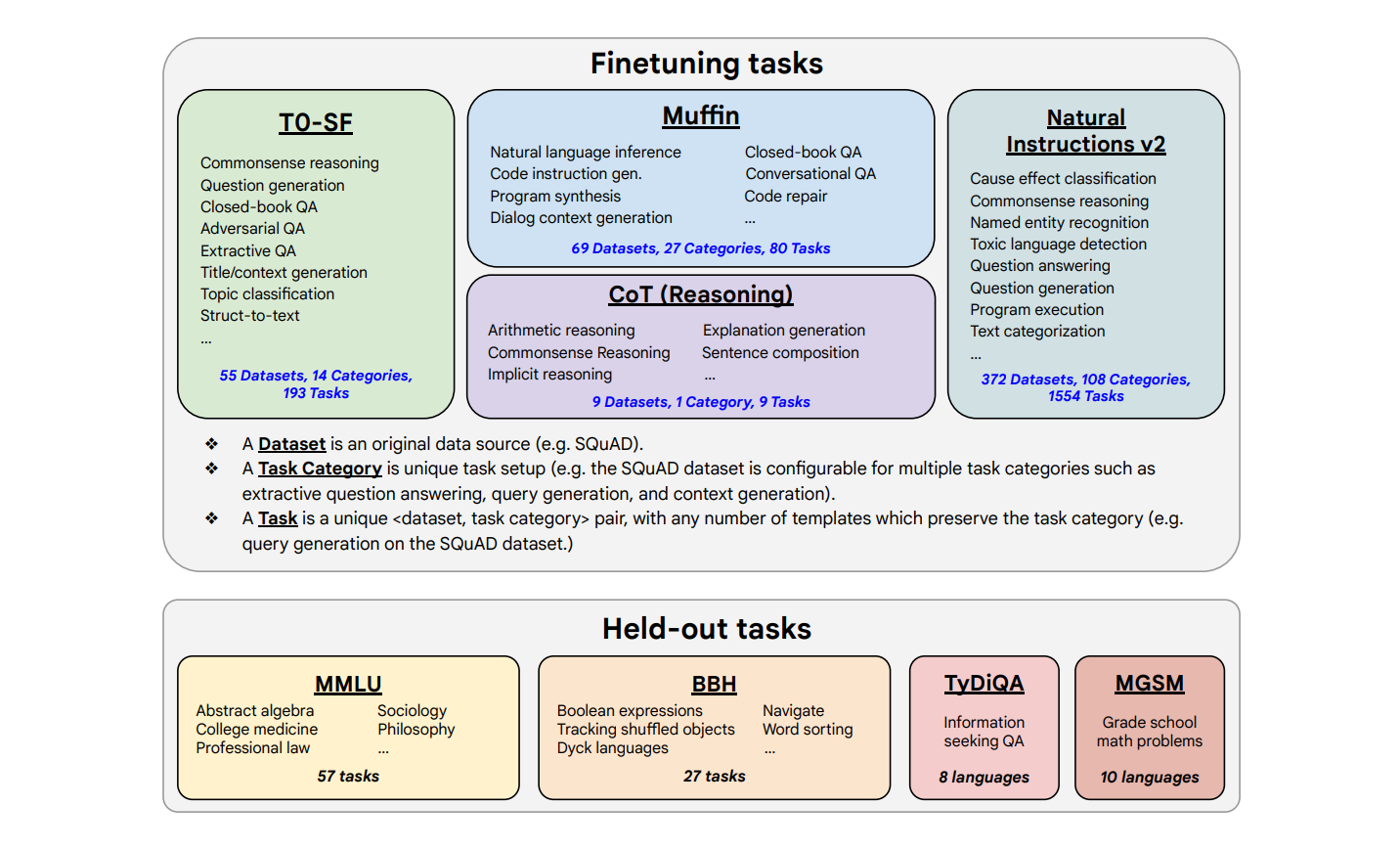

Google has presented work that advances instruction fine-tuning in several ways. In particular, the authors explore fine-tuning with a focus on scaling the number of tasks, scaling model size, and fine-tuning on chain-of-thought (CoT) data. Instruction fine-tuning along these axes significantly improves performance across model families (PaLM, T5, U-PaLM), prompting setups (zero-shot, few-shot, CoT), and evaluation benchmarks (MMLU, BBH, TyDiQA, MGSM, open-ended generation).
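The three prompting setups differ mainly in how the prompt is assembled. Here is a minimal sketch, assuming a plain `Q:`/`A:` format. The function `build_prompt`, the exemplar wording, the "So the answer is" phrasing, and the "Let's think step by step." trigger for zero-shot CoT are illustrative assumptions, not the paper's exact prompts.

```python
from typing import Optional, Sequence, Tuple

# (question, answer, rationale); the rationale is only needed for CoT exemplars.
Exemplar = Tuple[str, str, Optional[str]]


def build_prompt(question: str,
                 exemplars: Sequence[Exemplar] = (),
                 chain_of_thought: bool = False) -> str:
    """Assemble a zero-shot, few-shot, or chain-of-thought prompt (hypothetical format).

    - zero-shot: no exemplars
    - few-shot: exemplars show question/answer pairs
    - CoT: exemplars also show a rationale before the answer; with no
      exemplars, a step-by-step trigger phrase is appended instead
    """
    parts = []
    for q, a, rationale in exemplars:
        if chain_of_thought:
            if rationale is None:
                raise ValueError("CoT exemplars need a rationale")
            parts.append(f"Q: {q}\nA: {rationale} So the answer is {a}.")
        else:
            parts.append(f"Q: {q}\nA: {a}")
    # Zero-shot CoT: no worked examples, so nudge the model to reason explicitly.
    trigger = " Let's think step by step." if chain_of_thought and not exemplars else ""
    parts.append(f"Q: {question}\nA:{trigger}")
    return "\n\n".join(parts)
```

The same question can then be posed zero-shot (`build_prompt(q)`), few-shot (`build_prompt(q, shots)`), or with CoT exemplars (`build_prompt(q, shots, chain_of_thought=True)`), which is what makes it possible to compare one fine-tuned model across all three setups.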

In the new work, the authors apply instruction fine-tuning to a wide range of model families, including T5, PaLM, and U-PaLM. These models range in size from Flan-T5-small (80M parameters) to PaLM and U-PaLM (540B parameters). Every model is trained with the same procedure, apart from a few hyperparameters: learning rate, batch size, dropout, and the number of fine-tuning steps. Training uses a constant learning rate schedule and the Adafactor optimizer. Packing combines multiple training examples into a single sequence, with inputs separated from targets by an end-of-sequence token.
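Packing is easiest to see on token ids. Below is a minimal sketch of greedy packing, assuming a decoder-only layout in which each example is written as input, EOS, target, EOS, and assuming T5's vocabulary ids (1 for `</s>`, 0 for padding). The segment ids, loss mask, and cross-example attention mask are common companions to packing rather than details stated in this article; the real T5X/SeqIO pipeline is more involved, and encoder-decoder models such as T5 typically keep inputs and targets in separate encoder and decoder sequences.

```python
from typing import Dict, List, Sequence, Tuple

EOS_ID = 1  # T5's SentencePiece vocabulary uses 1 for </s>
PAD_ID = 0  # ... and 0 for padding

Example = Tuple[Sequence[int], Sequence[int]]  # (input token ids, target token ids)


def _finish_row(tokens: List[int], segs: List[int], loss: List[int],
                max_len: int, pad_id: int) -> Dict[str, List[int]]:
    """Pad one packed row out to max_len."""
    pad = max_len - len(tokens)
    return {
        "tokens": tokens + [pad_id] * pad,
        "segment_ids": segs + [0] * pad,  # 0 marks padding
        "loss_mask": loss + [0] * pad,    # no loss on padding
    }


def pack_examples(examples: Sequence[Example], max_len: int,
                  eos_id: int = EOS_ID, pad_id: int = PAD_ID) -> List[Dict[str, List[int]]]:
    """Greedily pack (input, target) pairs into fixed-length rows.

    Each example is laid out as: input, EOS, target, EOS (an assumption).
    segment_ids number the examples within a row (1, 2, ...), and
    loss_mask is 1 only on target tokens and the EOS that ends them.
    """
    rows: List[Dict[str, List[int]]] = []
    tokens: List[int] = []
    segs: List[int] = []
    loss: List[int] = []
    seg = 0
    for inp, tgt in examples:
        piece = list(inp) + [eos_id] + list(tgt) + [eos_id]
        if len(piece) > max_len:
            raise ValueError(f"example of length {len(piece)} exceeds max_len={max_len}")
        # Start a new row when the current one cannot hold this example.
        if len(tokens) + len(piece) > max_len:
            rows.append(_finish_row(tokens, segs, loss, max_len, pad_id))
            tokens, segs, loss, seg = [], [], [], 0
        seg += 1
        tokens += piece
        segs += [seg] * len(piece)
        loss += [0] * (len(inp) + 1) + [1] * (len(tgt) + 1)
    if tokens:
        rows.append(_finish_row(tokens, segs, loss, max_len, pad_id))
    return rows


def attention_mask(segment_ids: Sequence[int]) -> List[List[bool]]:
    """Causal mask that also blocks attention across packed-example boundaries."""
    n = len(segment_ids)
    return [[segment_ids[i] != 0 and segment_ids[i] == segment_ids[j] and j <= i
             for j in range(n)] for i in range(n)]
```

For example, `pack_examples([([5, 6], [7]), ([8], [9, 10])], max_len=12)` places both examples in a single 12-token row with segment ids 1 and 2 and two padding positions, so far fewer tokens are wasted on padding than if each short example had its own row.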

The authors refer to this procedure, instruction fine-tuning on a collection of data sources using a variety of instruction templates, as Flan (Finetuning language models).
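To illustrate what a variety of instruction templates means in practice, here is a minimal sketch assuming each task comes with a few hand-written templates and one is picked uniformly at random per example. The task names, template wording, and `format_mixture` are hypothetical; the actual Flan collection is far larger and uses its own templates and mixing proportions.

```python
import random
from typing import Dict, List, Sequence, Tuple

# Hypothetical templates: each task is phrased several ways so the model
# sees varied instructions for the same underlying data.
TEMPLATES: Dict[str, List[str]] = {
    "nli": [
        "Premise: {premise}\nHypothesis: {hypothesis}\nDoes the premise entail the hypothesis?",
        "{premise}\nBased on the paragraph above, can we conclude that \"{hypothesis}\"?",
    ],
    "translation": [
        "Translate to German: {text}",
        "{text}\nHow would you say this in German?",
    ],
}

# One raw example: the fields a template fills in, plus the target text.
RawExample = Tuple[Dict[str, str], str]


def format_mixture(sources: Dict[str, Sequence[RawExample]],
                   seed: int = 0) -> List[Tuple[str, str]]:
    """Render every raw example with a randomly chosen template for its task,
    then shuffle all tasks together into one training mixture."""
    rng = random.Random(seed)  # seeded so the mixture is reproducible
    mixture: List[Tuple[str, str]] = []
    for task, examples in sources.items():
        templates = TEMPLATES[task]
        for fields, target in examples:
            template = rng.choice(templates)  # uniform choice is an assumption
            mixture.append((template.format(**fields), target))
    rng.shuffle(mixture)
    return mixture
```

Because the same data reaches the model under different phrasings, the model is pushed to follow the instruction itself rather than memorize a single fixed prompt format.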

Links to the paper and code:

- Paper - https://arxiv.org/abs/2210.11416

- Code - https://github.com/google-research/t5x

- Hugging Face - https://huggingface.co/docs/transformers/model_doc/flan-t5

Recall that in the previous article we introduced DiffusionDB, the first large-scale text-to-image prompt dataset, containing 2 million real-world prompt-image pairs. It opens up broad research opportunities in understanding the interplay between prompts and generative models, detecting deepfakes, and designing human-AI interaction tools that help users work with these models more easily.
