Stable LM 2 12B is a 12-billion-parameter model trained on 2 trillion tokens of data in seven languages: English, Spanish, German, Italian, French, Portuguese, and Dutch. The model comes in two variants: a base model and an instruction-tuned model. This open model can run on widely available hardware while delivering performance comparable to larger, more resource-intensive models. Testing also shows that Stable LM 2 12B excels at tool use and function calling, which makes it well suited to powering retrieval-augmented generation (RAG) systems.

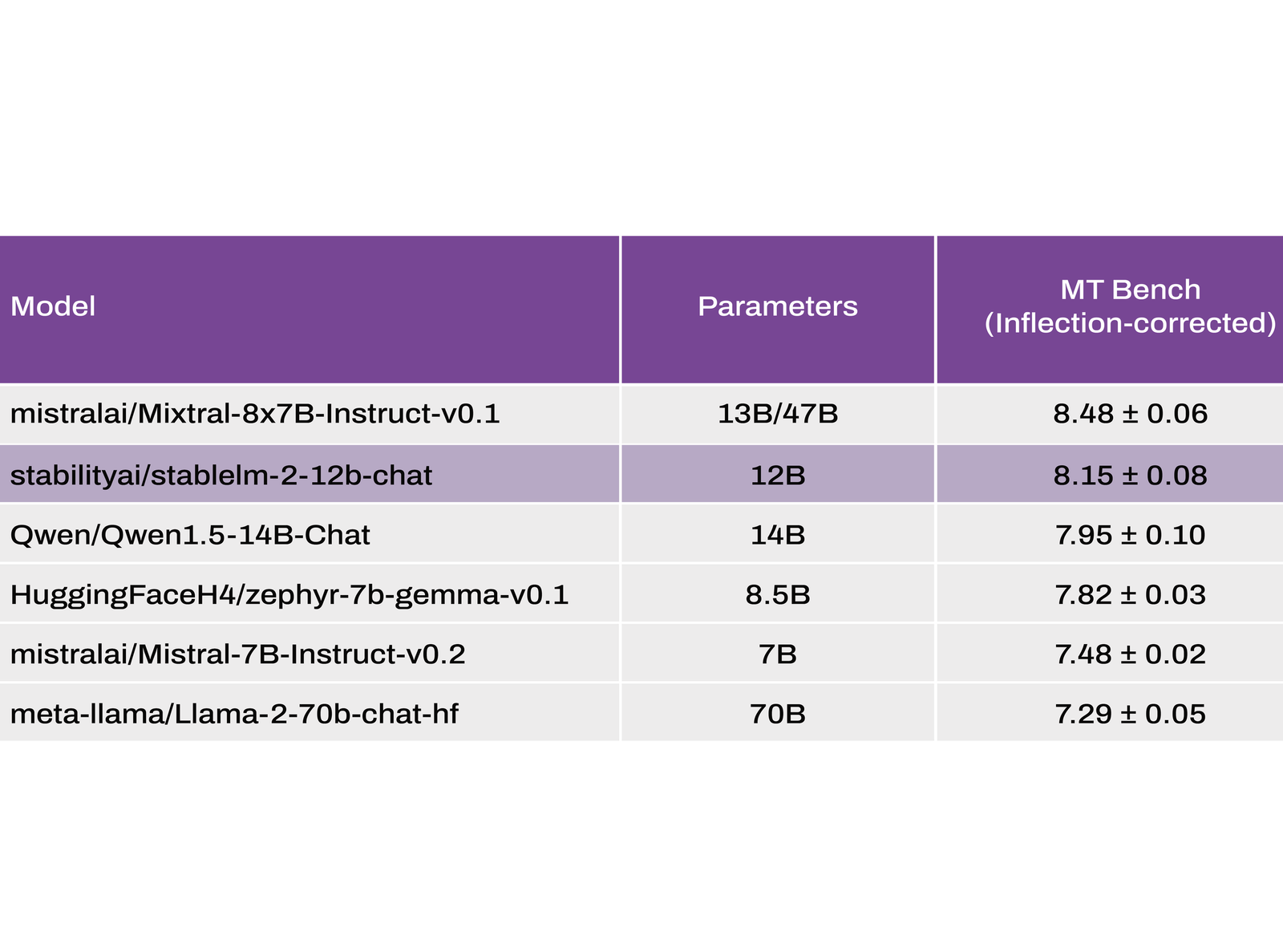

Stable LM 2 12B was benchmarked against other high-performing open models, including Mixtral, Llama-2-70B, Qwen 1.5 (14B), Gemma (8.5B), and Mistral (7B). The results place Stable LM 2 12B near the top in every comparison, reflecting its balance of performance, efficiency, memory and compute requirements, and speed. Stable LM 2 12B is available through a Stability AI Membership for both commercial and non-commercial use.

Alongside the Stable LM 2 12B announcement, Stability AI updated its existing Stable LM 2 1.6B model with improved conversational capabilities in the same seven languages, while keeping its modest system requirements virtually unchanged.
