Universal Guidance for Diffusion Models

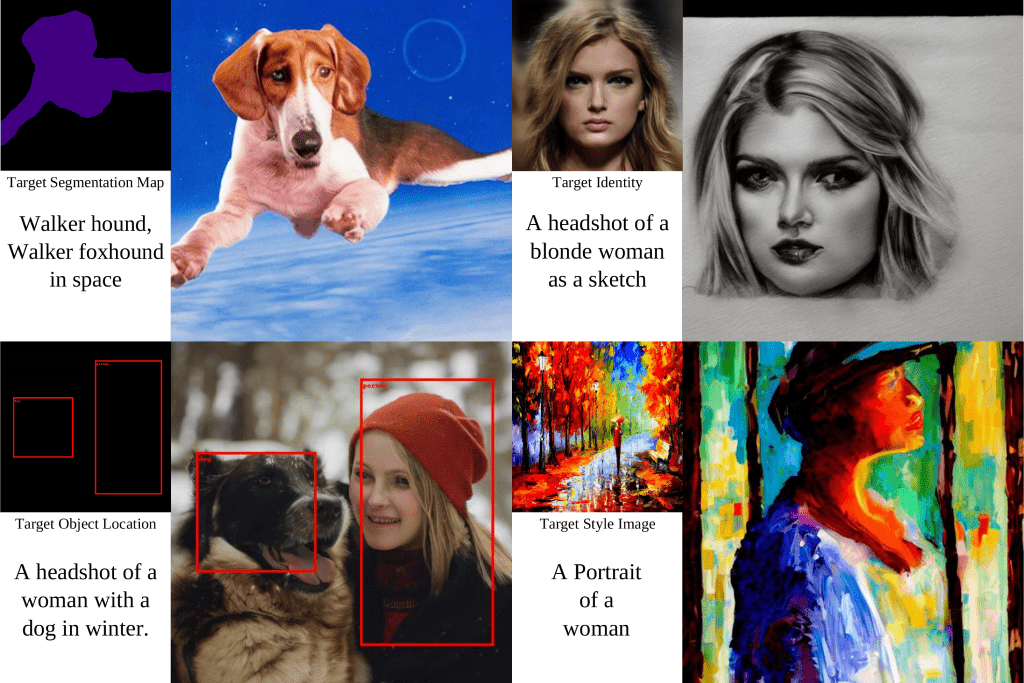

Typical diffusion models cannot be conditioned on other modalities without retraining. This work presents a universal guidance algorithm that enables diffusion models to be controlled by arbitrary guidance modalities without the need to retrain any use-specific components.
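To make the idea concrete, here is a minimal sketch of how a guidance signal could steer a frozen denoiser at sampling time. It is an illustration under stated assumptions, not the authors' implementation. The names `eps_model`, `guidance_loss`, `alpha_bar_t`, and `strength` are hypothetical, the denoiser is a toy stand-in, and the gradient is taken by finite differences so that the example needs only NumPy.

```python
import numpy as np

def predict_clean(z_t, eps, alpha_bar_t):
    """Estimate the clean sample from a noisy one, given predicted noise."""
    return (z_t - np.sqrt(1.0 - alpha_bar_t) * eps) / np.sqrt(alpha_bar_t)

def numerical_grad(fn, x, h=1e-5):
    """Central-difference gradient of a scalar function fn at x."""
    grad = np.zeros_like(x)
    for i in range(x.size):
        step = np.zeros_like(x)
        step.flat[i] = h
        grad.flat[i] = (fn(x + step) - fn(x - step)) / (2.0 * h)
    return grad

def guided_noise(eps_model, guidance_loss, z_t, alpha_bar_t, strength):
    """Shift the predicted noise along the gradient of a guidance loss.

    The loss is evaluated on the predicted clean sample, so the guidance
    function only ever sees (approximately) clean inputs and the denoiser
    itself is never retrained.
    """
    def loss_of_z(z):
        eps = eps_model(z, alpha_bar_t)
        return guidance_loss(predict_clean(z, eps, alpha_bar_t))

    eps = eps_model(z_t, alpha_bar_t)
    grad = numerical_grad(loss_of_z, z_t)
    return eps + strength * np.sqrt(1.0 - alpha_bar_t) * grad

# Toy setup (all hypothetical): a "denoiser" that always predicts zero noise,
# and a guidance loss that pulls the mean of the clean sample toward a target.
def eps_model(z, alpha_bar_t):
    return np.zeros_like(z)

target = 0.0
def guidance_loss(x0):
    return float((x0.mean() - target) ** 2)

z_t = np.array([1.0, 1.0])
alpha_bar_t = 0.25

eps_plain = eps_model(z_t, alpha_bar_t)
eps_guided = guided_noise(eps_model, guidance_loss, z_t, alpha_bar_t, strength=0.1)

loss_plain = guidance_loss(predict_clean(z_t, eps_plain, alpha_bar_t))
loss_guided = guidance_loss(predict_clean(z_t, eps_guided, alpha_bar_t))
```

In this toy case the guided noise estimate yields a predicted clean sample with a lower guidance loss than the unguided one. In practice the guidance function could be any off-the-shelf network (a classifier, segmenter, or detector), and the gradient would come from automatic differentiation rather than finite differences.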
