There are few domains in which LLM accuracy is as essential as in medicine, where mistakes can be a matter of life and death. Take, for instance, the case in which GPT-3 recommended tetracycline as a course of treatment for a pregnant woman, despite acknowledging the potential danger to the fetus. It therefore seems reasonable that LLMs intended for medical applications be held to a stricter standard, one designed specifically for the medical domain.

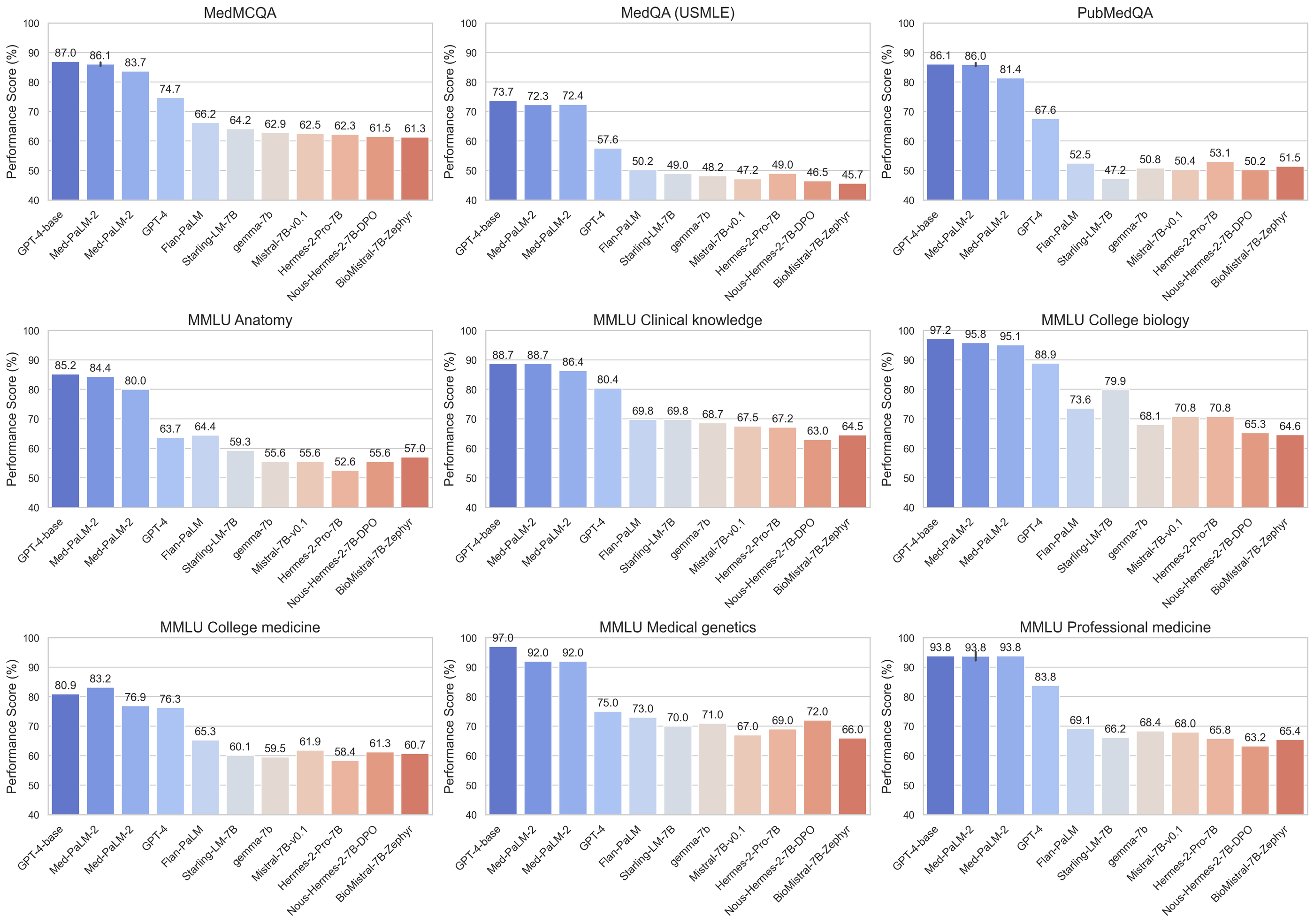

To this end, Hugging Face has launched the Open Medical-LLM Leaderboard, a standardized platform for evaluating and comparing models on a range of medical tasks and datasets. The current selection of benchmarks includes MedQA, a dataset built from multiple-choice questions from the United States Medical Licensing Examination (USMLE); MedMCQA, a dataset of question-answer pairs taken from India's medical examinations (AIIMS/NEET); the closed-domain PubMedQA dataset; and the medicine-relevant subsets of MMLU.
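To make the idea of scoring a model on such benchmarks concrete, here is a minimal sketch of per-benchmark accuracy. It assumes that every item reduces to picking one label and that the score is plain accuracy; neither is confirmed by the text above. The function name, record keys, and toy records are hypothetical and are not taken from the leaderboard's own code.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: fraction of correct answers} for scored model outputs.

    Each record is a dict with hypothetical keys:
      "group"     - benchmark (or subject) name
      "predicted" - the label the model chose, e.g. "B" or "yes"
      "answer"    - the reference label
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for record in records:
        total[record["group"]] += 1
        if record["predicted"] == record["answer"]:
            correct[record["group"]] += 1
    return {group: correct[group] / total[group] for group in total}


# Made-up toy records, for illustration only (not real benchmark items).
records = [
    {"group": "MedQA", "predicted": "A", "answer": "A"},
    {"group": "MedQA", "predicted": "C", "answer": "B"},
    {"group": "PubMedQA", "predicted": "yes", "answer": "yes"},
]
scores = accuracy_by_group(records)
```

Grouping by medical subject instead of by benchmark would give a per-specialty breakdown using the same function.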

Some early findings are already available. As expected, commercial models like GPT-4-base and Med-PaLM-2 perform strongly, while some smaller open-source models, such as Starling-LM-7B, Gemma, and Mistral-7B, remain competitive despite their more compact size. Rather surprisingly, Gemini Pro performs very well in areas such as Gastroenterology, Epidemiology, and Biostatistics, but lags significantly in other key areas, including Cardiology, Urology, and Toxicology. Because of this uneven performance, it is not an ideal choice for medical applications, at least not without further specialized training. Details on the benchmarks and instructions for model submission can be found in Hugging Face's official announcement.
