In two recently published papers, a research team at Google presented two approaches to adapting LLMs to provide accurate health and wellness information. The ultimate goal is to combine them to build personalized health assistants.

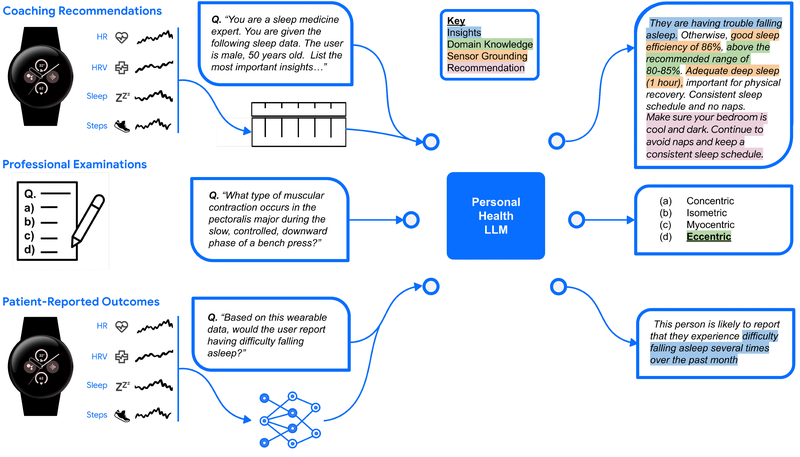

The first paper, Towards a Personal Health Large Language Model, presents an LLM fine-tuned on data derived from expert analysis and self-reported outcomes, which lets it contextualize physiological data when answering personal health-related queries. The Personal Health Large Language Model (PH-LLM) was tested on benchmark datasets evaluating three abilities: providing insights and recommendations based on physiological data, demonstrating expert-level domain knowledge, and predicting self-reported assessments of sleep quality.

Neither PH-LLM nor Gemini Ultra 1.0 was found to differ statistically from human experts in the quality of fitness advice. For sleep, human experts' advice was preferred, although the models did not lag far behind. Moreover, PH-LLM scored higher than the average human expert on domain knowledge assessments, and it achieved performance comparable to models trained specifically to predict self-reported sleep quality.

The other paper, Transforming Wearable Data into Personal Health Insights Using Large Language Model Agents, explores combining Gemini Ultra 1.0 with an agent framework, code generation, and information retrieval tools to analyze data from wearable devices such as smartwatches and deliver personalized interpretations and recommendations in response to users' health queries. The agent was evaluated on two datasets: one measuring its numerical accuracy on health queries, and another testing its reasoning and coding capabilities on open-ended health queries. The results on both were positive.
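To illustrate what code generation means for a numerical health query, here is a minimal sketch of the kind of analysis code an agent might write for a question such as "Do I sleep longer on weekends?". The data, the field names (`day`, `sleep_min`), and the function `average_sleep_by_day_type` are hypothetical; this is not the code or framework described in the paper.

```python
from datetime import date, timedelta
from statistics import mean

# Hypothetical wearable export: one record per day with total sleep in minutes.
# Field names and values are illustrative only, not taken from the papers.
start = date(2024, 1, 1)  # a Monday
sleep_minutes = [412, 398, 405, 420, 390, 470, 455, 415, 402, 399, 410, 385, 480, 460]
records = [
    {"day": start + timedelta(days=i), "sleep_min": m}
    for i, m in enumerate(sleep_minutes)
]


def average_sleep_by_day_type(rows):
    """Return mean sleep (minutes) separately for weekdays and weekends."""
    # date.weekday(): Monday == 0 ... Sunday == 6, so 5 and 6 are the weekend.
    weekday = [r["sleep_min"] for r in rows if r["day"].weekday() < 5]
    weekend = [r["sleep_min"] for r in rows if r["day"].weekday() >= 5]
    return {"weekday": mean(weekday), "weekend": mean(weekend)}


result = average_sleep_by_day_type(records)
diff = result["weekend"] - result["weekday"]
print(f"Weekday avg: {result['weekday']:.1f} min, weekend avg: {result['weekend']:.1f} min")
print(f"Weekend minus weekday: {diff:+.1f} min")
```

In an agent setting, the model would generate and run code like this against the user's actual data, then phrase the computed numbers as a personalized answer; grounding the answer in executed code is what makes numerical accuracy measurable.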
