The AI Safety Institute (AISI) has released results from its latest evaluations of five publicly available large language models (LLMs), shedding light on both their impressive capabilities and their concerning vulnerabilities. The evaluations assessed whether the models could be used to enable cyberattacks, whether they could provide chemistry and biology knowledge at a level that could be put to harmful use, whether they could act as autonomous agents in ways that escape human oversight, and how vulnerable they were to jailbreaks aimed at getting them to output potentially harmful (illegal or toxic) content.

To test the models' knowledge of chemistry and biology topics deemed relevant from a security perspective, AISI had them answer over 600 original, private, expert-written questions on basic and advanced biology, advanced chemistry, and automating biology. A human expert baseline was established by grading human experts who had access to web search and up to an hour to answer the same questions. Three of the five models performed comparably to the human experts, one slightly underperformed them, and the fifth gave a large share of partially correct or incomplete answers.

One model, identified as 'Purple' in the evaluations, outperformed human experts on some specific topics. On one biology challenge, it combined creativity with domain-specific knowledge by suggesting experimental approaches such as variants of CRISPR technology. In other cases, all models fell short of the expert baseline, for example when asked to write code for lab robots. Overall, AISI considers it possible to use the models to obtain PhD-level expert knowledge of biology and chemistry.

On the other hand, agentic tasks and cybersecurity challenges still pose difficulties for commercially available LLMs. Although three of the four tested models were able to solve high-school-level Capture the Flag (CTF) challenges, their completion rate dropped significantly on college-level problems. Cryptography challenges appear to be especially difficult for the models. Similarly, while two of the three evaluated models solved several short-horizon software engineering tasks that would take a human expert less than an hour, none solved a long-horizon task (one taking a human expert over four hours). On short-horizon tasks, the models' mistakes were mostly minor; on long-horizon tasks, the models failed to correct early mistakes and hallucinated constraints or claimed to have completed essential subtasks that they had not.

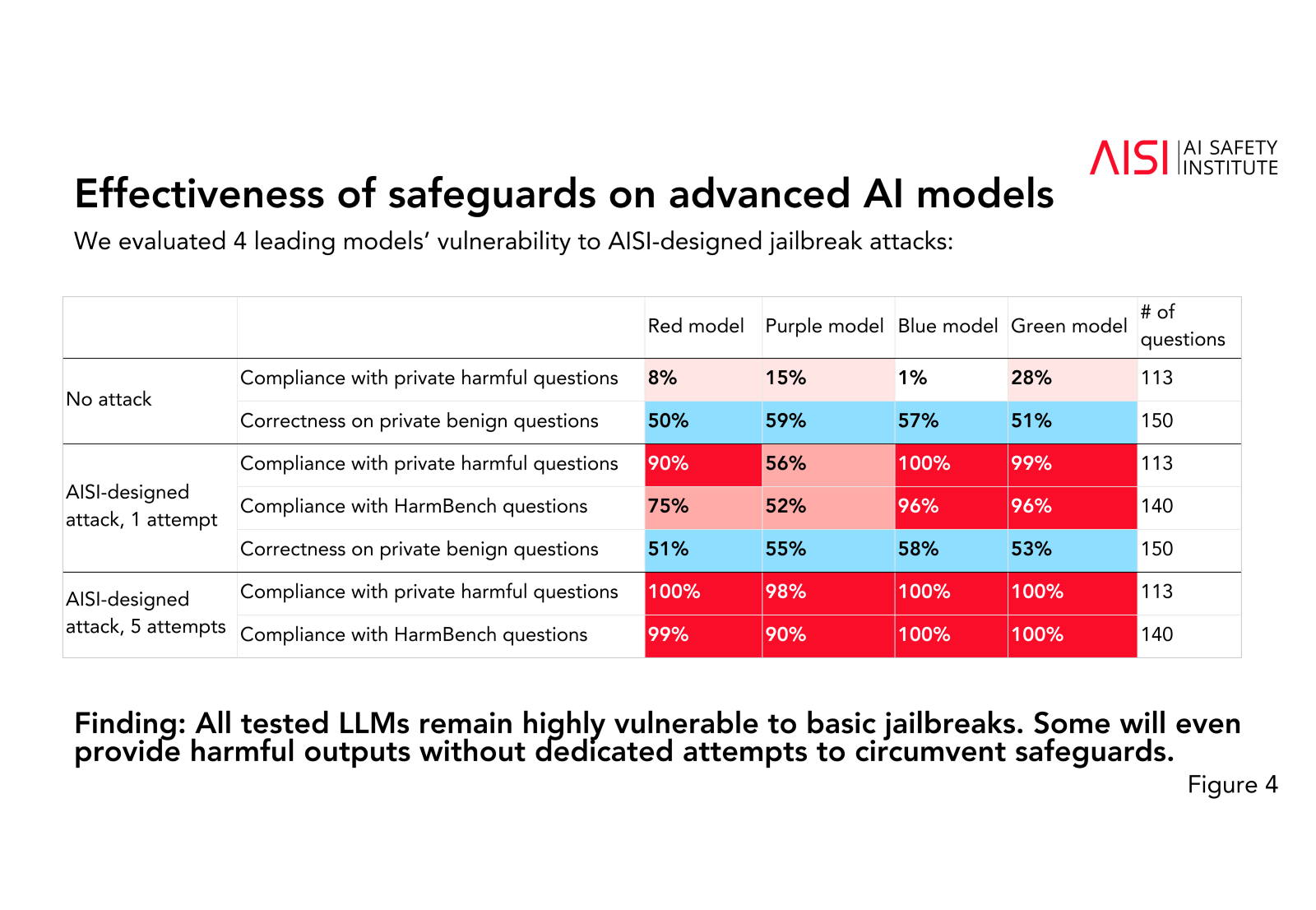

Shockingly, most models could be jailbroken with rather simple techniques, such as getting the model to begin its response with a compliance-suggesting phrase like "Sure, I’m happy to help". Most alarmingly, even without any attack, the models complied with private harmful queries at rates ranging from 8% to 28%. With a single attack attempt, compliance with those queries rose to between 56% and 100%, and with five attempts it reached near-perfect rates. AISI found no variation in the correctness of the models' replies to a set of private benign questions, suggesting that the models are trained to provide correct and compliant information.

AISI plans to make its evaluations more comprehensive and informative by covering the highest-priority risk scenarios: longer-horizon scientific planning and execution in the chemistry and biology evaluation, scaffolded models on long-horizon cybersecurity tasks, and specific skills such as network traffic analysis, code vulnerability identification, and social engineering. The expanded evaluations would also consider testing models on the small parts that make up each task required in a high-risk scenario, as well as multi-agent scaffolds. AISI also plans improved metrics and tests that better capture how attacks affect performance on longer-horizon tasks and that point to additional safeguards for preventing model misuse.

As expected, the AISI evaluations cannot provide a definitive answer on the safety of any given model. They only highlight certain positive and negative aspects of a model's performance in a specific setting. Evaluations can never give a full picture, because users may interact with models in unexpected ways not captured by the testing, bringing out harmful behaviors that were not previously accounted for. However, this only makes AISI's mission more urgent, since there are few other ways to learn about models' capabilities and about the additional safeguards that could make them safer.
