Anthropic launched advanced prompt evaluation tools for its developer console

Anthropic has introduced new features in its developer console, including prompt generation, test case creation, and evaluation tools powered by Claude. The tools streamline creating and refining high-quality prompts for AI applications and aim to make advanced prompt engineering more accessible to developers.

It is well known that prompt quality can significantly affect the performance of AI-powered applications. Developing and refining prompts can also be time-consuming, especially when done manually. To address this, Anthropic has unveiled a suite of new features for its developer console that help developers streamline the creation of high-quality prompts. These prompt evaluation tools are powered by Claude 3.5 Sonnet and are designed to generate, test, and evaluate prompts efficiently.

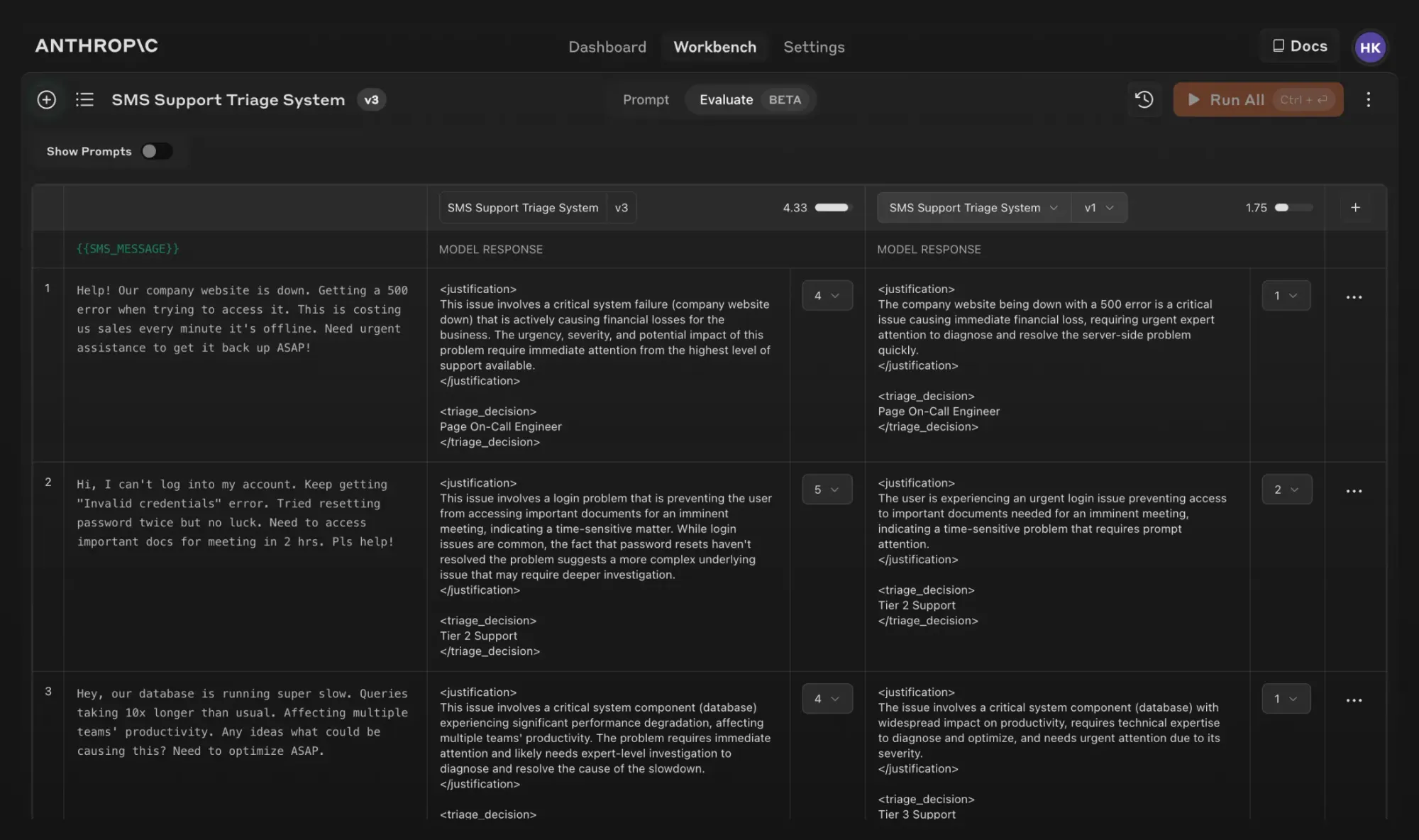

The Anthropic developer console already offers a built-in prompt generator that can produce a high-quality prompt automatically. A new test case generator now complements it by creating input variables for a prompt and running the test case to obtain the model's response. Developers can still create individual test cases manually.
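As a rough illustration of what a test case amounts to, the sketch below treats a prompt as a template with named placeholders and a test case as a set of values for those placeholders. The `{{name}}` placeholder syntax, the helper functions `find_variables` and `fill_prompt`, and the sample prompt are all assumptions made for illustration; they are not taken from the console's documentation, and no model is called.

```python
import re

# Hypothetical prompt template; the {{name}} placeholder syntax is an assumption.
PROMPT_TEMPLATE = "Summarize the following {{doc_type}} in {{max_words}} words:\n{{text}}"

# Matches placeholders such as {{doc_type}} and captures the variable name.
PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def find_variables(template: str) -> list[str]:
    """Return placeholder names in order of first appearance, without duplicates."""
    seen: list[str] = []
    for name in PLACEHOLDER.findall(template):
        if name not in seen:
            seen.append(name)
    return seen


def fill_prompt(template: str, test_case: dict[str, str]) -> str:
    """Substitute each placeholder with its test-case value; fail if any is missing."""
    missing = [v for v in find_variables(template) if v not in test_case]
    if missing:
        raise KeyError(f"test case is missing variables: {missing}")
    return PLACEHOLDER.sub(lambda m: test_case[m.group(1)], template)


# One hypothetical test case: a value for every variable in the template.
test_case = {"doc_type": "press release", "max_words": "50", "text": "Example body."}
filled = fill_prompt(PROMPT_TEMPLATE, test_case)
```

In this framing, generating a test case means producing a plausible value for every variable the prompt declares, and running it means sending the filled prompt to the model.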

To further simplify the evaluation process, the new Evaluate feature lets developers assemble a test suite from manually created test cases, cases imported from a file, or cases generated by Claude using the test case generation function. Once the suite is ready, all cases can be run at once. Developers can then iterate quickly by editing the prompt and re-running the suite, and the platform shows the responses to different prompts side by side for comparison. Subject matter experts can grade response quality on a 5-point scale, providing quantitative feedback on prompt effectiveness.
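To make the idea of quantitative feedback concrete, here is a minimal sketch of how expert grades could be summarized per prompt version outside the console. The record layout, the version labels, the sample grades, and the assumption that the 5-point scale runs from 1 to 5 are all hypothetical; the console's own export format and scoring details may differ.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical grading records: (prompt_version, test_case_id, grade).
# The 1-5 range is an assumed reading of "5-point scale".
grades = [
    ("v1", "case-1", 3),
    ("v1", "case-2", 4),
    ("v2", "case-1", 5),
    ("v2", "case-2", 4),
]


def average_grades(records):
    """Group grades by prompt version and return the mean grade for each."""
    by_version = defaultdict(list)
    for version, _case_id, grade in records:
        if not 1 <= grade <= 5:
            raise ValueError(f"grade out of range: {grade}")
        by_version[version].append(grade)
    return {version: mean(values) for version, values in by_version.items()}


summary = average_grades(grades)
# Pick the prompt version with the highest mean grade.
best = max(summary, key=summary.get)
```

A simple mean is only one way to compare prompt versions; with a small test suite, looking at per-case differences is likely more informative than a single average.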

The new features are now available to all users of the Anthropic Console. The release reflects the company's recognition that prompt engineering plays an increasingly important role in the widespread adoption of generative AI, especially in enterprise settings.