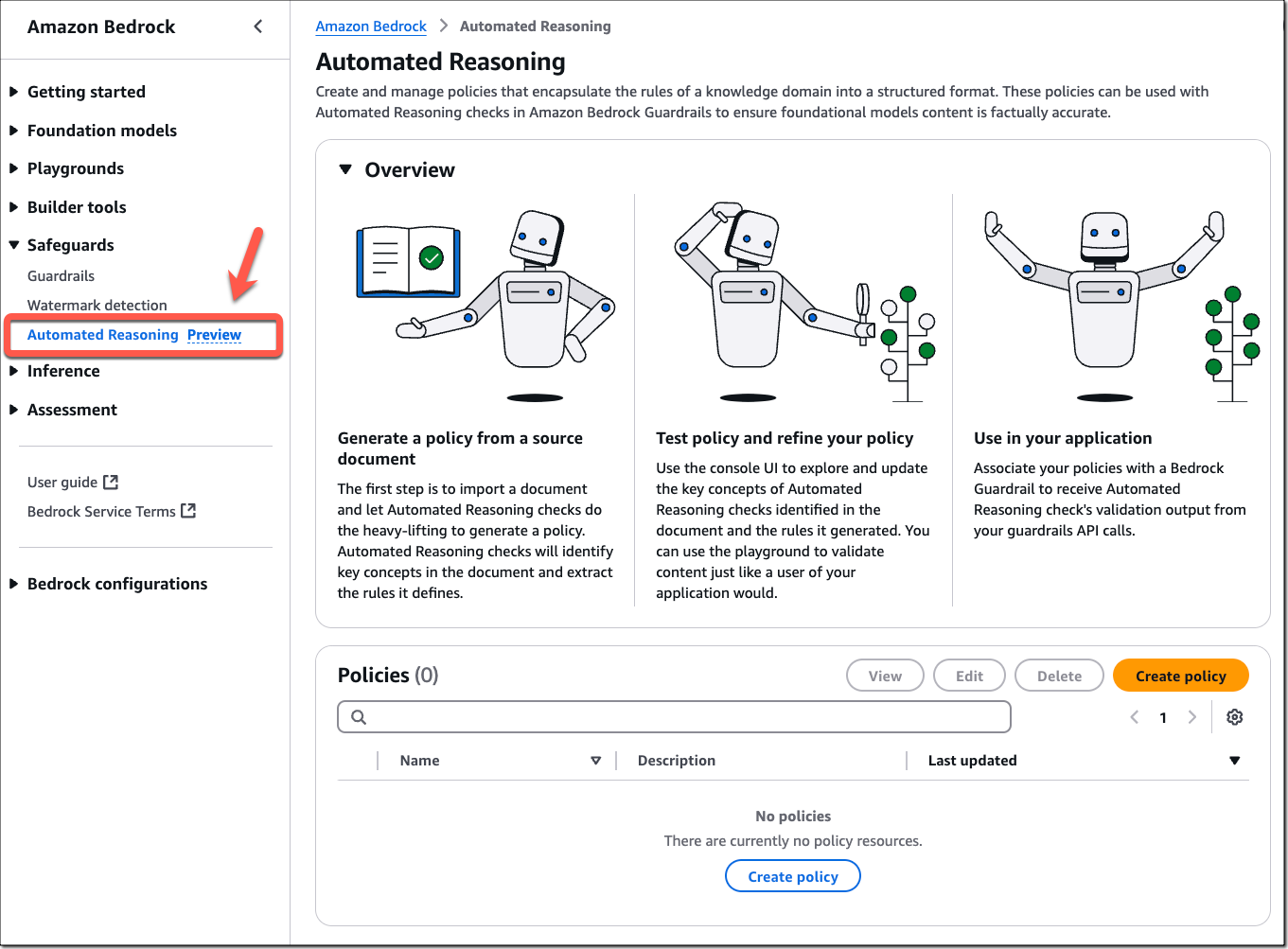

Amazon Bedrock Guardrails, AWS's toolkit for implementing safeguards that enhance the safety and privacy of generative AI applications, is getting a new feature called Automated Reasoning checks. With Automated Reasoning checks, customers can generate, edit, and implement policies to prevent hallucinations in model outputs using logic-based algorithmic verification. The feature is available in preview in the US West (Oregon) AWS Region. Access is granted on request, although AWS will soon activate a sign-up form in Amazon Bedrock.

Although Amazon claims Automated Reasoning checks are the first and only solution on the market to prevent hallucinations in generative AI applications, the feature is very similar to Microsoft's Correction feature and to a grounding feature available in Google's Vertex AI. In all three cases, the risk of hallucinations is mitigated by providing an external source of ground truth. Once the ground truth is established, model responses are analyzed for fragments that may be inaccurate or fabricated, and those fragments are verified against it. Whenever a response is found to be invalid, the model powering the application can be asked to regenerate it, and the new response is evaluated in turn.
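The validate-and-regenerate loop described above can be sketched in a few lines of Python. This is an illustration only, not the API of Amazon Bedrock, Microsoft's Correction feature, or Vertex AI: `GROUND_TRUTH`, `validate`, `answer_with_checks`, and `fake_model` are hypothetical names, and the ground truth is reduced to a simple dictionary lookup.

```python
from typing import Callable, Dict, Optional

# Hypothetical ground truth: the facts the application is allowed to assert.
GROUND_TRUTH: Dict[str, int] = {"refund_window_days": 30}


def validate(response: Dict[str, int]) -> bool:
    """Return True when every claim in the response matches the ground truth."""
    return all(GROUND_TRUTH.get(key) == value for key, value in response.items())


def answer_with_checks(
    generate: Callable[[int], Dict[str, int]], max_attempts: int = 3
) -> Optional[Dict[str, int]]:
    """Ask the model for a response, regenerating while validation fails."""
    for attempt in range(max_attempts):
        response = generate(attempt)
        if validate(response):
            return response
    return None  # no valid response within the attempt budget


def fake_model(attempt: int) -> Dict[str, int]:
    """Stand-in for a model call: wrong on the first attempt, right afterwards."""
    return {"refund_window_days": 90 if attempt == 0 else 30}


result = answer_with_checks(fake_model)
```

Capping the number of attempts is a design choice in this sketch: a model that keeps producing invalid answers should eventually yield no answer rather than loop indefinitely.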

Automated Reasoning checks support source documents of up to 1,500 tokens, or approximately two pages. Documents are uploaded through the Amazon Bedrock console and analyzed to extract variables and the logical rules that operate on them. Although the policy rules are stored as formal logic expressions, they are translated into natural language so users can edit them if necessary. Additionally, users can add an intent, which can include a brief description of the cases the policy is meant to cover or an example of the interactions it should validate. Once the policy is configured and enabled, the system validates model-generated content and explains the validation results.
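To make the idea of variables and logical rules more concrete, here is a minimal Python sketch of a policy that pairs each rule's logical form with a natural-language description. It does not reflect how Amazon Bedrock actually stores or evaluates policies; the `Rule` class, the `POLICY` list, the `validate` function, and the leave-policy rules themselves are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Rule:
    description: str                           # natural-language form a user could read
    check: Callable[[Dict[str, float]], bool]  # logical form evaluated by the checker


# Hypothetical policy, as it might be extracted from a short HR document.
POLICY: List[Rule] = [
    Rule(
        "Employees with under one year of tenure get at most 10 leave days.",
        lambda v: v["tenure_years"] >= 1 or v["leave_days"] <= 10,
    ),
    Rule(
        "No employee gets more than 25 leave days.",
        lambda v: v["leave_days"] <= 25,
    ),
]


def validate(variables: Dict[str, float]) -> List[str]:
    """Return the descriptions of the rules that the variable values violate."""
    return [rule.description for rule in POLICY if not rule.check(variables)]


# Variable values as they might be extracted from a model response.
violations = validate({"tenure_years": 0.5, "leave_days": 15})
```

An empty list means the response is consistent with the policy. A non-empty list doubles as the explanation of why validation failed, since each entry is the readable form of a violated rule.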
