ARTICLES

Dealing with DICOM Using ImageIO Python Package

In this article, the author demonstrates how to use the ImageIO Python package to read DICOM files, extract metadata and attributes, and plot image slices with interactive slider widgets from ipywidgets. Learn more about this new approach!

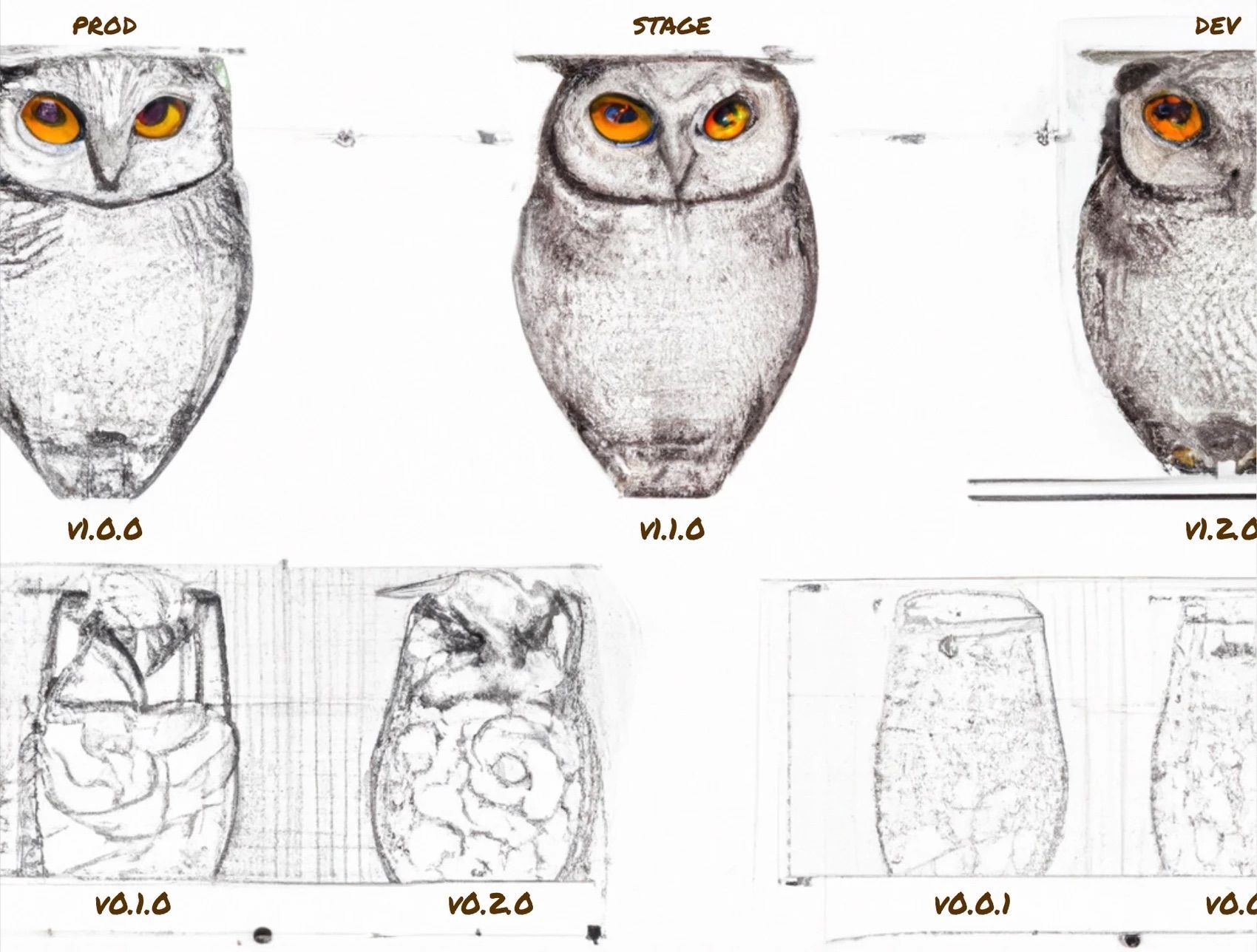

Building a GitOps ML Model Registry with DVC and GTO

For this tutorial, the authors pick a simple project with no models registered yet, to demonstrate adding a model registry on top of an existing ML project. They show how to register semantic model versions, assign stages to them, and employ CI/CD, all using a GitOps approach.

10 Metrics to Evaluate Supervised Machine Learning Models

The evaluation of models is usually an iterative process with a feedback loop between the results and the model. This article looks at ten major metrics that you can use to evaluate the performance of your machine learning models, step by step.
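
The blurb does not list the ten metrics, so the following is only an illustrative sketch that assumes four commonly used classification metrics (accuracy, precision, recall, and F1). The function names `confusion_counts` and `classification_metrics` are hypothetical and not taken from the article.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary problem."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1
        elif pred == positive:
            fp += 1
        elif truth == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall, and F1 as a dict.

    Each ratio falls back to 0.0 when its denominator is zero.
    """
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }
```

Calling `classification_metrics(y_true, y_pred)` after each training run gives a small set of numbers to compare across iterations, which is the kind of feedback loop the blurb describes.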

Scaling ML Model Development with MLflow

Model prototyping and experimenting are crucial parts of the model development journey. In this article, the author explores a possible Python SDK implementation to help data science teams keep track of all of a model’s experiments, saving everything from code to artifacts, plots, and related files.

ChatGPT and DALL·E 2 in a Panel App

With the advances made by DALL·E 2 and ChatGPT, it has become much easier to design and build applications, including chatbots. This article explains how you can use these latest AI models to quickly develop a chatbot in Python. Check it out!

How Do You Know if Your Classification Model Is Any Good?

This guide explains how to determine whether your model is successful in the context of your goals. It starts from step one of model development and continues to the ways of assessing the performance of your classification model. Check it out!

A Brief Introduction to Recurrent Neural Networks

Recurrent Neural Networks (RNNs) introduce the concept of memory to neural networks by including the dependency between data points. With this, RNNs can be trained to remember concepts based on context. Learn more!
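
As a rough illustration of what "memory" means here, the sketch below runs a single-unit recurrent cell over a sequence. It assumes the common recurrence h_t = tanh(w_x * x_t + w_h * h_{t-1} + b). The function name `rnn_forward` and the weight values are hypothetical and chosen only for demonstration, not taken from the article.

```python
import math


def rnn_forward(inputs, w_x=0.5, w_h=0.8, b=0.0, h0=0.0):
    """Run a one-unit RNN over a sequence and return every hidden state.

    The hidden state h is fed back in at each step, so the state at
    step t depends on all earlier inputs, not just on inputs[t].
    """
    h = h0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Two sequences that end with the same input but differ earlier.
with_history = rnn_forward([1.0, 0.0])
without_history = rnn_forward([0.0, 0.0])
```

The final states of the two sequences differ even though their last inputs are identical, because the first carries information forward from its earlier input. Setting `w_h=0.0` removes the recurrent connection, and the final states then coincide.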

Failed ML Project - How bad is the real estate market getting?

The author of the article dives deep into an ML project where he made several critical mistakes that led to its failure. He explains his thinking, from ideation and data collection to the model’s relevance to a real-world context. Quite an interesting read!

PAPERS & PROJECTS

Scalable Diffusion Models with Transformers

In this work, the researchers explore a new class of diffusion models based on the transformer architecture; train latent diffusion models, replacing the U-Net backbone with a transformer that operates on latent patches; and analyze the scalability of Diffusion Transformers (DiTs).

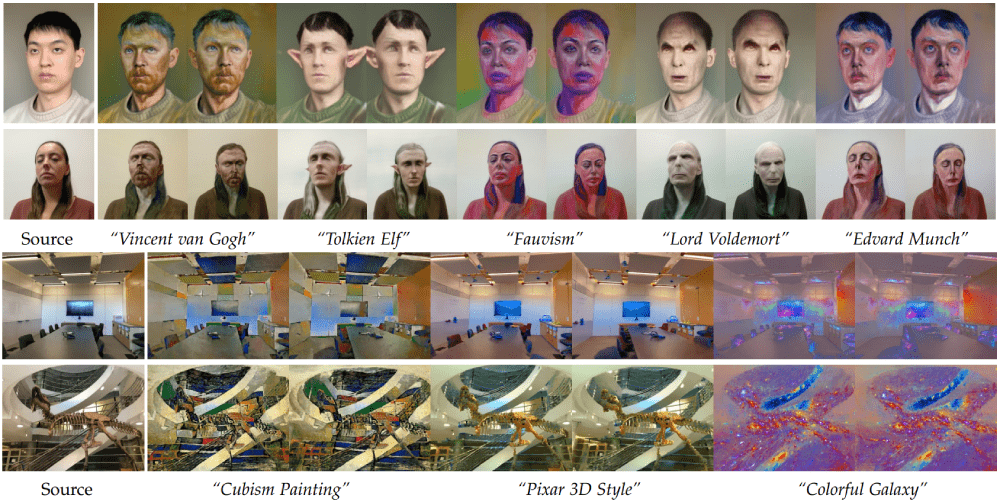

NeRF-Art: Text-Driven Neural Radiance Fields Stylization

Neural radiance fields (NeRF) enable high-quality novel view synthesis. Editing NeRF, however, remains challenging. In this paper, the authors present NeRF-Art, a text-guided NeRF stylization approach that manipulates the style of a pre-trained NeRF model with a single text prompt.

ECON: Explicit Clothed humans Obtained from Normals

ECON combines the best aspects of implicit and explicit surfaces to infer high-fidelity 3D humans, even with loose clothing or in challenging poses. ECON is more accurate than the state of the art. Perceptual studies also show that ECON’s perceived realism is better by a large margin.

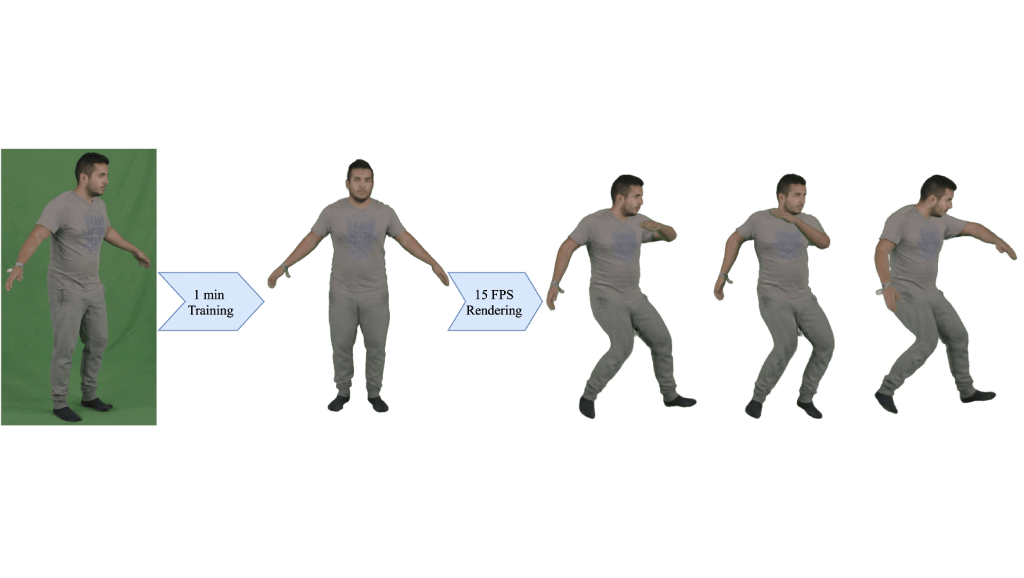

InstantAvatar: Learning Avatars from Monocular Video in 60 Seconds

InstantAvatar is a system that can reconstruct human avatars from a monocular video within seconds, and these avatars can be animated and rendered at an interactive rate. It converges 130x faster and can be trained in minutes instead of hours, way faster than competitors.

DifFace: Blind Face Restoration with Diffused Error Contraction

In this work, the researchers propose a novel method named DifFace that copes with unseen and complex degradations without complicated loss designs. They establish a posterior distribution from the observed low-quality (LQ) image to its high-quality (HQ) counterpart.

TextBox 2.0: A Text Generation Library with Pre-trained Language Models

TextBox 2.0 is a comprehensive and unified library that focuses on the use of pre-trained language models (PLMs) to facilitate research on text generation. The library covers 13 common text generation tasks and their corresponding 83 datasets, and incorporates 45 PLMs in total.

ClimateNeRF: Physically-based Neural Rendering for Extreme Climate Synthesis

ClimateNeRF is a novel NeRF-editing procedure that can fuse physical simulations with NeRF models of scenes, producing realistic movies of physical phenomena. It makes it easy to render realistic weather effects, including smog, snow, and flood. Check it out!

Audio-Driven Co-Speech Gesture Video Generation

ANGIE is a novel framework that effectively captures the reusable co-speech gesture patterns as well as fine-grained rhythmic movements. To achieve high-fidelity image sequence generation, the authors use an unsupervised motion representation instead of a structural human body.

For collaboration inquiries, including sponsoring one of the future newsletter issues, please contact us here.
