Hey folks,

Welcome to this week's edition of Data Phoenix Digest! This newsletter keeps you up to date on news from our community and summarizes the top research papers and articles to help you keep track of trends in the Data & AI world!

Be active in our community and join our Slack to discuss the latest community news, top research papers, articles, events, jobs, and more...

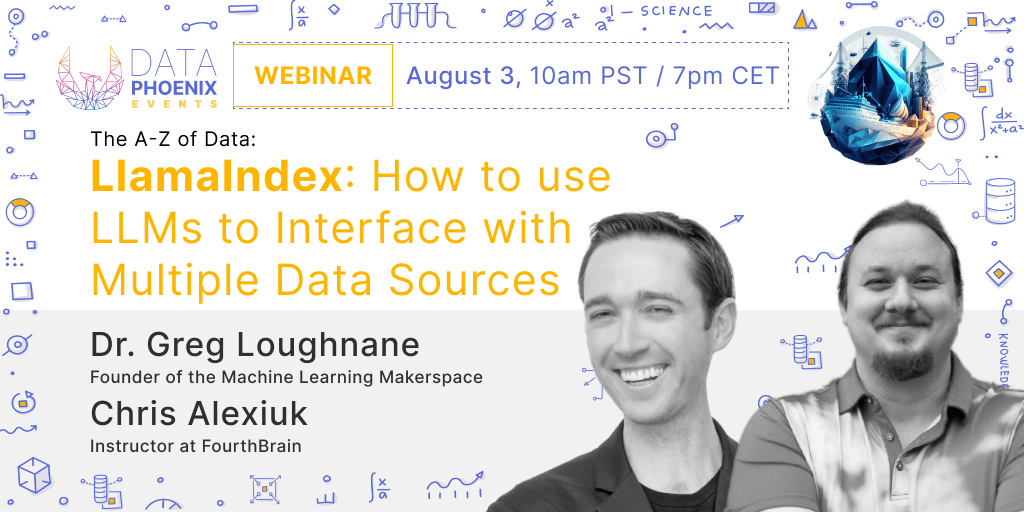

Data Phoenix upcoming webinar:

LlamaIndex: How to use LLMs to Interface with Multiple Data Sources

Following emerging Large Language Model Operations (LLM Ops) best practices in the industry, you’ll learn about the key technologies that enable Generative AI practitioners like you to build complex LLM applications. Specifically, we’ll take a deep dive into “data frameworks” like LlamaIndex, and we’ll demonstrate how to create state-of-the-art hierarchical indexes from different data sources. During the event, we will also show you how another well-known LLM Ops framework (LangChain) underlies much of the functionality of LlamaIndex. All demo code will be provided via GitHub links during and after the event!

Summary of the top articles and papers

Articles

Running Llama 2 on CPU Inference Locally for Document Q&A

In this guide, the author explores how to run quantized versions of open-source large language models (LLMs) on a local CPU for retrieval-augmented generation, also known as document Q&A, using Python. Specifically, he employs the high-performance Llama 2 chat model.

LangChain + Streamlit + Llama: Bringing Conversational AI to Your Local Machine

The author delves into an approach to integrating open-source Large Language Models (LLMs) and LangChain for free generative question answering. This method is notable because it requires no API key, making it a cost-effective solution for many users.

Different Ways of Training LLMs

This article is an overview of several key training mechanisms, namely pre-training, fine-tuning, reinforcement learning from human feedback (RLHF), and adapters. It also delves into the concept of prompting and prompt tuning.

Explaining Vector Databases in 3 Levels of Difficulty

Vector databases have recently gained significant attention, reflected in the substantial funding acquired by many vector database startups. It's likely that you've considered their importance too, which might be why you need to read this explainer article.

Optimize AWS Inferentia utilization with FastAPI and PyTorch models on Amazon EC2 Inf1 & Inf2 instances

The authors deploy FastAPI model servers on AWS Inferentia devices, which are available on Amazon EC2 Inf1 and Inf2 instances. Additionally, they present a demo of hosting a sample model deployed across all NeuronCores to achieve optimal hardware utilization.

Papers & projects

Animate-A-Story: Storytelling with Retrieval-Augmented Video Generation

In this paper, the authors propose a visual storytelling framework that leverages off-the-shelf video retrieval systems, extracts video depths as motion structure, and relies on a controllable video generation model that offers flexible controls over structure and characters. The videos are synthesized by following the structural guidance and appearance instruction.

DS-Fusion: Artistic Typography via Discriminated and Stylized Diffusion

The authors introduce a method to automatically generate artistic typography by stylizing one or more letter fonts to visually convey the semantics of an input word, while ensuring that the output remains readable. They employ the denoising generator in a Latent Diffusion Model (LDM), with the key addition of a CNN-based discriminator to adapt the input style onto the input text.

CoTracker: It is Better to Track Together

In this paper, the authors propose CoTracker, an architecture that can jointly track multiple points throughout an entire video. It draws on ideas from the optical flow and tracking literature and combines them in a new design based on a transformer network. It models the correlation of different points in time via specialized attention layers.

OpenAssistant Conversations -- Democratizing Large Language Model Alignment

OpenAssistant Conversations is a human-generated, human-annotated assistant-style conversation corpus consisting of 161,443 messages distributed across 66,497 conversation trees, in 35 different languages, annotated with 461,292 quality ratings. The corpus is a product of a worldwide crowd-sourcing effort involving over 13,500 volunteers.

Zip-NeRF: Anti-Aliased Grid-Based Neural Radiance Fields

Neural Radiance Field training can be accelerated through grid-based representations in NeRF's learned mapping from spatial coordinates to colors and volumetric density. In this paper, the authors show how ideas from rendering and signal processing can be used to construct a technique that combines mip-NeRF 360 and grid-based models.
