DeepSeek, a Chinese company pursuing AGI and the developer of the DeepSeek models that compete with the GPT family, recently released DeepSeek-Coder-V2, an open-source Mixture-of-Experts (MoE) model specializing in code tasks. DeepSeek-Coder-V2 was built by further pre-training an intermediate DeepSeek-V2 checkpoint on an additional 6 trillion tokens to strengthen its coding and mathematical reasoning without sacrificing performance on general tasks. Compared with DeepSeek-Coder-33B, it shows significant improvements in general, reasoning, and coding tasks, expands programming language support from 86 to 338, and features a 128K token context window.

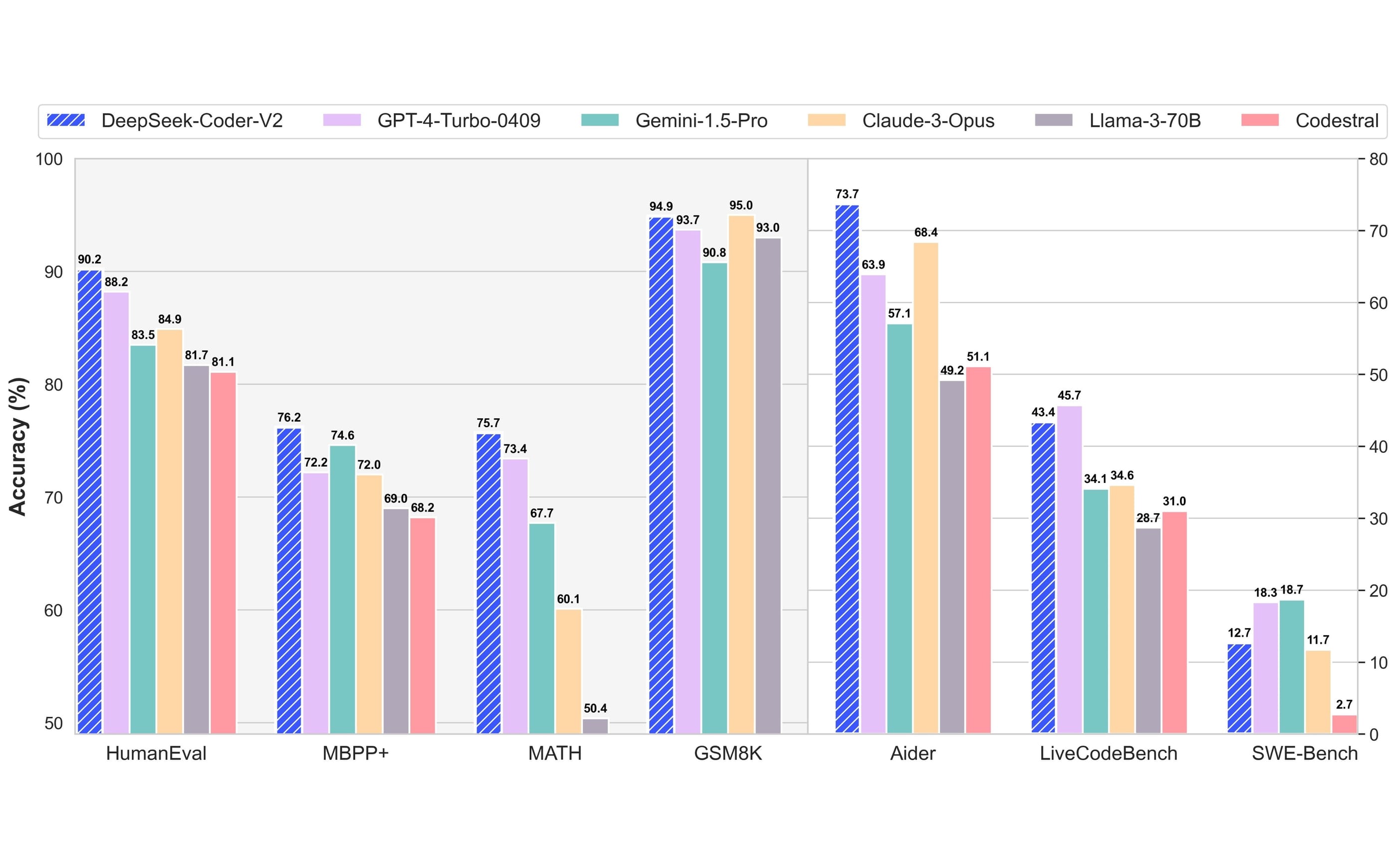

DeepSeek-Coder-V2 is available for download on Hugging Face, and comes in two versions, each including a base and an instruct model. DeepSeek-Coder-V2-Lite has a total of 16 billion parameters, with 2.4 billion active parameters, while DeepSeek-Coder-V2 has 236 billion total parameters, and 21 billion active. Both versions feature the same 128K token context window. Notably, DeepSeek-Coder-V2-Instruct is second only to GPT-4o in the HumanEval benchmark. It also performs competitively in other popular code generation and math reasoning benchmarks.
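To put the total and active parameter counts in perspective, the short Python sketch below computes the share of parameters that is active per token for each version. The figures are the ones quoted above; the dictionary layout and the function name are illustrative only.

```python
# Parameter counts in billions, as quoted in the article.
MODELS = {
    "DeepSeek-Coder-V2-Lite": {"total": 16.0, "active": 2.4},
    "DeepSeek-Coder-V2": {"total": 236.0, "active": 21.0},
}


def active_fraction(total: float, active: float) -> float:
    """Return the share of an MoE model's parameters used per token."""
    return active / total


for name, params in MODELS.items():
    share = active_fraction(params["total"], params["active"])
    print(f"{name}: {share:.1%} of parameters active per token")
```

By this measure, the Lite model activates 15% of its parameters per token and the full model roughly 9%.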

In addition to being available for download on Hugging Face, DeepSeek-Coder-V2 can be called through an OpenAI-compatible API on the DeepSeek Platform and tried as a web chat on DeepSeek's official website. Additional information, including a link to the technical paper, is available on GitHub.
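Because the API is described as OpenAI-compatible, a request should follow the familiar chat-completions shape. The following is a minimal sketch using only the Python standard library, not an official client. The base URL, the model identifier, and the `DEEPSEEK_API_KEY` environment variable name are assumptions made for illustration and should be checked against the DeepSeek Platform documentation.

```python
import json
import os
import urllib.request

# Assumptions for illustration: confirm both values in the DeepSeek Platform docs.
BASE_URL = "https://api.deepseek.com"
MODEL = "deepseek-coder"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build an OpenAI-style chat-completions request without sending it."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical environment variable name; nothing is sent if it is unset.
    key = os.environ.get("DEEPSEEK_API_KEY")
    if key:
        request = build_chat_request("Write a function that reverses a string.", key)
        with urllib.request.urlopen(request) as response:
            body = json.load(response)
        # OpenAI-style responses place the reply under choices[0].message.content.
        print(body["choices"][0]["message"]["content"])
```

Separating request construction from sending keeps the payload easy to inspect, and the same payload should work with any OpenAI-compatible client library by pointing its base URL at the platform.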
