Freeplay recently announced that it has entered public beta, which means product development teams everywhere can now sign up for a free trial while the startup continues to work with early customers. Freeplay is a suite of enterprise-ready collaborative tools for building, testing, and optimizing LLM-powered products. Testing and optimization tools for LLM applications are increasingly available, but the team behind Freeplay claims that its solution is not just another developer tool: it has a broader scope and is meant to be useful for whole teams, not only developers.

Inspired by the way Figma improved product teams' design workflows and opened up access to design artifacts, Freeplay aims to completely overhaul collaboration between developers and non-developers, changing the way teams build LLM-powered software products. Freeplay's developer SDKs let teams manage iterations of prompt-and-model combinations as versions. Whether a product is in development or in production, prompts can be adjusted and models swapped out with the confidence that each new pair is stored as a new version, leaving the previous one unaffected. Because these changes are made without revisiting the codebase, working code becomes a playground where teams can prototype new ideas.
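The announcement does not show Freeplay's SDK, so the following is only a generic Python sketch of the versioning idea described above, not Freeplay's API. It assumes a hypothetical `PromptRegistry` that stores every prompt-and-model pair as an immutable, append-only version and maps environment names (here "development" and "production", also assumptions) to whichever version is deployed there. Application code asks for "the deployed version" rather than hard-coding a prompt, which is what lets a prompt or model change without touching the code.

```python
from dataclasses import dataclass


# Hypothetical illustration only; this is NOT Freeplay's SDK.
@dataclass(frozen=True)
class PromptVersion:
    """An immutable prompt template paired with a model name."""
    template: str
    model: str


class PromptRegistry:
    """Keeps every prompt-model pair as a new, append-only version."""

    def __init__(self) -> None:
        self._versions: list[PromptVersion] = []
        self._deployed: dict[str, int] = {}  # environment name -> version index

    def save(self, template: str, model: str) -> int:
        # Saving never overwrites an existing version; it appends a new one.
        self._versions.append(PromptVersion(template, model))
        return len(self._versions) - 1

    def deploy(self, environment: str, version: int) -> None:
        # Point an environment at an existing version; other environments are untouched.
        if not 0 <= version < len(self._versions):
            raise IndexError(f"unknown version {version}")
        self._deployed[environment] = version

    def get(self, environment: str) -> PromptVersion:
        return self._versions[self._deployed[environment]]

    def render(self, environment: str, **variables: str) -> tuple[str, str]:
        # Callers ask for whatever is deployed, so swapping the prompt or
        # model requires no change to the calling code.
        version = self.get(environment)
        return version.model, version.template.format(**variables)


# Usage: production keeps running v0 while a new pair is tried in development.
registry = PromptRegistry()
v0 = registry.save("Summarize: {text}", "model-a")
registry.deploy("production", v0)
v1 = registry.save("Summarize in one sentence: {text}", "model-b")
registry.deploy("development", v1)
print(registry.render("production", text="hello"))  # ('model-a', 'Summarize: hello')
```

Making `PromptVersion` a frozen dataclass is one simple way to guarantee that a saved version can never be edited in place, so experimenting with a new pair cannot disturb what is already deployed.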

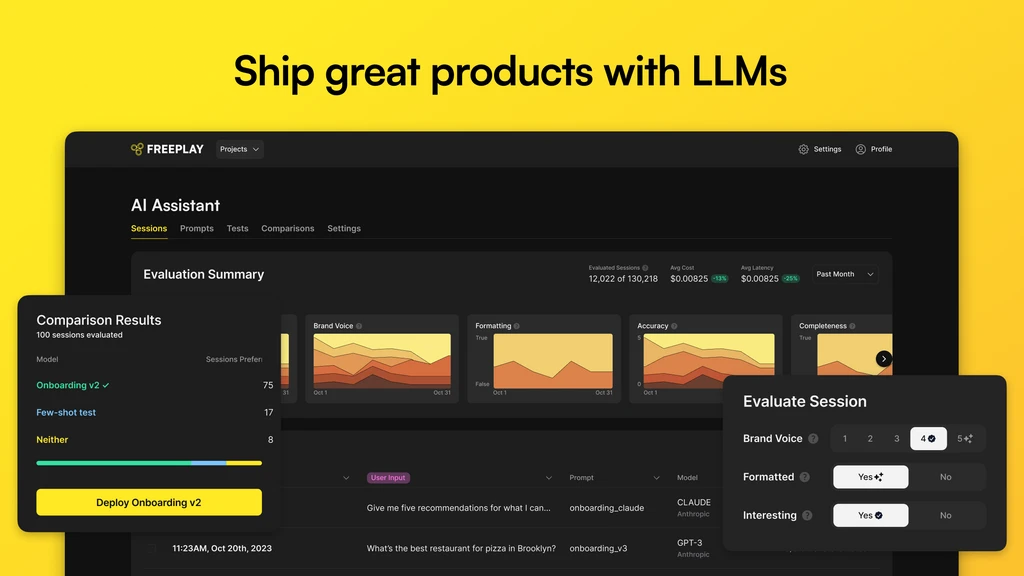

Freeplay records LLM requests and responses and makes them available in a built-in observability dashboard. This removes the need for a separate external observability tool and gives teams a fully integrated workflow for labeling results, curating datasets, and fine-tuning models, among other evaluation and optimization tasks. To make evaluation and optimization easier, Freeplay combines human and automated evaluation methods: a custom set of evaluation criteria defined for human labeling sessions can also be used for AI-assisted evaluation, so human-labeled examples can confirm or correct the automated results. Once evaluation methods are in place, the testing process can be fully automated.
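As a rough illustration of how human labels can confirm or correct automated results, here is a minimal, generic Python sketch; it is not Freeplay's implementation. It assumes pass/fail labels for a single evaluation criterion, keyed by hypothetical example IDs, and a hypothetical `reconcile` helper that lets human labels take precedence while measuring how often the automated evaluator agreed with the humans.

```python
# Hypothetical illustration only; this is NOT Freeplay's API.
def reconcile(
    auto: dict[str, bool], human: dict[str, bool]
) -> tuple[dict[str, bool], float, list[str]]:
    """Merge automated and human pass/fail labels for one criterion.

    Returns the merged labels (human labels win where both exist, and
    human-only examples are kept), the agreement rate on examples labeled
    both ways, and the IDs where the human label corrected the automated one.
    """
    overlap = [key for key in human if key in auto]
    corrected = [key for key in overlap if human[key] != auto[key]]
    # Agreement is undefined when no example was labeled by both.
    agreement = 1.0 - len(corrected) / len(overlap) if overlap else float("nan")
    merged = {**auto, **human}  # later dict wins, so human labels take precedence
    return merged, agreement, corrected


# Usage: humans reviewed two of the four automatically scored examples.
auto = {"ex1": True, "ex2": True, "ex3": False, "ex4": True}
human = {"ex1": True, "ex2": False}
merged, agreement, corrected = reconcile(auto, human)
print(agreement)   # 0.5
print(corrected)   # ['ex2']
```

A low agreement rate is a signal that the automated criterion needs refinement before a team relies on it to run tests unattended.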

All of these features come together in a platform designed for mature product development teams. Freeplay includes role-based access controls, separate workspaces per feature or project, and industry-standard security and privacy controls, in addition to single-instance deployment options. A self-hosted option is intended for organizations with strict compliance obligations, but it is currently available only as a limited pilot. An in-depth look at Freeplay can be found here, and access to the public beta is available on Freeplay's homepage.
