The Generative Media team at Google DeepMind is working on a video-to-audio (V2A) technology that takes a video as input, optionally with text prompts, and generates audio that is automatically synchronized with the on-screen action. V2A can be paired with a video generation model (such as Google's Veo) to create matching scores, sound effects, or dialogue for the model's video output. It can also be applied to more traditional footage, such as archival material or silent films, to create soundtracks that match the video's content and tone.

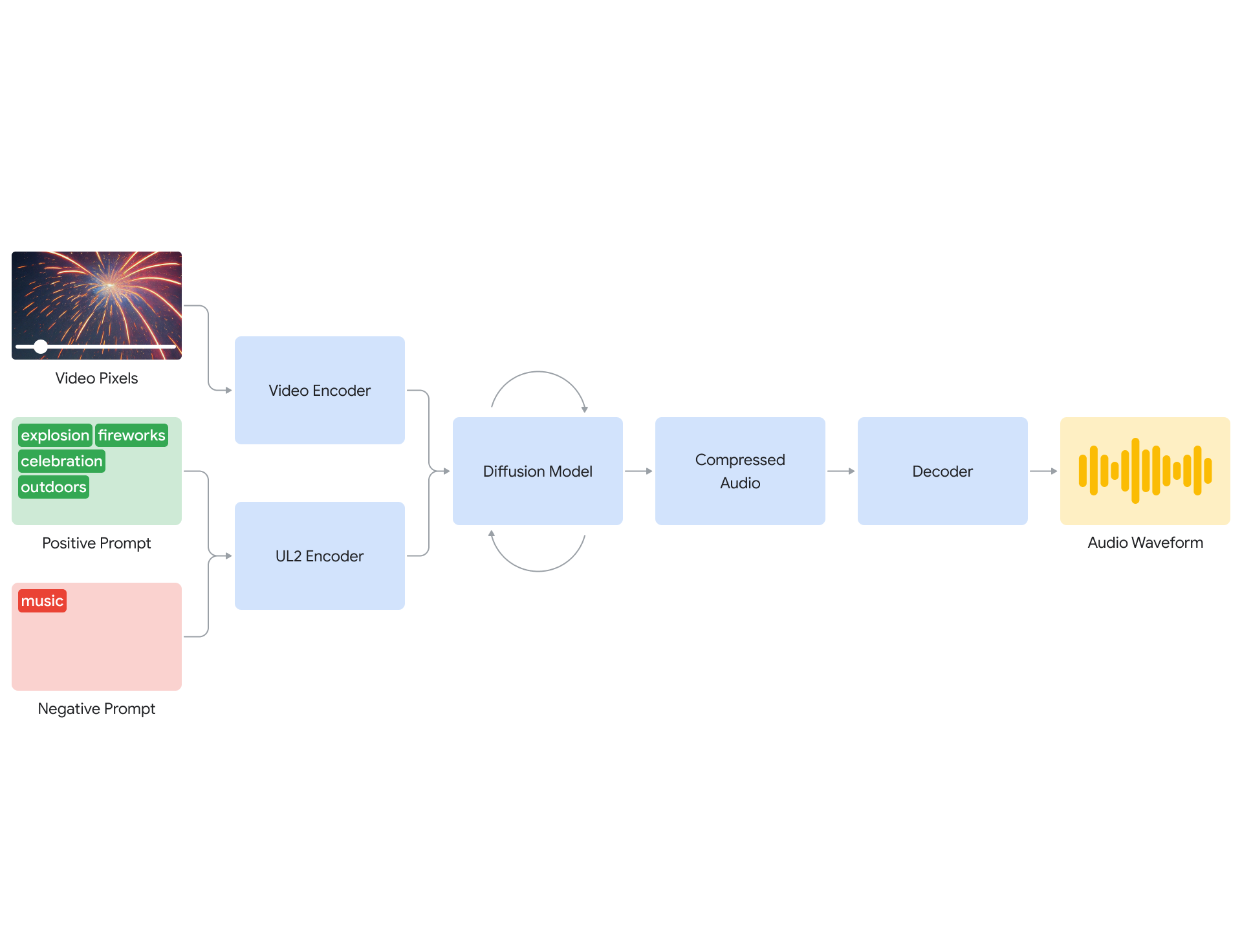

A notable feature is that V2A can generate an unlimited number of soundtracks for a given input. Positive prompts steer the system toward the desired sounds, while negative prompts specify sounds it should avoid. This lets users experiment quickly by generating several soundtrack options for a video and then choosing the most suitable one.

V2A's first step is to encode the input video into a compressed representation. A diffusion model then iteratively refines the audio, starting from random noise and guided by the visual input and any text prompts. Finally, the diffusion model's output is decoded, converted into an audio waveform, and combined with the video data.
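DeepMind has not published V2A's implementation, so the following is only a toy sketch of how positive and negative prompts could steer a diffusion-style denoiser. It assumes classifier-free guidance, a common technique in which the negative prompt's noise prediction takes the place of the unconditional one; whether V2A uses this exact scheme is an assumption. `VIDEO_LATENT`, `PROMPT_SHIFT`, `toy_denoiser`, `guided_noise`, and `sample` are hypothetical stand-ins, and the update rule is a simplified fixed-step denoising loop rather than a real diffusion sampler.

```python
import random
from typing import Dict, List, Optional

Latent = List[float]

# HYPOTHETICAL stand-ins: V2A's real video encoder and denoiser are not public.
# VIDEO_LATENT plays the role of the compressed video representation.
VIDEO_LATENT: Latent = [0.5, -0.2, 0.1, 0.0]
# Each (hypothetical) prompt shifts the "clean audio" target in latent space.
PROMPT_SHIFT: Dict[Optional[str], Latent] = {
    None: [0.0, 0.0, 0.0, 0.0],
    "car engine": [0.3, 0.0, 0.0, 0.0],
    "music": [0.0, 0.0, 0.0, 0.4],
}


def toy_denoiser(x: Latent, prompt: Optional[str]) -> Latent:
    """Predict the noise in x as its offset from a video- and prompt-conditioned target."""
    target = [v + s for v, s in zip(VIDEO_LATENT, PROMPT_SHIFT[prompt])]
    return [xi - ti for xi, ti in zip(x, target)]


def guided_noise(x: Latent, positive: Optional[str], negative: Optional[str],
                 scale: float) -> Latent:
    """Classifier-free-guidance-style combination (assumed, not confirmed for V2A):
    start from the negative-prompt prediction and move `scale` times toward the
    positive-prompt prediction."""
    eps_pos = toy_denoiser(x, positive)
    eps_neg = toy_denoiser(x, negative)
    return [n + scale * (p - n) for p, n in zip(eps_pos, eps_neg)]


def sample(positive: Optional[str] = None, negative: Optional[str] = None,
           scale: float = 2.0, steps: int = 100, step_size: float = 0.2,
           seed: int = 0) -> Latent:
    """Refine a random-noise latent by repeatedly subtracting the guided noise estimate."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in VIDEO_LATENT]  # start from random noise
    for _ in range(steps):
        eps = guided_noise(x, positive, negative, scale)
        x = [xi - step_size * ei for xi, ei in zip(x, eps)]
    return x  # a real system would decode this latent into a waveform


if __name__ == "__main__":
    print(sample(positive="car engine", negative="music"))
```

With `positive="car engine"` and `negative="music"`, the loop settles near `[1.1, -0.2, 0.1, -0.4]`: the first component is pushed past the video-only target in the "engine" direction, and the last is pushed away from the "music" direction. Calling `sample()` with no prompts converges to `VIDEO_LATENT`, which mirrors the point that text prompts are optional.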

Because V2A works from raw pixels, text prompts are optional: users can supply only a video and still get synchronized audio, with no need to manually align the generated audio with the video file. To achieve this, the model was trained with additional information alongside the raw training data: the audio was annotated using AI, and spoken dialogue was transcribed before being fed to the model. This multimodal training lets the model associate specific audio events with particular visual scenes, an essential capability for accuracy and for automatically synchronizing the generated audio with the input video.

Prompt for audio: Cars skidding, car engine throttling, angelic electronic music (Credit: Google DeepMind)

Still, V2A has a couple of limitations. The first is that audio quality depends on the quality of the input video: if the video contains noise or artifacts unlikely to appear in the training data, the quality of the generated audio drops noticeably. The second concerns lip-syncing. V2A generates speech from input transcripts, but a paired video generation model may not be conditioned on those transcripts, so the resulting output can show mouth movements that do not match the generated speech.

To prevent potential misuse, V2A's outputs include SynthID watermarks that identify the material as AI-generated. V2A is not yet publicly available, as it is still undergoing safety testing and gathering feedback from selected creators and filmmakers.
