Shortly after World Labs showcased the outputs of its AI system that generates interactive 3D scenes from 2D images, Google DeepMind announced Genie 2, a world model capable of generating playable 3D environments from a single image input. Genie 2 is primarily meant as a tool for generating rich and diverse training environments for embodied AI agents, easing an important bottleneck that slows research into AI capabilities. The research team behind Genie 2 also acknowledges that the model could eventually become the basis for creative work involving prototyping interactive scenarios.

Genie 2 is a world model designed to simulate virtual worlds and the consequences of taking actions within them. Trained on video data, it displays some additional capabilities, such as modeling object interactions, character animations, and physics, along with a degree of predictive power. To generate the showcased outputs, the research team prompted Genie 2 with images generated using Google DeepMind's Imagen 3. Although the example clips run between 10 and 20 seconds, Genie 2 can maintain consistency for up to a minute.

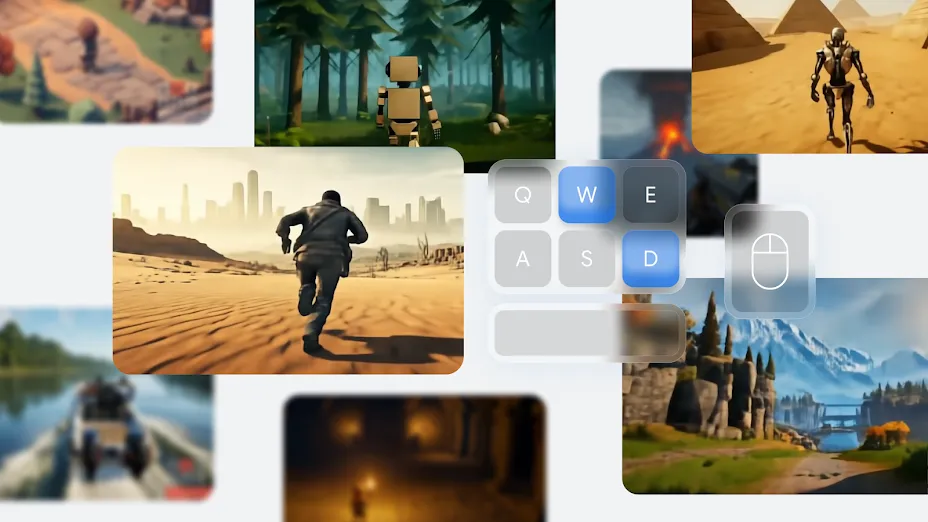

Genie 2's specific capabilities include responding to keyboard and mouse input, such as moving a character in the correct direction; generating multiple movement trajectories from a single starting frame; displaying sufficient long-horizon memory to re-render parts of the world that had left the main view; and creating diverse perspectives, including first-person, isometric, and third-person driving views. Some of these capabilities were tested using SIMA, Google DeepMind's instructable game-playing AI agent, which was created in collaboration with several game developers so it could be trained on data from multiple video games.
