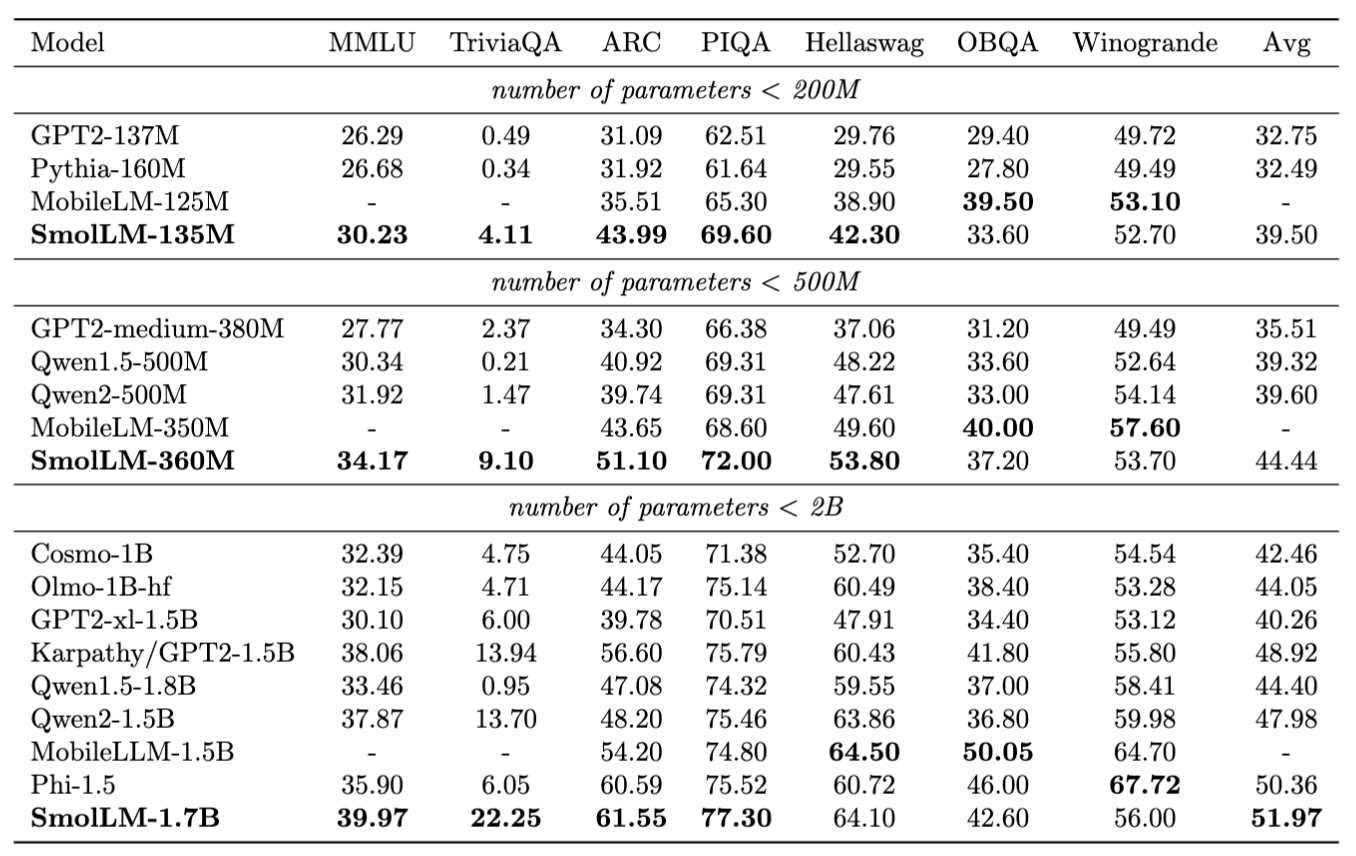

Hugging Face recently released SmolLM, a new family of state-of-the-art tiny language models inspired by the growing interest in language models that can run locally on consumer devices. SmolLM comes in three sizes: 135M, 360M, and 1.7B parameters, all trained on the SmolLM-Corpus, a dataset prepared and released by Hugging Face. According to Hugging Face, the SmolLM models outperform similar-sized models on several popular benchmarks focused on common sense reasoning and world knowledge, and also perform solidly on coding-related benchmarks.

Hugging Face claims that the SmolLM models' performance is due to the high quality of the SmolLM-Corpus dataset, which is composed of:

- Cosmopedia v2: 28B tokens of synthetic textbooks and stories generated using Mixtral-8x22B-Instruct-v0.1

- Python-Edu: 4B tokens of educational Python samples

- FineWeb-Edu: 220B tokens of educational web content
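To put the three components in proportion, the short sketch below computes each subset's share of the corpus from the token counts listed above. It describes only the corpus as listed here; the article does not say whether training sampled the subsets in these proportions, so the shares should not be read as the training mix.

```python
# Token counts (in billions) as listed in the article.
corpus_tokens_b = {
    "Cosmopedia v2": 28,
    "Python-Edu": 4,
    "FineWeb-Edu": 220,
}

total_b = sum(corpus_tokens_b.values())

# Fraction of the listed corpus contributed by each subset.
shares = {name: tokens / total_b for name, tokens in corpus_tokens_b.items()}

print(f"Total: {total_b}B tokens")
for name, share in shares.items():
    print(f"{name}: {share:.1%}")
```

By these figures, FineWeb-Edu makes up the large majority of the listed tokens, with the synthetic and code subsets contributing the rest.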

The official release announcement details exactly how the SmolLM-Corpus dataset was curated.

Beyond the dataset, architecture also plays a role. The 135M and 360M parameter SmolLM models use Grouped-Query Attention and prioritize depth over width, which improves efficiency while keeping the models small enough to run on-device. The largest model uses a more traditional architecture. All three have a 2048-token context window, which can be extended through long-context fine-tuning.
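One reason Grouped-Query Attention helps on-device inference is that several query heads share a single key/value head, which shrinks the key/value cache kept in memory during generation. The sketch below estimates that cache size with and without sharing. The layer count, head counts, and head dimension are illustrative assumptions chosen for the example, not the published SmolLM configurations; only the 2048-token context length comes from the article.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Estimate key/value cache size for one sequence.

    The factor 2 accounts for storing one key tensor and one value tensor per layer.
    bytes_per_value=2 assumes 16-bit weights/activations.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


# Illustrative (assumed) model shape, not taken from the article.
NUM_LAYERS = 30
NUM_QUERY_HEADS = 9
NUM_KV_HEADS = 3      # each K/V head is shared by 3 query heads
HEAD_DIM = 64
SEQ_LEN = 2048        # context window stated in the article

# Standard multi-head attention: one K/V head per query head.
mha_bytes = kv_cache_bytes(NUM_LAYERS, NUM_QUERY_HEADS, HEAD_DIM, SEQ_LEN)
# Grouped-query attention: fewer K/V heads than query heads.
gqa_bytes = kv_cache_bytes(NUM_LAYERS, NUM_KV_HEADS, HEAD_DIM, SEQ_LEN)

print(f"MHA cache: {mha_bytes / 2**20:.1f} MiB")
print(f"GQA cache: {gqa_bytes / 2**20:.1f} MiB")
print(f"Reduction: {mha_bytes / gqa_bytes:.1f}x")
```

With these assumed numbers the cache shrinks by the ratio of query heads to key/value heads, here a factor of three.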

Hugging Face has released both transformers and ONNX checkpoints for the SmolLM models. Additionally, there are plans to release versions of the models in GGUF format compatible with the llama.cpp library. Web demos showcasing SmolLM-135M and SmolLM-360M are available using WebGPU.
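As a rough illustration of using the transformers checkpoints, the sketch below loads the smallest model and generates a short continuation. The repository id `HuggingFaceTB/SmolLM-135M` is an assumption not stated in this article, `generate_text` is a hypothetical helper name, and the code expects the `transformers` and `torch` packages plus network access on first use.

```python
# Assumed Hugging Face Hub repository id; not given in the article.
MODEL_ID = "HuggingFaceTB/SmolLM-135M"


def generate_text(prompt: str, max_new_tokens: int = 40) -> str:
    """Load the checkpoint (downloaded on first call) and continue `prompt`."""
    # Imported lazily so that defining this helper does not require the library.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    # Tokenize the prompt into PyTorch tensors and generate a continuation.
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate_text("def fibonacci(n):")` would download the weights and return the prompt followed by the model's continuation; the output varies with the checkpoint and generation settings.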
