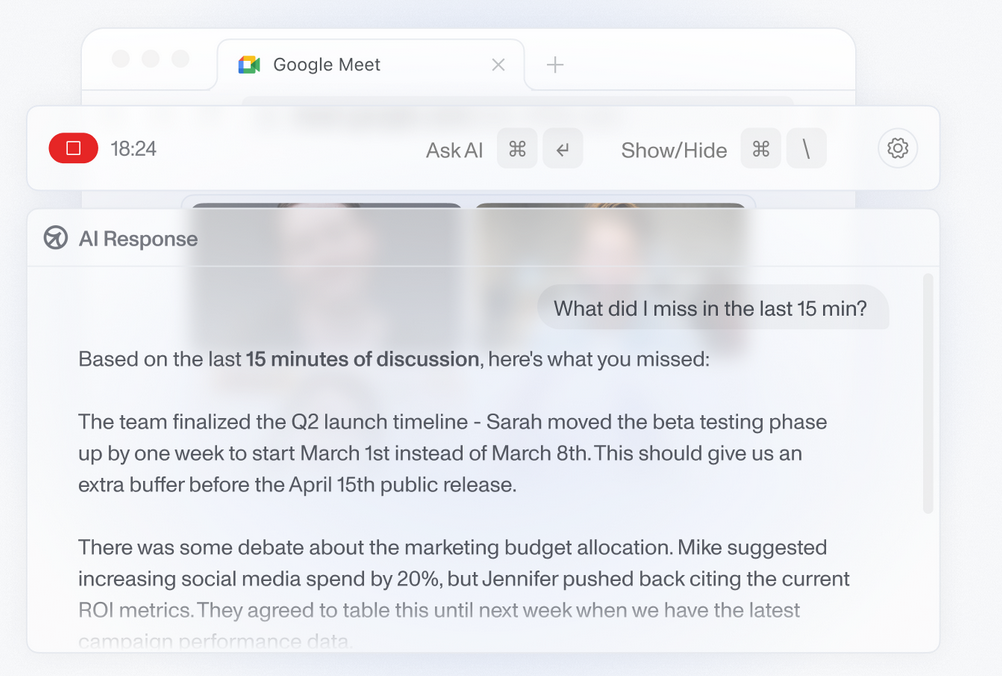

Large language models are trained on billions of data points and perform exceptionally well across a wide range of tasks. However, one area where these models often fall short is determinism. Building a prototype of an LLM application has become remarkably easy, but turning that prototype into a fully-fledged product remains challenging. Even with carefully crafted prompts, the model can behave problematically on certain inputs: it may hallucinate, produce output in the wrong structure, give toxic or biased responses, or reply with irrelevant content. The list of potential failure modes is long.

This is where a robust LLM evaluation tool like UpTrain comes in. It enables you to:

- Validate and correct the model's responses before presenting them to end-users.

- Obtain quantitative measures when experimenting with multiple prompts, model providers, and more.

- Conduct unit testing to ensure that no faulty prompts or code make their way into your production environment.

Join us for a talk that delves into how to assess the performance and quality of LLMs, and learn best practices for ensuring the reliability and accuracy of your LLM applications.

Sourabh Agrawal

Sourabh is a two-time founder in the AI/ML space. He started his career at Goldman Sachs, where he built ML models for financial markets. He then joined the autonomous driving team at Bosch/Mercedes, building state-of-the-art computer vision (CV) modules for scene understanding. He began his entrepreneurial journey in 2020, founding an AI-powered fitness startup that he scaled to more than 150K users. Throughout these roles, he was repeatedly frustrated by the lack of tools for evaluating ML models, a problem that is even more pronounced for generative AI models. To solve it, he is building UpTrain, an open-source tool to evaluate, prompt-test, and monitor LLM applications.
