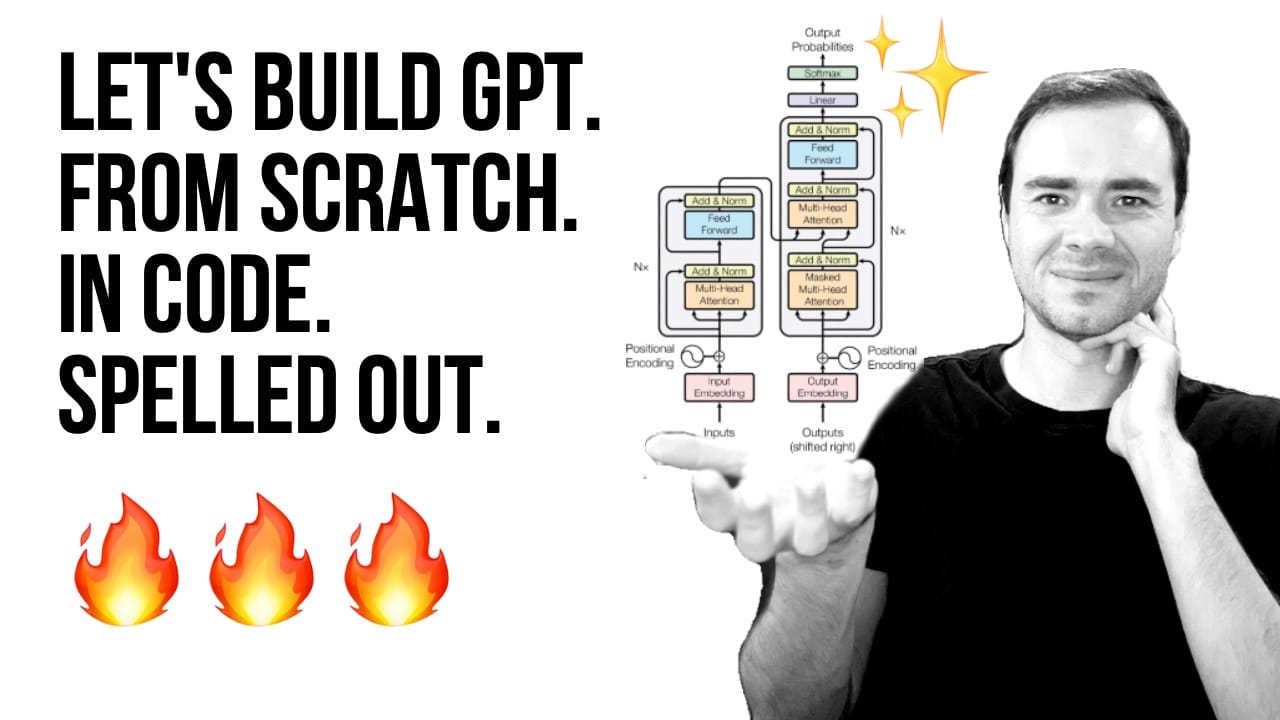

Let's build GPT: from scratch, in code, spelled out

In this video, Andrej Karpathy demonstrates how to build a Generatively Pretrained Transformer (GPT) from scratch, following the paper "Attention Is All You Need" and OpenAI's GPT-2 and GPT-3. Make sure you watch at least parts of it!
