A little over a week ago, Meta announced its new generation of Llama 4 models, marking what the company calls "the beginning of a new era of natively multimodal AI innovation." The release includes two models available immediately, Scout and Maverick, and previews Behemoth, a larger, more powerful model still in development.

Technical Innovations

The new models are Meta's first to use a mixture-of-experts (MoE) architecture. They also introduce several other technical advances: early fusion for native multimodality, an improved vision encoder, and a new training technique called MetaP.

- Native Multimodality: Early fusion let Meta build unified models that process text and image data together from the start. The models can therefore be pretrained jointly on large amounts of unlabeled text, image, and video data. Meta no longer has to train text and visual components separately, as it did with early multimodal systems, which combined at least two independent components: an LLM and a computer vision model.

- MoE Architecture: MoE layers are an alternative to dense layers, in which every parameter is used for every token. In an MoE layer, a router sends each token to a small subset of expert sub-networks, so each token activates only a fraction of the model's total parameters. This makes the Llama 4 models more compute-efficient to train and run than dense models of the same total size.

- Enhanced Training Techniques: Meta developed a new training approach it calls MetaP, which it uses to set critical model hyperparameters such as per-layer learning rates and initialization scales. Hyperparameters are the settings that determine how a model learns from data. Parameters, by contrast, are the values the model learns from the data it is fed. Good hyperparameter values are often hard to find, yet they can make a large difference to model performance. According to Meta, MetaP makes configuring these hyperparameters for Llama 4 more reliable.

- Improved Context Handling: Llama 4 Scout supports a context window of up to 10 million tokens. Meta attributes this to what it calls the iRoPE architecture. iRoPE interleaves attention layers that use no positional embeddings with layers that use rotary position embeddings (RoPE). It also applies inference-time temperature scaling to attention to improve length generalization.
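The early-fusion idea from the list above can be sketched in a few lines of Python. This is a toy built on stated assumptions. The shared width `DIM`, the 2x2 patch size, and the single linear projection that turns image patches into tokens are all hypothetical stand-ins for Llama 4's vision encoder, which the article does not describe. The sketch shows the core move: image patches and text tokens become vectors of the same width in one sequence, which a single backbone would then process.

```python
import random

DIM = 4  # hypothetical shared embedding width for text and image tokens


def embed_text(token_ids, table):
    """Look up one embedding vector per text token id."""
    return [table[t] for t in token_ids]


def image_to_patches(image, patch):
    """Split a 2-D grayscale image (list of rows) into flattened patch x patch blocks.

    Assumes the image height and width are multiples of `patch`.
    """
    h, w = len(image), len(image[0])
    patches = []
    for r in range(0, h, patch):
        for c in range(0, w, patch):
            patches.append([image[r + i][c + j] for i in range(patch) for j in range(patch)])
    return patches


def project(vec, weights):
    """Linear projection: `weights` has DIM rows, each as long as `vec`."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def early_fusion_sequence(token_ids, image, table, proj, patch=2):
    """Build one mixed sequence of image tokens followed by text tokens.

    Image patches are projected into the same DIM-wide space as text embeddings,
    so a single (hypothetical) backbone can attend across both modalities.
    """
    image_tokens = [project(p, proj) for p in image_to_patches(image, patch)]
    text_tokens = embed_text(token_ids, table)
    return image_tokens + text_tokens


if __name__ == "__main__":
    rng = random.Random(0)
    vocab_size = 10
    table = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(vocab_size)]
    proj = [[rng.uniform(-1, 1) for _ in range(2 * 2)] for _ in range(DIM)]
    image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]  # 4x4 toy image
    seq = early_fusion_sequence([1, 2, 3], image, table, proj, patch=2)
    print(len(seq), "tokens of width", len(seq[0]))  # 4 image tokens + 3 text tokens
```

In a separate-components design, the two lists would go to two different models. Here they share one sequence, which is what lets one set of weights learn from both modalities at once.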
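To make the "fraction of the total parameters" point concrete, here is a toy top-k mixture-of-experts layer in plain Python. Every detail is an illustrative assumption rather than Llama 4's design. Each expert is a small random linear map, the router is a linear scorer, and `num_experts` and `top_k` are arbitrary. For any given token, only the experts the router selects do any work, so the share of expert weights used per token is `top_k / num_experts`.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def linear(weights, x):
    """Multiply a (rows x len(x)) weight matrix by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


class ToyMoELayer:
    """Top-k MoE layer: a router scores experts, and only the top_k experts run."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.num_experts = num_experts
        self.top_k = top_k
        # Each hypothetical expert is a dim x dim linear map.
        self.experts = [random_matrix(dim, dim, rng) for _ in range(num_experts)]
        # Router: one scoring row per expert.
        self.router = random_matrix(num_experts, dim, rng)

    def forward(self, x):
        """Return (output, chosen expert indices) for a single token vector x."""
        logits = linear(self.router, x)
        chosen = sorted(range(self.num_experts), key=lambda i: logits[i], reverse=True)[: self.top_k]
        gates = softmax([logits[i] for i in chosen])  # mixing weights for the chosen experts
        out = [0.0] * len(x)
        for gate, i in zip(gates, chosen):
            y = linear(self.experts[i], x)  # unchosen experts are never evaluated
            out = [o + gate * yi for o, yi in zip(out, y)]
        return out, chosen

    def active_expert_fraction(self):
        return self.top_k / self.num_experts


layer = ToyMoELayer(dim=8, num_experts=16, top_k=1)
out, chosen = layer.forward([0.1 * i for i in range(8)])
print("expert(s) used:", chosen, "fraction of expert weights active:", layer.active_expert_fraction())
```

In a full model, attention and other shared layers run for every token. As a result, the overall active share of parameters is higher than the expert ratio computed here.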
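The parameter/hyperparameter distinction in the MetaP item can be shown with a minimal example that has nothing to do with MetaP itself, whose internals the article does not describe. The example fits a single slope `w` by gradient descent. Here `w` is the parameter learned from the data, while `learning_rate` and `steps` are hyperparameters fixed beforehand. The same data produces a good fit or a diverging one depending only on the learning rate, which is why hyperparameter choice matters.

```python
def fit_slope(xs, ys, learning_rate, steps):
    """Fit y ~ w * x by gradient descent on mean squared error.

    w is a parameter: its value is learned from the data.
    learning_rate and steps are hyperparameters: chosen before training,
    they control how w is learned.
    """
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradient of mean((w*x - y)**2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad
    return w


xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # generated with a true slope of 2

print(fit_slope(xs, ys, learning_rate=0.05, steps=100))  # converges to ~2.0
print(fit_slope(xs, ys, learning_rate=0.3, steps=50))    # diverges, far from 2.0
```

For this data, each step multiplies the error in `w` by `1 - learning_rate * 28/3`. Gradient descent therefore converges only when `learning_rate` is below 3/14 (about 0.21). Above that, each step overshoots by more than it corrects.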
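The two iRoPE ingredients named in the list above can be sketched separately. This is a generic illustration, not Meta's implementation, since the article gives no formulas. The interval at which positional embeddings are skipped (`nope_every`), the 2-D rotary embedding, and the constant `scale` factor are all hypothetical choices. On a "nope" layer the query is used as-is, so attention there carries no explicit position signal. On other layers it is rotated by an angle proportional to its position, which is how rotary position embeddings encode order.

```python
import math


def layer_plan(num_layers, nope_every):
    """Hypothetical schedule: every `nope_every`-th layer has no positional embedding."""
    return ["nope" if (i + 1) % nope_every == 0 else "rope" for i in range(num_layers)]


def rope_2d(vec, position, theta=0.1):
    """Rotate a 2-D query/key vector by an angle proportional to its position (toy RoPE)."""
    angle = position * theta
    x, y = vec
    return (x * math.cos(angle) - y * math.sin(angle),
            x * math.sin(angle) + y * math.cos(angle))


def encode(vec, position, layer_kind):
    """Apply RoPE on 'rope' layers; leave the vector unchanged on 'nope' layers."""
    return rope_2d(vec, position) if layer_kind == "rope" else vec


def attention_weights(scores, scale=1.0):
    """Softmax over attention logits after multiplying them by a scaling factor.

    A factor above 1 sharpens the distribution. This shows only the generic idea
    of scaling attention at inference time; Meta's actual rule is not given.
    """
    scaled = [s * scale for s in scores]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


print(layer_plan(8, nope_every=4))
print(attention_weights([1.0, 2.0, 3.0], scale=1.0))
print(attention_weights([1.0, 2.0, 3.0], scale=2.0))  # more weight on the top score
```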

Scout, Maverick, and Behemoth: Model Highlights

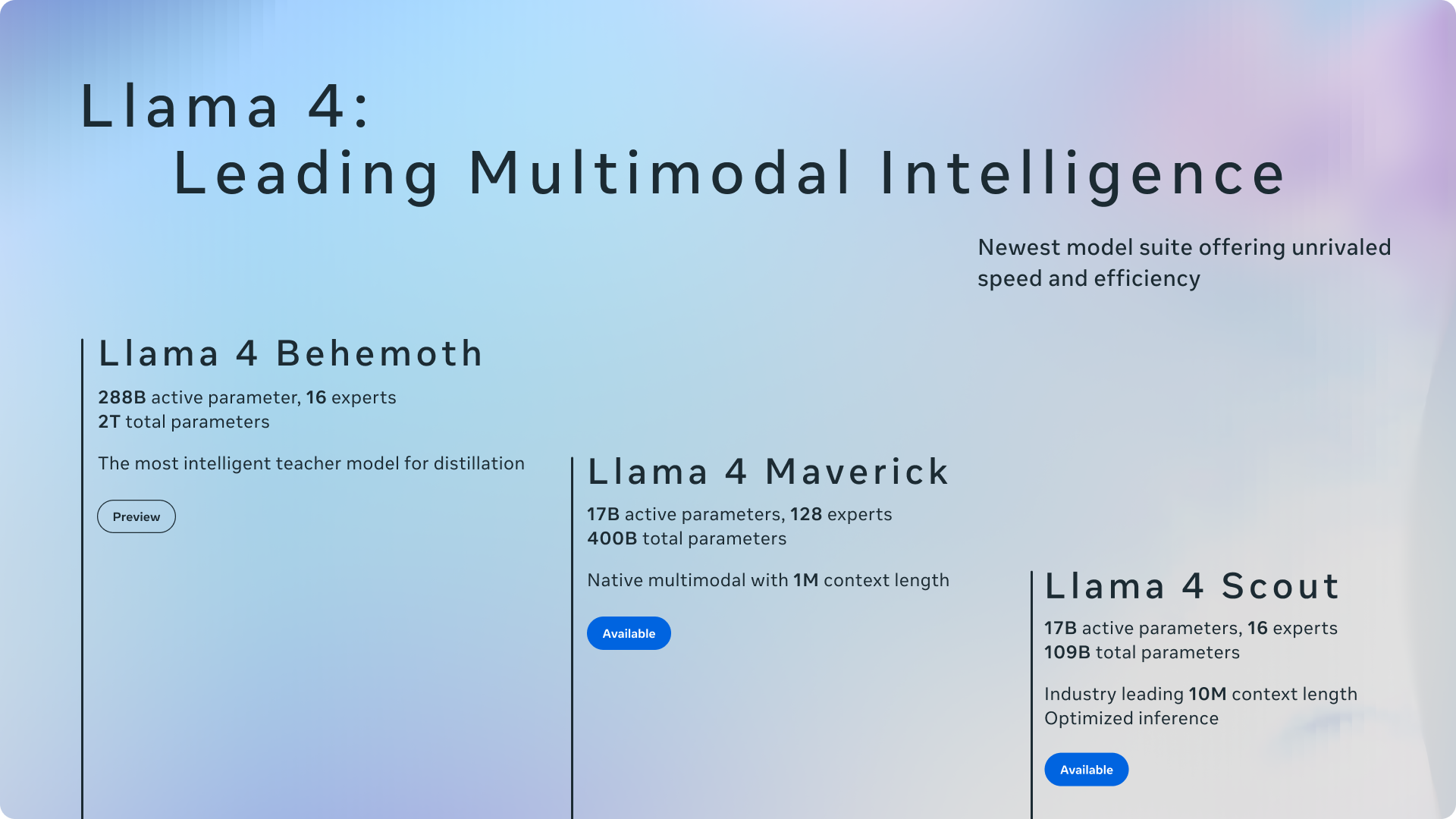

- Llama 4 Scout: A model with 17 billion active parameters and 16 experts (109B total parameters) that fits on a single NVIDIA H100 GPU with Int4 quantization. Meta describes its 10-million-token context window as industry-leading, a dramatic increase from Llama 3's 128K context length.

- Llama 4 Maverick: A model with 17 billion active parameters and 128 experts (400B total parameters), designed as Meta's "product workhorse" for general assistant and chat use cases. According to Meta, it outperforms models such as GPT-4o and Gemini 2.0 Flash across a range of benchmarks.

- Llama 4 Behemoth (preview only): What Meta calls its most powerful model yet, with 288 billion active parameters, 16 experts, and nearly two trillion total parameters. Meta claims it outperforms GPT-4.5, Claude 3.7 Sonnet, and Gemini 2.0 Pro on several STEM benchmarks.
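The figures in the list above lend themselves to some quick arithmetic. The sketch below computes what share of each model's parameters is active per token and roughly how much memory Scout's weights need at different precisions. It is a back-of-the-envelope estimate under stated assumptions. Behemoth's "nearly two trillion" is rounded to 2,000B, and the bytes per parameter are the nominal sizes for each format. The estimate covers weights only and ignores the KV cache, activations, and quantization overhead such as scale factors.

```python
# Figures taken from the model highlights; Behemoth's total is approximated as 2,000B.
MODELS = {
    "Llama 4 Scout": {"active_b": 17, "total_b": 109},
    "Llama 4 Maverick": {"active_b": 17, "total_b": 400},
    "Llama 4 Behemoth": {"active_b": 288, "total_b": 2000},
}

# Nominal storage cost per parameter, in bytes.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}

H100_MEMORY_GB = 80  # memory of the 80 GB H100 variant


def active_fraction(active_b, total_b):
    """Share of total parameters used for each token."""
    return active_b / total_b


def weight_memory_gb(total_b, precision):
    """Approximate weight storage: billions of params x bytes per param = GB (10**9 bytes)."""
    return total_b * BYTES_PER_PARAM[precision]


for name, m in MODELS.items():
    print(f"{name}: {active_fraction(m['active_b'], m['total_b']):.1%} of parameters active per token")

for precision in BYTES_PER_PARAM:
    gb = weight_memory_gb(MODELS["Llama 4 Scout"]["total_b"], precision)
    fits = "fits" if gb <= H100_MEMORY_GB else "does not fit"
    print(f"Scout weights in {precision}: {gb:.1f} GB ({fits} in {H100_MEMORY_GB} GB)")
```

At Int4, Scout's 109B parameters come to about 54.5 GB, which is consistent with Meta's claim that the model fits on a single H100. In BF16, the weights alone would need about 218 GB.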

Availability and Applications

Llama 4 Scout and Llama 4 Maverick are available for download on llama.com and Hugging Face. Users can also try them through Meta AI in WhatsApp, Messenger, and Instagram Direct, and on the Meta AI website.

Meta emphasizes that these models were designed with comprehensive safeguards, including pre- and post-training mitigations, system-level approaches like Llama Guard and Prompt Guard, and extensive evaluations and red-teaming.
