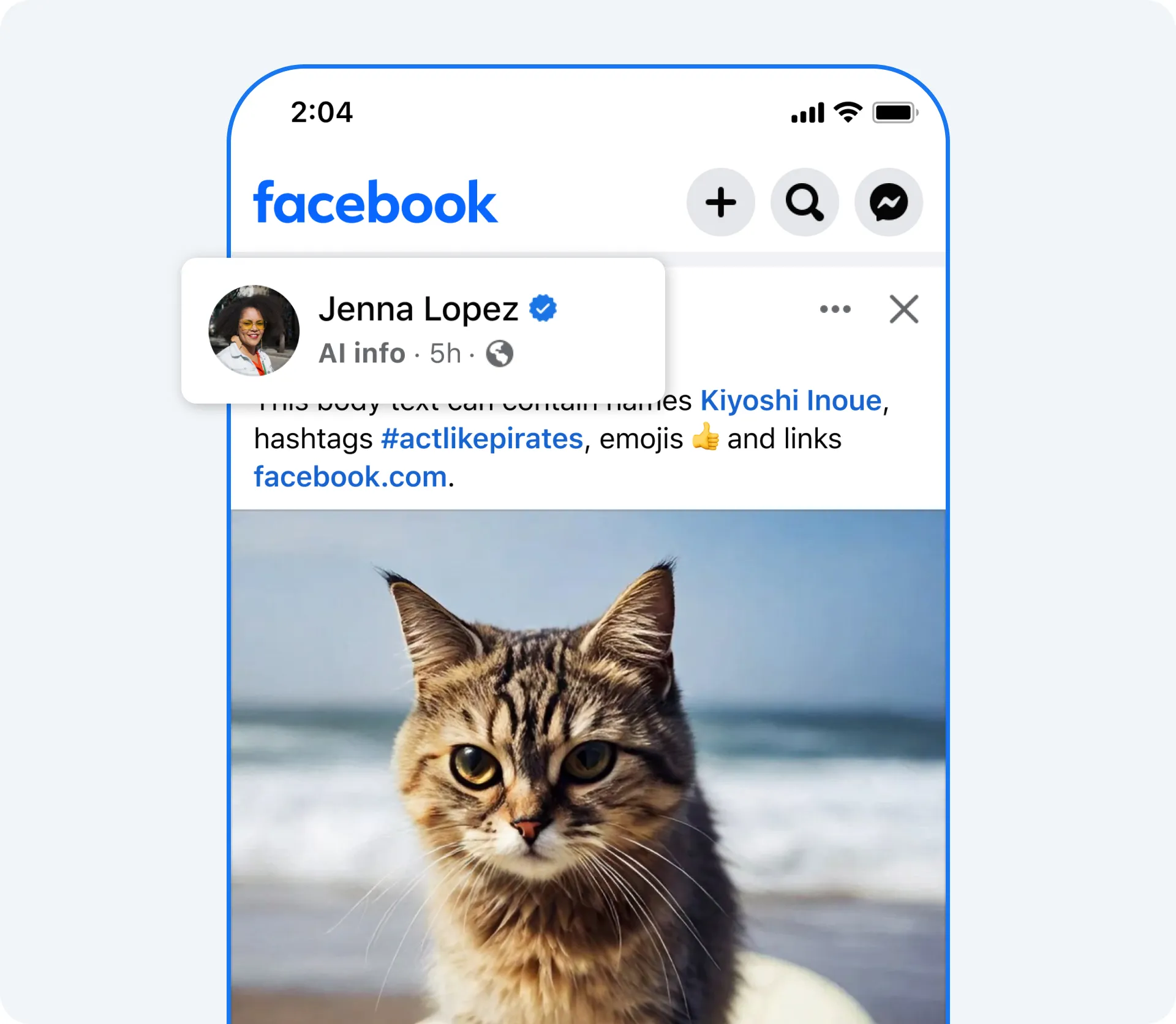

Meta modifies its media labeling strategy after real photos were tagged as 'Made with AI'

Meta has renamed its "Made with AI" label to "AI Info" after the label, applied to real photos, gave the impression that they were AI-generated. The new "AI Info" label invites users to click through for additional context, so they can get accurate information about how AI was used in the labeled image.

Earlier this year, Meta announced the "Made with AI" label for AI-generated audio, video, and images. Following feedback from its Oversight Board, Meta opted for an approach less restrictive of free speech: it would not remove generated content as long as that content did not violate the company's Community Standards. Instead, Meta introduced the label, which, together with contextual information, was meant to help users assess the content correctly and to give them context in case they came across the same content unlabeled elsewhere.

Problems with the label began recently, when reports surfaced of Meta attaching the "Made with AI" label to real photos. According to Meta, the label is applied based on watermark detection and other common industry practices for identifying AI-generated content, or when users self-disclose that they are uploading AI-generated content. One plausible explanation is that the tagged photos triggered the label because an AI-powered feature was used to edit them, but it is unclear whether this was the case for every tagged picture. Even if it was, the "Made with AI" label can be misleading, since a photo retouched with an AI-powered tool such as generative fill is not the same as an image made with AI.

To address the confusion between retouched pictures and AI-generated ones, Meta has changed the label to "AI Info". From the start, the "Made with AI" label was meant to be clicked to reveal additional context about the image. It is unclear whether, for the mistakenly tagged images, that context would have indicated that they had merely been edited with AI-powered features. Meta expects that the new name will make users more inclined to click the label and read the additional information.