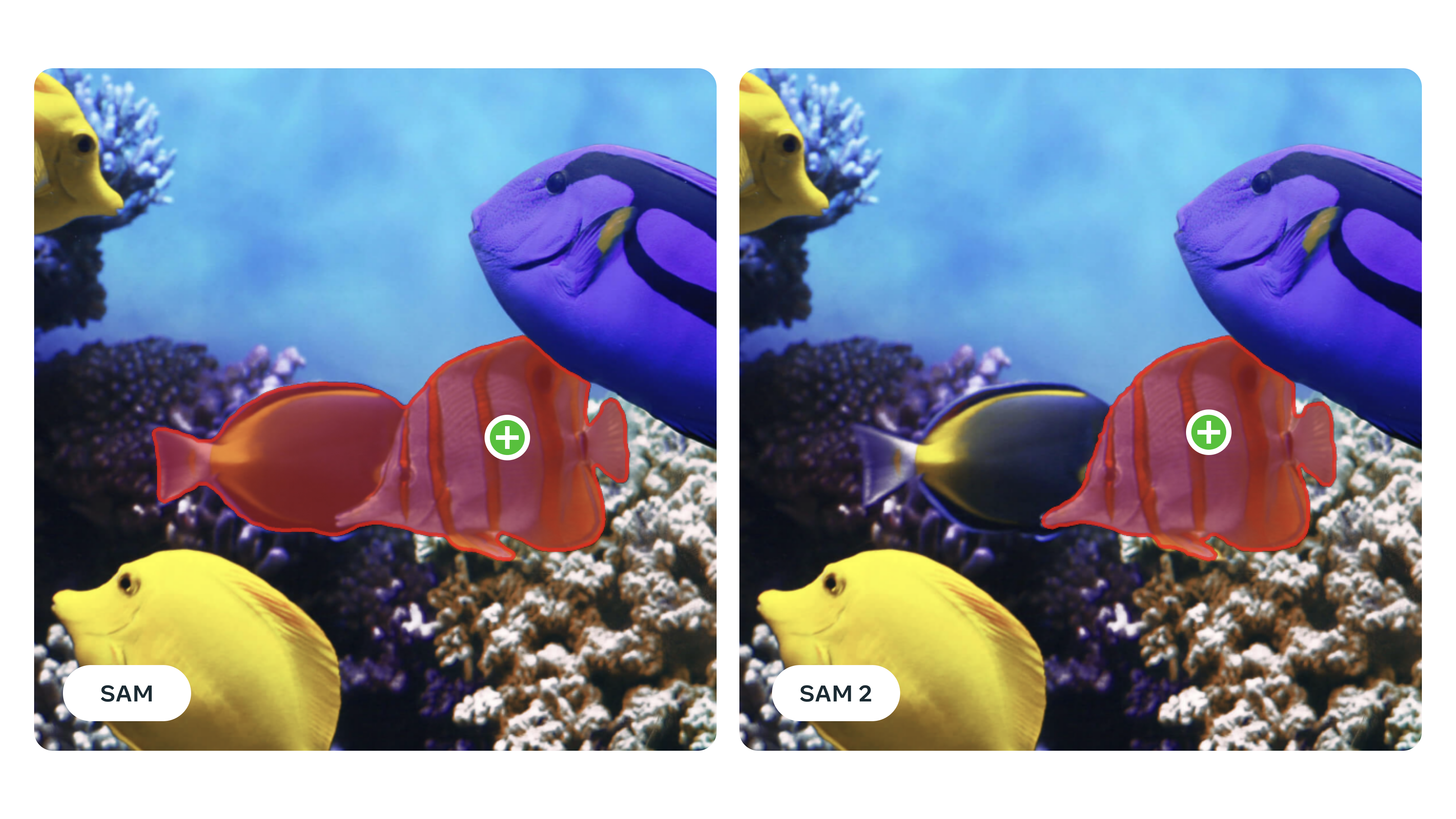

Meta Segment Anything Model 2 (SAM 2) generalizes the original Segment Anything Model (SAM), extending its segmentation capabilities from images to video. Meta has released the model under an Apache 2.0 license, together with the SA-V dataset that was used to build SAM 2 and a web-based demo that lets anyone try a version of SAM 2 for free (the demo requires Chrome or Safari). The SAM 2 evaluation code is available under a BSD-3 license.

SAM 2 is a state-of-the-art unified model for real-time, prompt-based object segmentation. It improves on the image segmentation capabilities of its predecessor and achieves better video segmentation results than any other available solution. Its open availability reflects Meta's ongoing commitment to democratizing access to breakthroughs in AI research, especially those that can unlock new possibilities. Just as SAM made in-app experiences like Backdrop and Cutouts on Instagram possible and powered object-segmentation solutions in fields as diverse as medicine, marine science, and disaster relief, SAM 2 is poised to support the next generation of AI-powered experiences, industry-leading solutions, and research breakthroughs.

Meta's official announcement is an excellent resource for an in-depth overview of the SAM 2 architecture, the methodology behind the model, the SA-V dataset creation process, and more.
