Microsoft recently announced that it would create a $2 million Societal Resilience Fund jointly with OpenAI to promote AI education and literacy among voters and vulnerable communities. Motivated by the billions of people heading to democratic elections this year, the fund will allocate grants to organizations striving to deliver AI education and create a better understanding of the capabilities of AI. The first cohort of supported organizations includes Older Adults Technology Services from AARP (OATS), the Coalition for Content Provenance and Authenticity (C2PA), the International Institute for Democracy and Electoral Assistance (International IDEA), and Partnership on AI (PAI).

Each organization has already shared more details about the project the grants will support. OATS will develop a training program to educate older adults on foundational aspects of AI. C2PA will launch an educational campaign on digital disclosure methods and best practices. International IDEA will conduct global training sessions to equip Electoral Management Bodies, civil society, and media actors with skills to navigate the opportunities and challenges presented by AI. PAI will enhance its Synthetic Media Framework to provide transparency about generative AI and set best practices for responsible AI development.

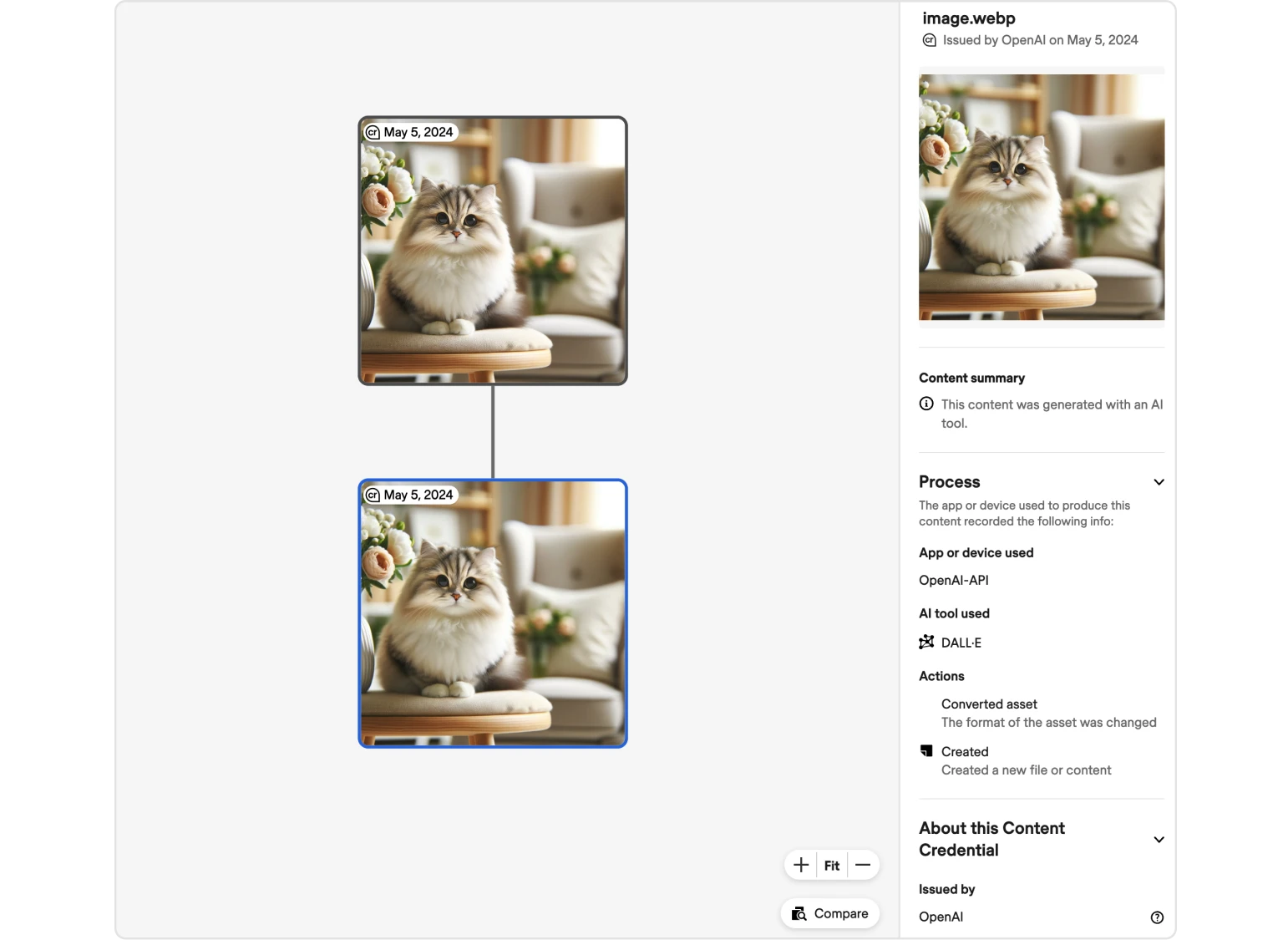

In parallel, OpenAI announced that it will join the Steering Committee of C2PA. This follows OpenAI's move earlier this year to add C2PA metadata to all images created and edited with DALL·E 3 in ChatGPT and the OpenAI API. The company also plans to integrate C2PA metadata into Sora, its video generation model, once the model is broadly available. Deceptive content can still be created without any metadata, and metadata can be stripped from properly labeled content. However, modifying or falsifying C2PA metadata is considerably harder. Thus, while C2PA metadata remains an imperfect solution, it represents an important step forward in implementing provenance standards.
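Why metadata can be stripped but not easily falsified comes down to cryptographic binding: the provenance claims are tied to a hash of the content and then signed. The sketch below illustrates only that idea and is not the C2PA format. The real specification embeds a manifest signed with certificate-based public-key signatures, while this example swaps in a stdlib HMAC with a shared secret for brevity. The key, the `make_manifest` and `verify` helpers, and the claim fields are all hypothetical.

```python
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical shared secret. Real C2PA uses X.509 certificates and
# public-key signatures, not an HMAC key; this is a simplified stand-in.
SIGNING_KEY = b"hypothetical-demo-key"


def _sign(payload: dict) -> str:
    # Canonical JSON so the same payload always produces the same bytes.
    encoded = json.dumps(payload, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, encoded, hashlib.sha256).hexdigest()


def make_manifest(content: bytes, claims: dict) -> dict:
    """Bind provenance claims to a hash of the content, then sign both."""
    payload = {"content_sha256": hashlib.sha256(content).hexdigest(), "claims": claims}
    return {"payload": payload, "signature": _sign(payload)}


def verify(content: bytes, manifest: Optional[dict]) -> str:
    if manifest is None:
        # Stripped metadata leaves nothing to check, so nothing is flagged.
        return "no provenance data"
    if not hmac.compare_digest(_sign(manifest["payload"]), manifest["signature"]):
        return "invalid: manifest was altered"
    if manifest["payload"]["content_sha256"] != hashlib.sha256(content).hexdigest():
        return "invalid: content does not match manifest"
    return "valid"


if __name__ == "__main__":
    image = b"hypothetical image bytes"
    manifest = make_manifest(image, {"generator": "DALL-E 3"})
    # Rewriting the claims without the key breaks the signature.
    forged = {"payload": {**manifest["payload"], "claims": {"generator": "camera"}},
              "signature": manifest["signature"]}
    print(verify(image, manifest))            # valid
    print(verify(image, forged))              # invalid: manifest was altered
    print(verify(b"edited bytes", manifest))  # invalid: content does not match manifest
    print(verify(image, None))                # no provenance data
```

The last case mirrors the limitation described above: removing the manifest entirely is trivial and goes undetected, whereas editing either the claims or the content without the signing key is caught.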

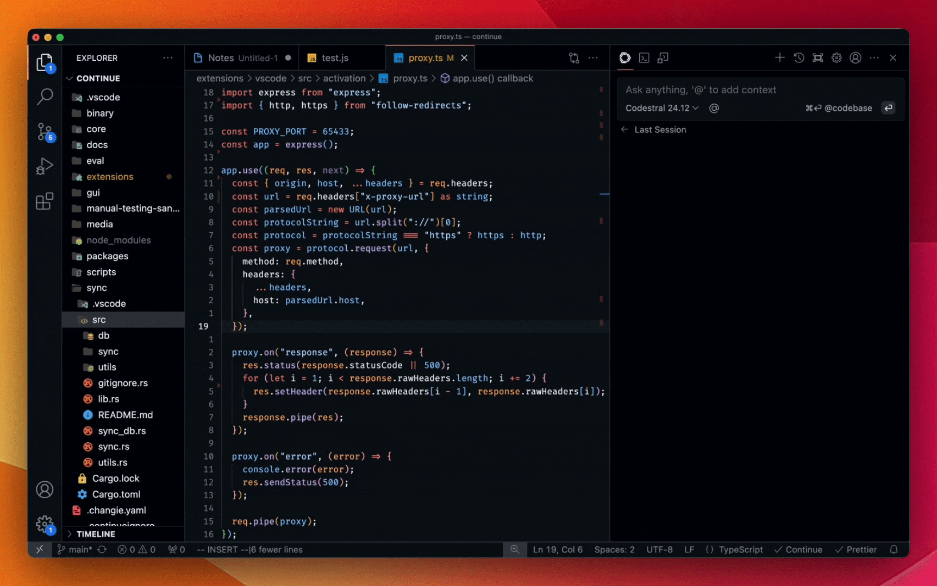

Beyond its collaboration with C2PA, OpenAI shared that it is working on additional provenance methods such as tamper-resistant watermarking and detection classifiers. The company has opened applications for research labs and research-oriented journalism nonprofits to access its classifier for detecting images created with DALL·E 3 and to provide feedback on it. When tested on a dataset containing both generated and real-world photos, the tool reliably predicted the likelihood that an image was created with DALL·E 3, incorrectly flagging fewer than ~0.5% of non-AI-generated images as DALL·E 3 output. Its performance on images from other generation models is still low: it reportedly flags only ~5-10% of them. Finally, OpenAI revealed that it has applied audio watermarking to Voice Engine, which is currently in limited research preview.
