The arrival of OpenAI's o1 and subsequent "reasoning" models on the foundation model scene seems to have triggered a new wave of competition for the highest scores on popular math and reasoning benchmarks. Phi-4, for instance, is reported to outperform larger models like Llama 3.3 70B, GPT-4o, and Qwen 2.5 72B on MATH (math competition word problems) and GPQA (graduate-level STEM questions), two popular benchmarks said to measure models' math and reasoning capabilities.

Phi-4's math problem-solving capabilities were tested on the AMC 10/12 exams from November 2024, which were published after Phi-4 had already been trained. The American Mathematics Competitions (AMC) exams are taken yearly by high school students in the US as part of the path toward the International Mathematical Olympiad. Students who score in the top 5% on the AMC exams are invited to take the American Invitational Mathematics Examination (AIME), another exam frequently used as a benchmark for testing LLMs' mathematics capabilities.

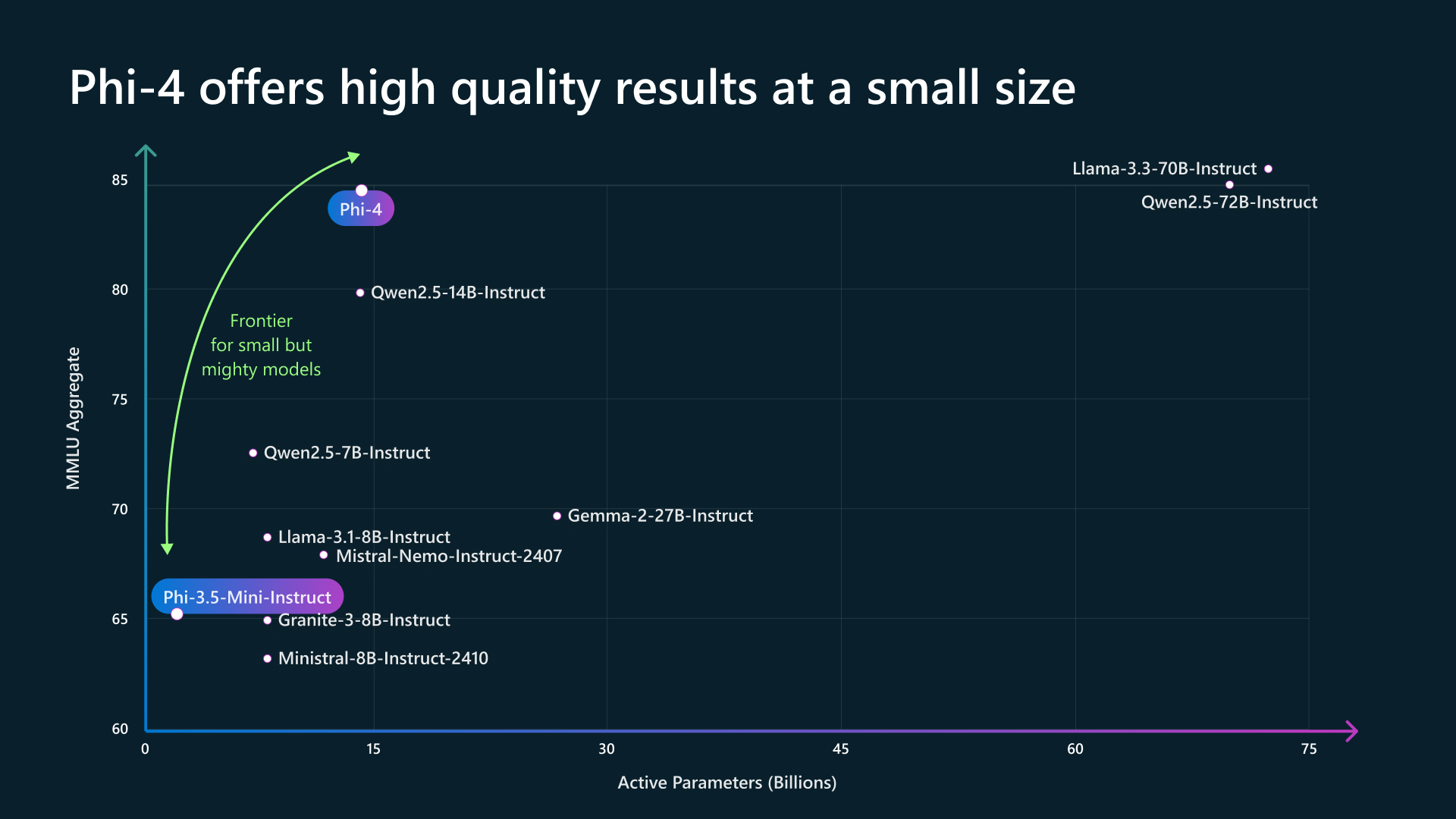

Compared to models in its size class, such as Phi-3 14B, Qwen 2.5 14B, and GPT-4o mini, Phi-4 performs strongly, surpassing its rivals on most benchmarks. However, judging by the benchmark scores alone, the gains in reasoning appear to come at a cost in areas like extraction (DROP), factual question answering (SimpleQA), and instruction following (IFEval). According to the technical paper, Phi-4's instruction-following capabilities could be improved using targeted data.

The "secret" behind Phi-4's strong performance on complex reasoning and math tasks is a mix of enhancements throughout the training process: novel techniques for generating high-quality synthetic data, an updated curriculum (relative to Phi-3), optimized data mixtures, and improvements in post-training. Of these, the first three are the most noteworthy, as the research team behind Phi-4 attributes the performance improvements over Phi-3 to the careful use of primarily synthetic data during pre-training. The team also ran a comparison from which they concluded that mixing high-quality synthetic data with strictly filtered web data yields better results than either training on synthetic data alone or shortening the exposure to synthetic data in favor of more high-quality web tokens. The full technical details can be found in the Phi-4 technical paper.

Phi-4 is currently available only on Microsoft's Azure AI Foundry under a research-only license, but it will be released on Hugging Face shortly.
