The Azure AI team at Microsoft recently released Florence-2, a groundbreaking vision foundation model now available on Hugging Face under an MIT license. Florence-2 uses a prompt-based representation for a range of computer vision and vision-language tasks, which lets a single model handle captioning, object detection, grounding, and segmentation with strong results across these task types. This sets Florence-2 apart from models built with traditional approaches to vision tasks, which tend to end up task-specific because representing vision tasks in a universal way has proven difficult.

The research team identified two broad challenges that keep foundation models from achieving a universal representation. The first is spatial hierarchy: a model must understand image-level concepts and fine-grained details in parallel, and handling these levels of granularity is the basis of its ability to accommodate spatial hierarchy within vision. The second is semantic granularity: the model must also cover the spectrum from high-level image captions to nuanced, detail-heavy descriptions.

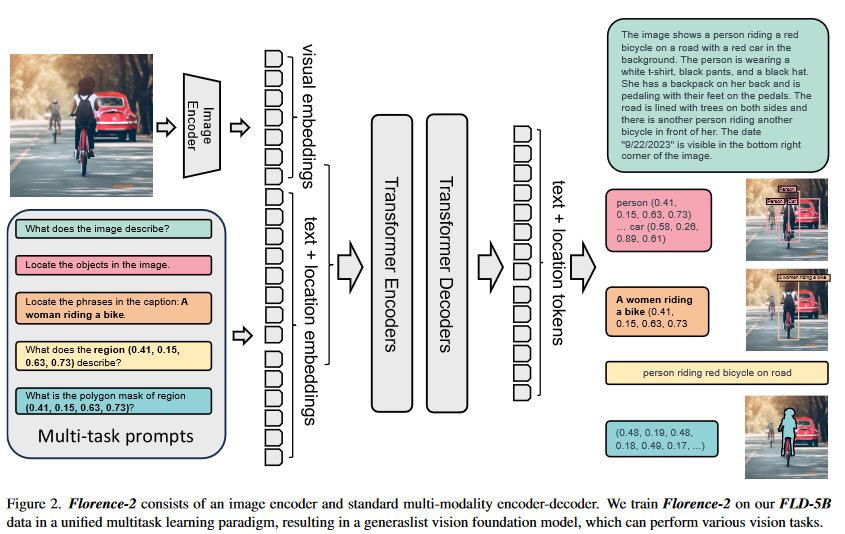

To address these challenges, one of the research team's most notable steps was using specialized models to generate FLD-5B, a massive visual dataset with 5.4 billion annotations across 126 million images. Florence-2, a sequence-to-sequence architecture that combines an image encoder with a multi-modality encoder-decoder, was trained on FLD-5B, resulting in a single model that handles diverse vision tasks without any changes to its architecture.
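One way a single sequence-to-sequence model can cover a task like object detection is by writing its answer as text. The sketch below assumes the output format described in the Florence-2 paper and used by the Hugging Face processor: box coordinates are quantized into 1,000 bins per axis and emitted as `<loc_N>` tokens after each label. The `parse_detections` and `dequantize` helpers are hypothetical, simplified illustrations rather than the official post-processing; with the released checkpoints, the model card's `processor.post_process_generation` performs this step.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Assumption: each coordinate is quantized into 1,000 bins and written as <loc_N>.
NUM_BINS = 1000

# A label (no angle brackets) followed by exactly four location tokens: x1, y1, x2, y2.
_BOX_PATTERN = re.compile(r"([^<>]+?)((?:<loc_\d+>){4})")
_LOC_PATTERN = re.compile(r"<loc_(\d+)>")


@dataclass
class Detection:
    label: str
    box: tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def dequantize(bin_index: int, size: int, num_bins: int = NUM_BINS) -> float:
    """Map a bin index back to the center of its pixel interval (assumed scheme)."""
    bin_width = size / num_bins
    return (bin_index + 0.5) * bin_width


def parse_detections(text: str, width: int, height: int) -> list[Detection]:
    """Parse 'label<loc_a><loc_b><loc_c><loc_d>...' into pixel-space boxes.

    Special tokens such as <s> and </s> are skipped because labels
    cannot contain angle brackets.
    """
    detections = []
    for label, locs in _BOX_PATTERN.findall(text):
        x1, y1, x2, y2 = (int(n) for n in _LOC_PATTERN.findall(locs))
        box = (
            dequantize(x1, width),
            dequantize(y1, height),
            dequantize(x2, width),
            dequantize(y2, height),
        )
        detections.append(Detection(label.strip(), box))
    return detections


if __name__ == "__main__":
    sample = "</s><s>car<loc_52><loc_333><loc_932><loc_774></s>"
    for det in parse_detections(sample, width=1000, height=500):
        print(det.label, det.box)
```

Because every task's answer is just a token sequence, the same decoder can emit captions, boxes, or region labels; only the prompt and the parsing step change.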

Remarkably, Florence-2 outperformed larger models, including DeepMind's 80B-parameter Flamingo, in zero-shot captioning on the COCO dataset. Florence-2 even outperformed Microsoft's grounding-focused Kosmos-2 model. When fine-tuned on public human-annotated data, Florence-2 also achieved results competitive with larger specialist models on tasks such as visual question answering. These results suggest that Florence-2 is poised to become a strong vision foundation model as the community begins to test it in real-world applications.
