Mistral AI has released Pixtral 12B, its first multimodal model. Built on the recently launched Mistral NeMo 12B, Pixtral 12B delivers competitive performance on tasks involving image inputs in a compact 24 GB package. The model understands both images and text, and it places no limit on the number or size of images supplied as input, either by URL or as base64-encoded data. It can also process documents that contain images, offers a 128k-token context window, and is released under the Apache 2.0 license.
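
To make the two input routes concrete, here is a minimal Python sketch of how a request message mixing text with a URL image and a base64-encoded image might be assembled. The helper names (`image_to_data_url`, `build_user_message`) are hypothetical, and the message layout (`type`, `text`, `image_url` fields) is an assumption modeled on common chat-completion conventions rather than a confirmed Pixtral schema. The sketch only builds the payload and sends nothing over the network.

```python
import base64
import json


def image_to_data_url(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_user_message(prompt: str, image_refs: list[str]) -> dict:
    """Build one user message mixing text with any number of image references.

    Each reference is either an http(s) URL or a data URL.
    The field names used here are an assumption, not a confirmed schema.
    """
    content = [{"type": "text", "text": prompt}]
    for ref in image_refs:
        content.append({"type": "image_url", "image_url": ref})
    return {"role": "user", "content": content}


# Placeholder bytes standing in for a real image file read from disk.
fake_png = b"\x89PNG\r\n\x1a\n"

message = build_user_message(
    "Describe both images.",
    ["https://example.com/chart.png", image_to_data_url(fake_png)],
)
print(json.dumps(message, indent=2))
```

In practice the placeholder bytes would be replaced by the contents of an actual image file, and the resulting message would be passed to whichever client or endpoint serves the model.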

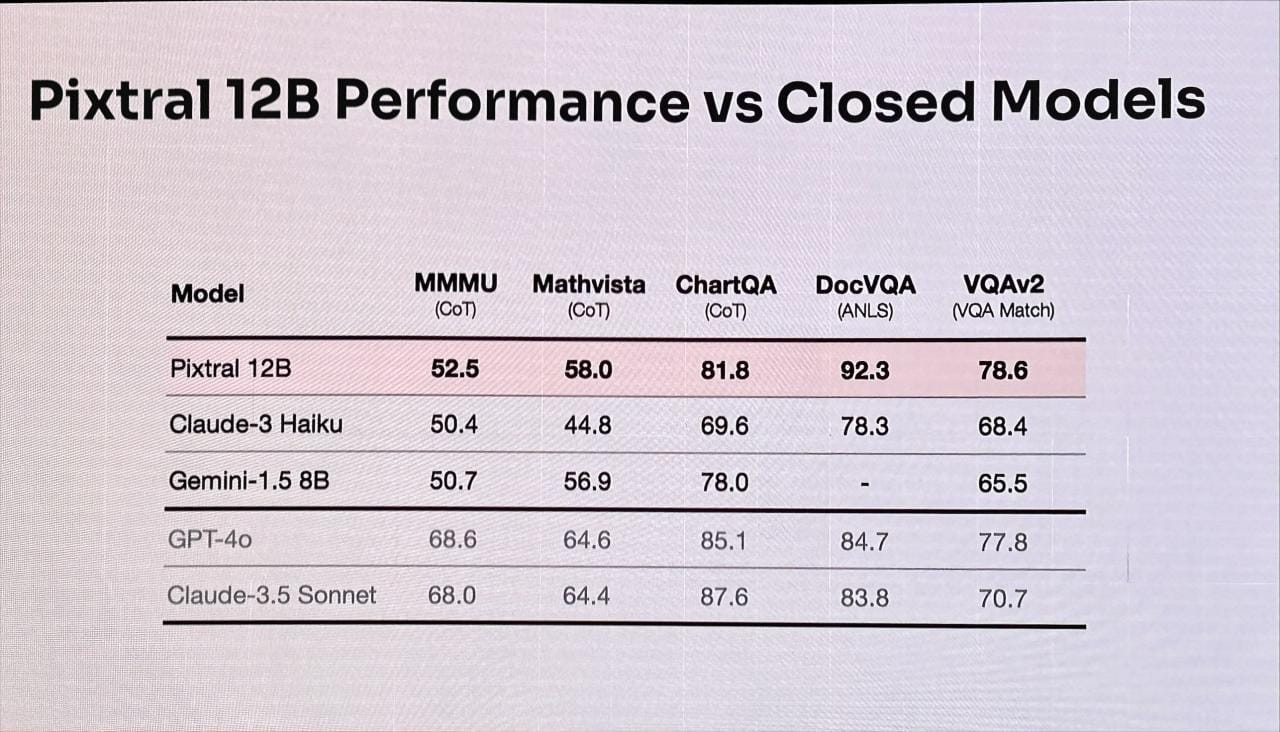

Pixtral 12B was benchmarked against some of the best open and closed-source multimodal models in its size class, including Qwen2 7B, LLaVA-OV 7B, Phi-3 Vision, Claude 3 Haiku, and Gemini 1.5 Flash 8B. Its solid results position it as an open-source alternative for use cases currently dominated by models such as Claude 3.5 Sonnet and GPT-4o, including image captioning, image-to-text transcription (OCR), data processing and extraction, and image analysis. Pixtral 12B is currently available through a torrent link, GitHub, and Hugging Face, with access through Mistral's La Plateforme and Le Chat confirmed to follow soon.
