Keeping pace with the AI industry is tough, and so is keeping up with popular culture. In this event, we’ll help you do both!

We’ll combine the most popular open-source LLM (Llama 2) with the most popular LLM Operations (LLM Ops) infrastructure tooling (LangChain) to build the most popular type of LLM application, a Retrieval Augmented Generation (RAG) or Retrieval Augmented Question Answering (RAQA) system, and use it to analyze the most popular internet phenomenon on the planet: Barbie + Oppenheimer = Barbenheimer. We’re going to have some fun with this one!

All demo code will be provided via GitHub and/or Colab links during and after the event!

Who should attend the event?

- Learners who want to build Retrieval Augmented Generation (RAG) systems.

- Learners who want to leverage the open-source Llama 2 LLM in their applications.

- Learners who want to get up to speed on best-practice tools in LLM Ops.

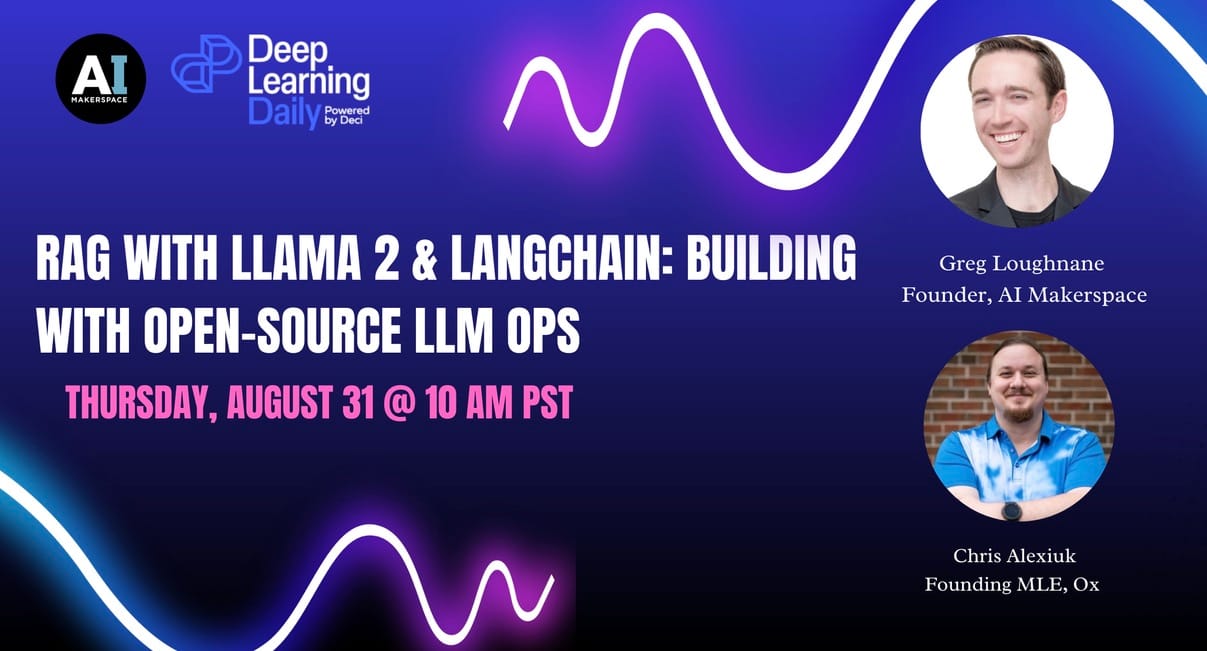

Speakers:

- Dr. Greg Loughnane is the Founder & CEO of AI Makerspace, where he serves as lead instructor for their LLM Ops: LLMs in Production course. Since 2021, he has built and led industry-leading Machine Learning & AI boot camp programs. Previously, he worked as an AI product manager, a university professor teaching AI, an AI consultant and startup advisor, and an ML researcher. He loves trail running and is based in Dayton, Ohio.

- Chris Alexiuk is the Head of LLMs at AI Makerspace, where he serves as a programming instructor, curriculum developer, and thought leader for their flagship LLM Ops: LLMs in Production course. During the day, he’s a Founding Machine Learning Engineer at Ox. He is also a solo YouTube creator and a Dungeons & Dragons enthusiast, and he is based in Toronto, Canada.
