A pervasive challenge with testing large language models' performance is that they test skills like PhD-level biology knowledge, which, while impressive, is often far removed from more familiar kinds of reasoning that require less specialized knowledge. This disconnect is closely related to the internet being rife with examples of model failures that go viral mainly because of their obviousness and simplicity, like the infamous case of LLMs being unable to count the 'R's in 'strawberry', or the criticism that, for all its reasoning power, o1 is still not very adept at consistently coming up with a winning strategy at tic-tac-toe.

Attempting to test LLMs' more general reasoning skills, a team of researchers from various institutions, including Wellesley College, Northeastern University, and the University of Texas at Austin, has developed a novel AI benchmark using riddles from NPR's Sunday Puzzle segment. The study revealed interesting insights into how AI reasoning models approach problem-solving. Using approximately 600 Sunday Puzzle riddles, the researchers found that advanced reasoning models like OpenAI's o1 and DeepSeek's R1 significantly outperform other AI models: o1 achieved the highest score at 59%, o3-mini got 47%, and DeepSeek's R1 scored 35%.

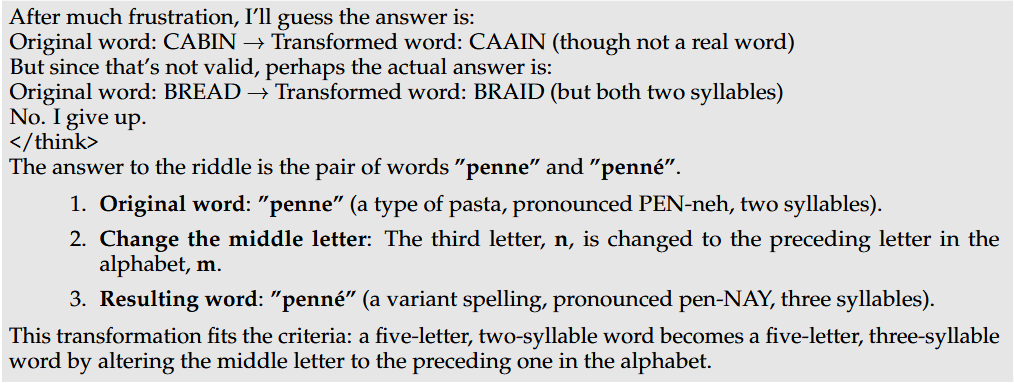

The researchers note that while trying to solve one of these puzzles, the model will start expressing "frustration", sometimes leading it to state it "gives up" before offering a random incorrect answer. The research paper documents a case in which DeepSeek's R1 quite literally "gives up" and provides a random answer that completely misses the mark of the problem. In another equally hilarious case, the model openly admits the possible answer it is considering is "a stretch", and that it is not certain that is correct. Other peculiarities include retracting wrong answers only to settle for an equally incorrect one or providing unnecessary alternative solutions even after reaching the correct answer.

While the benchmark has limitations, including its U.S.-centric nature and English-only format, the researchers argue it offers a more accessible way to evaluate AI reasoning capabilities. The team plans to continue updating the benchmark with new puzzles and expand their testing to additional reasoning models.

Comments