The Open Platform for Enterprise AI (OPEA) is the latest Sandbox Project of the Linux Foundation AI & Data, developed in part by Intel together with industry partners including Anyscale, Cloudera, Hugging Face, Qdrant, VMware, and more. OPEA has emerged as a response to the fragmentation of tools, techniques, and solutions in generative AI, which stems largely from the industry's rapid development and growing competitiveness. OPEA's approach to standardized, modular, and heterogeneous pipelines will rely on collaboration with industry partners to "standardize components, including frameworks, architecture blueprints, and reference solutions that showcase performance, interoperability, trustworthiness, and enterprise-grade readiness."

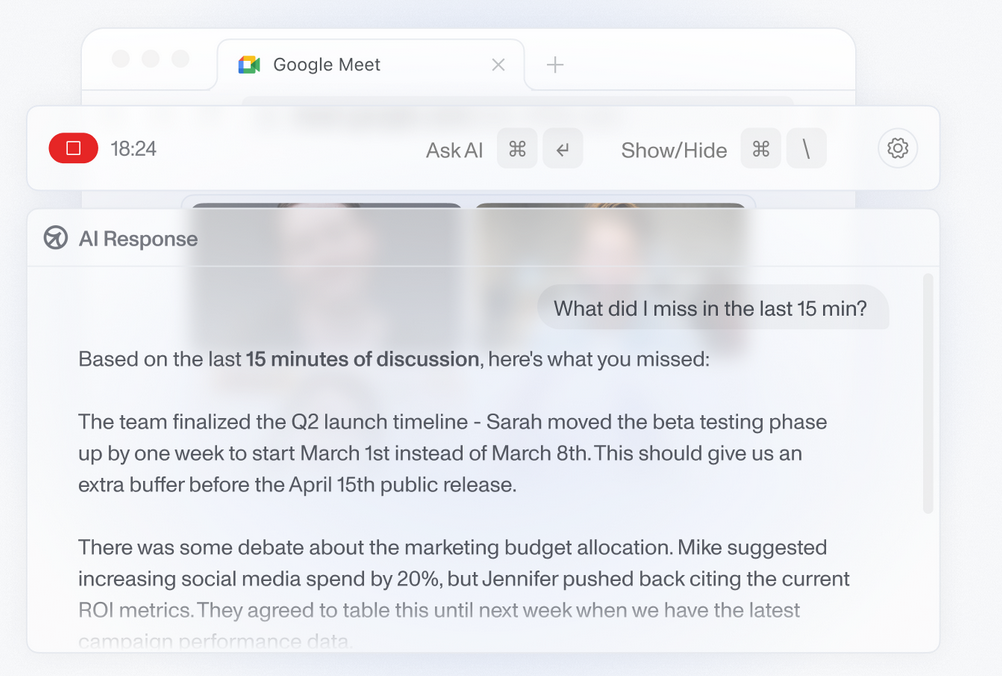

As one of the main contributors to OPEA, Intel says it plans to publish a technical conceptual framework, release reference implementations for generative AI pipelines based on Intel Xeon processors and Gaudi 2 accelerators, and expand infrastructure capacity in the Intel Tiber Developer Cloud. Intel has also taken its first steps toward these goals: it has published a set of reference implementations in the OPEA GitHub repository, including a chatbot on Intel Xeon 6 and Gaudi 2, as well as document summarization, visual question answering, and a code generation copilot on Gaudi 2. Additionally, Intel released an assessment framework that provides a standardized grading and evaluation system based on performance, trustworthiness, scalability, and resilience. Looking forward, OPEA will provide self-evaluation tests based on this framework and perform external grading and evaluation on request.
